‘Natural Bridge, Virginia’ Painting by David Johnson 1860, Credit: Public Domain

Progress of AI in 2018

The self-learning capacity of deep-learning networks continues to improve. There is hardly a scientific discipline left that does not use deep learning in data analytics to improve the quality and pace of its research. In the business-services sector, deep learning is employed to streamline administrative processes and to support analytical tasks in planning and decision making. According to several studies conducted by McKinsey, the penetration rate of deep learning varies greatly between business segments, with on average only about 15% of the potential for automation realized so far. Internal company problems, namely management’s poor comprehension of AI and a lack of trained staff, are the reasons cited for this low adoption rate. New software tools and cloud services introduced by Google, Amazon, Microsoft and IBM are aimed at solving the resource problem. In the industrial and logistics sector, the deployment of intelligent robots, partially combined with new production methods like 3-D printing, is reducing personnel costs below ‘cheap-labour’ levels, causing disruption in developing countries with low wage levels.

Pushing innovation in manufacturing and design, GANs, or ‘Generative Adversarial Networks’, have become a widely used tool for creating new visual and physical objects, including Belamy, a GAN-generated portrait that was auctioned at Christie’s late in 2018 for USD 432,500. Yann LeCun, Professor at NYU and Facebook’s chief AI scientist, has called GANs “the coolest idea in deep learning in the last 20 years.” Another AI luminary, Andrew Ng, the former chief scientist of China’s Baidu, says GANs represent “a significant and fundamental advance” that has inspired a growing global community of researchers. The magic of GANs lies in the rivalry between two neural nets. Both networks are trained on the same data set. The first one, known as the generator, is charged with producing artificial outputs that are as realistic as possible. The second, known as the discriminator, compares these with genuine information (typically images) from the original data set and attempts to determine which are real and which are fake. Based on those results, the generator adjusts its parameters until the discriminator can no longer tell what is genuine and what is artificially generated.
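The generator/discriminator rivalry described above can be sketched in a few lines of plain Python. This is a deliberately tiny toy and not how image GANs are built: the "data set" is assumed to be numbers drawn from a bell curve centred on `REAL_MEAN`, the generator has a single parameter `shift`, and the discriminator is a one-feature logistic model. All names and hyperparameters are illustrative assumptions.

```python
import math
import random

REAL_MEAN = 1.0  # assumption: the "genuine" data are samples from N(1, 1)


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=3000, batch=64, lr=0.02, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    shift = -2.0     # generator parameter: G(z) = shift + z, with z ~ N(0, 1)
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c), "probability real"

    for _ in range(steps):
        real = [rng.gauss(REAL_MEAN, 1.0) for _ in range(batch)]
        fake = [shift + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            miss = 1.0 - sigmoid(w * x + c)   # how far D is from calling it real
            grad_w += miss * x
            grad_c += miss
        for x in fake:
            fooled = sigmoid(w * x + c)       # how strongly D believes the fake
            grad_w -= fooled * x
            grad_c -= fooled
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient ascent on log D(fake), i.e. try to fool D.
        grad_shift = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        shift += lr * grad_shift

    return shift, w, c


if __name__ == "__main__":
    shift, w, c = train_toy_gan()
    print("generator shift:", round(shift, 2), "target:", REAL_MEAN)
    print("D(typical real sample):", round(sigmoid(w * REAL_MEAN + c), 2))
```

In this toy setting the generator's `shift` should drift toward `REAL_MEAN`, at which point the discriminator's verdict on a typical sample hovers around 0.5: it can no longer tell genuine from generated. Real GANs replace both one-line models with deep networks, and their training is considerably less well behaved.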

Problems emerging in 2018

The rising complexity of deep-learning algorithms and networks is beginning to backfire, as a lack of transparency generates distrust and concern about the ethical implications of possible errors in the computed results. IBM’s Watson deep-learning software, which provides medical advice and support services to doctors, has experienced serious setbacks, largely due to the insufficient quality of the datasets used to train the system. The capacity of GANs to generate ‘artificial faces’ that can no longer be distinguished from real humans is a serious ‘fake threat’ in internet communications. A machine designed to create realistic fakes is a perfect tool for purveyors of fake news wanting to influence everything from stock prices to elections. Probably the biggest blow to trust in internet communications was dealt by Facebook, with numerous incidents in which private data was leaked for commercial and political purposes. On top of this waning trust in social media, cybercrime involving the theft of data or intrusion into life-supporting infrastructure such as water or energy supply has reached a threat potential capable of causing major disruption to a booming AI product and service industry.

Within the consumer sector, deep-learning applications such as natural language processing or image recognition, offered as ‘free services’ in exchange for private customer data, are meeting growing resistance from government regulators. Europe’s new General Data Protection Regulation (GDPR) is just the beginning of extensive efforts to regulate the potential misuse of internet services. Antitrust regulation, originally implemented as a barrier against domination of the free market, has failed, threatening a fair distribution of wealth. There is increasing concern among social and political institutions that our free-market economy might deteriorate, causing upheavals similar to those of the 1920s, when industrialization was causing ‘slave-like’ working and living conditions for many and prompted calls for human rights and ethical rules for a functioning society.

How to proceed in 2019 and beyond

Alongside the regulatory and ethical issues, which will continue to be discussed by government and social institutions well into 2019 and beyond, we are likely to witness efforts to restore trust, both in AI applications and in the internet communications that provide the backbone for these applications:

- Provide secure communication that protects private and corporate data and gives anyone, anywhere in the world, secure high-speed access.

- Move from a purely technology-driven AI to a human centric AI, where AI augments humans to reap the potential benefits of AI while adhering to ethical standards as part of the application.

Secure communication:

Adding security to the internet infrastructure is primarily a task of identifying the weaknesses and intrusion potential in existing applications and closing the respective loopholes. Taking a longer-range view, it seems likely that an entirely new technological concept will be necessary. One solution, dubbed the quantum internet, exploits the unique effects of quantum physics and is fundamentally different from the classical internet we use today. This type of network already exists in prototype form, most notably in China, where a quantum network extends over some 2,000 kilometres and connects major cities including Beijing and Shanghai.

A prominent team of quantum-internet researchers at the Delft University of Technology in the Netherlands has released a roadmap laying out the stages of quantum-network implementation and detailing the technological challenges that each tier of the roadmap would involve. In stage 1, users will enter the quantum process, in which a sender creates quantum states, typically for photons. These would be sent to a receiver, either through an optical fibre or using a laser pulse beamed across open space. At this stage, any two users will be able to create a private encryption key that only they know. In stage 2, the quantum internet will harness the powerful phenomenon of entanglement. Its first goal will be to make quantum encryption essentially unbreakable. Most of the techniques that this stage requires already exist, at least as rudimentary lab demonstrations. Last year, physicists at MIT and the University of Vienna provided strong evidence of the existence of quantum entanglement, the seemingly far-out idea that two particles, no matter how distant from each other in space and time, can be inextricably linked in a way that defies the rules of classical physics.

Stages 3 to 5 will, for the first time, enable any two users to store and exchange quantum bits, or qubits. These are units of quantum information, similar to classical 1s and 0s, but which can be in a superposition of both 1 and 0 simultaneously. Qubits are also the basis for quantum computation, which already exists at the experimental level. Achieving the final stage will require ‘quantum repeaters’, devices that can help to entangle qubits over larger and larger distances.
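To make the stage-1 idea of a private key shared by two users more concrete, here is a rough classical simulation of BB84, a well-known quantum key distribution protocol. The roadmap described above does not name a protocol, so the choice of BB84 is an assumption, as are all function and variable names; no real quantum hardware or library is involved.

```python
import random

BASES = "+x"  # rectilinear and diagonal polarisation bases


def bb84_sift(n_photons=256, seed=1, eavesdrop=False):
    """Classically simulate BB84 key sifting; returns (sender_key, receiver_key)."""
    rng = random.Random(seed)
    sender_bits = [rng.randint(0, 1) for _ in range(n_photons)]
    sender_bases = [rng.choice(BASES) for _ in range(n_photons)]
    receiver_bases = [rng.choice(BASES) for _ in range(n_photons)]

    receiver_bits = []
    for bit, prep_basis, meas_basis in zip(sender_bits, sender_bases, receiver_bases):
        if eavesdrop:
            # An interceptor has to measure in a guessed basis. A wrong guess
            # randomises the bit, and the photon travels on in the guessed basis.
            tap_basis = rng.choice(BASES)
            if tap_basis != prep_basis:
                bit = rng.randint(0, 1)
            prep_basis = tap_basis
        # Measuring in the photon's basis reads the bit; otherwise the result is random.
        receiver_bits.append(bit if meas_basis == prep_basis else rng.randint(0, 1))

    # The two users publicly compare bases (never bits) and keep matching positions.
    keep = [i for i in range(n_photons) if sender_bases[i] == receiver_bases[i]]
    return [sender_bits[i] for i in keep], [receiver_bits[i] for i in keep]


if __name__ == "__main__":
    sender_key, receiver_key = bb84_sift()
    print("sifted key length:", len(sender_key))
    print("keys identical:", sender_key == receiver_key)

    tapped_sender, tapped_receiver = bb84_sift(eavesdrop=True)
    errors = sum(a != b for a, b in zip(tapped_sender, tapped_receiver))
    print("mismatches with an eavesdropper:", errors)
```

Without an interceptor the two sifted keys agree exactly. With one, roughly a quarter of the sifted bits are expected to disagree, which is how the two users can detect that the channel was tapped by comparing a sample of their key.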

Human Centric AI:

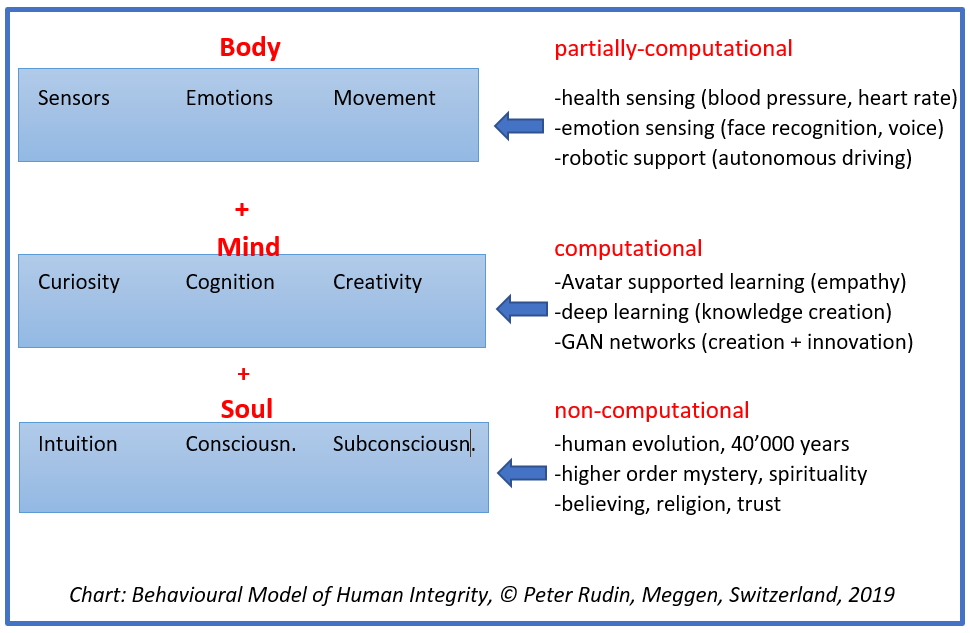

Most experts agree that AI should augment humans as a tool in order to advance humanity to the next level of evolution. Several initiatives and organizations, such as ‘AI Now’ or ‘The Future of Life Institute’, have emerged to address human concerns about the human-centric application of AI. To advance this goal, one must agree on the characteristics that make humans unique vis-à-vis AI and on how AI can support this uniqueness. Instead of asking how AI can replace humans, the more important question is how AI can support humans in their intrinsic uniqueness to achieve a common goal. To contemplate this question, we need a philosophical model that addresses the psychological and physical characteristics of the human being. The graph above is a thought model providing such a definition.

Descartes’ ‘Mind-Matter Dualism’ has led to a heavily science- and technology-oriented view of humanity. However, experiencing ‘Body + Mind + Soul’ as an integrated human capacity is one of the fundamental drivers of human behaviour. With respect to our ‘Mind’, AI should be used where computational means can overcome human limitations in areas such as memory capacity, learning ability, knowledge generation and innovation. Once issues such as ‘common sense’ or ‘semantic understanding’ are resolved, AI machines will have reached a level of intelligence equivalent to the biological intelligence of humans. With respect to our ‘Body’, biotechnology is making great strides in fighting off cancer and in reducing the threat of diabetes and heart failure. However, understanding how the human brain functions, that is, cracking the neural code, is still a long way off. In fact, it might remain a mystery like consciousness or intuition, positioned outside the realm of computational interpretation. Consequently, the human body remains only a partially computational target for the application of AI. Intuition, consciousness and subconsciousness remain an area where computational efforts have so far failed. As some scientists, such as Christof Koch of the Allen Institute for Brain Science, have stated, it appears that we currently lack the computational tools to advance research in the domain defined as ‘Soul’.

Conclusion

We do not yet know or understand how AI will eventually affect human behaviour in areas such as decision making. We do know, however, that the value of humans vis-à-vis intelligent machines lies in their capacity to integrate mind, body and soul, something intelligent machines cannot achieve. The challenge our society faces is twofold: on the one hand, we must advance self-knowledge along the components described by the model and, on the other, we have to learn how best to support this goal by applying AI and its computational means.

Contrasting this view, in his 2019 New Year’s essay, published by Edge.org, the historian George Dyson makes the following comment:

“Nature’s answer to those who sought to control nature through programmable machines is to allow us to build machines whose nature is beyond programmable control. The next revolution will be the ascent of analogue systems over which the dominion of digital programming comes to an end. For those seeking true intelligence, autonomy, and control among machines, the domain of analogue computing, not digital computing, is the place to look.”

Dear Peter,

I am glad that, for once, all the serious concerns about AI are being raised. As you say, misuse undermines trust in AI. And the more technical the researchers are, the less aware they are of the ethical implications and the potential for misuse, as we already saw with the developers of the atomic bomb.

I belong to another discussion group, the “autopoetic circle”, where we have also been discussing AI since our last meeting. One of the participants is Jürg Tödtli, whom you met at the NZZ forum on AI. He has spent a large part of his professional life working on automation, control engineering and robotics. At our next meeting, on Wednesday, 9 January, we will discuss the controversial issues surrounding AI. If you have the time and inclination, you are of course very welcome.

Your graphic on body, mind and soul touches on an eternal topic, one that I too studied thoroughly as part of my kinesiology training (partly with a physicist and chemist who explained the effects of kinesiology using quantum theory). In my view and experience, the three cannot be separated; they constantly influence one another, as anyone who tries to reach the state of emptiness and all-consciousness in meditation can confirm. And you surely know as well that one can no longer think clearly when ill or under great emotional stress. This coming Wednesday we will also discuss what is happening to us and to AI, and what technology is doing to us.

Or a brief piece of feedback from my nephew, who is looking for a position as an IT working student: he had to spend hours (about 5 hours) conducting a Skype interview with a robot. With a human, the whole thing would have taken half an hour at most. How sensible is that? How does something like this affect the public’s attitude?

With warm regards, also to Gertie, and best wishes for the start of the New Year,

Katharina Gattiker