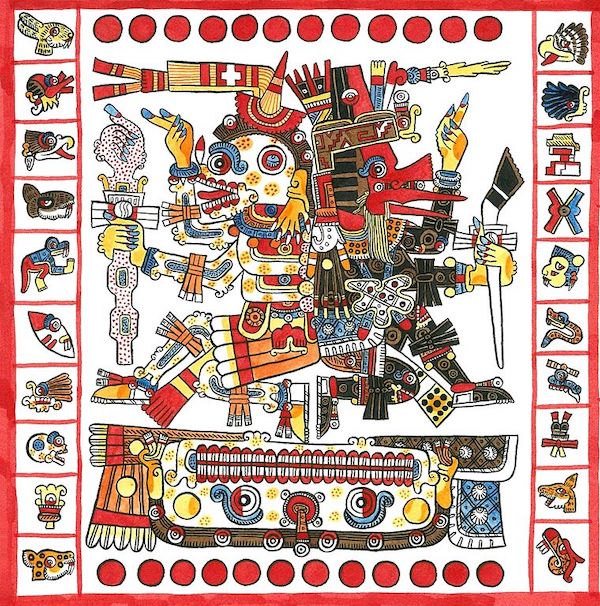

Codex Borgia: God of the Underworld. Picture credit: Wikimedia

Introduction

Artificial General Intelligence (AGI) can be defined as the ability of a machine to perform any task that a human can. Although existing applications show that AI can perform some tasks more efficiently than humans, they are not generally intelligent; that is, they are exceedingly good at a single function while having no capability to do anything else. Today’s ‘narrow AI’ systems have to be trained on massive volumes of data, whereas humans learn from significantly fewer experiences. An AGI system would not only learn from relatively little training data but would also transfer knowledge gained in one domain to another. Such a capability would make the learning process of artificially intelligent systems more similar to that of humans, drastically reducing training time while enabling the machine to gain competence in multiple areas. Issues such as ‘common sense’ and ‘theory of mind’ represent major challenges in moving from ‘narrow AI’ to AGI. Ongoing AI research focuses on artificial neural networks, neurobiology and neurophilosophy. Many researchers believe that AGI will be reached sometime this century; others consider AGI to be a myth. Reflecting on theory of mind provides one way of testing AGI’s viability.

Theory of mind

Theory of mind assumes that others have minds analogous to one’s own. This assumption is grounded in reciprocal social interaction, as observed in joint attention, the functional use of language, and the understanding of others’ emotions and actions. Having a theory of mind allows one to attribute thoughts, desires, and intentions to others, and to use them to predict or explain their actions. Theory of mind appears to be an innate potential in humans that requires many years of social and other experience to develop fully. Some theories of cognitive development maintain that theory of mind is a by-product of a broader hypercognitive ability of the human mind to register, monitor, and represent its own functioning. Empathy is a related concept: the recognition and understanding of others’ states of mind, including their beliefs, desires and, particularly, emotions. It is often characterized as the ability to “put oneself into another’s shoes”. Whereas empathy is described as emotional perspective-taking, theory of mind is defined as cognitive perspective-taking. Social neuroscience has also begun to study these abilities by imaging people’s brains while they perform tasks that require understanding an intention, belief or other mental state in others. By being able to form accurate ideas about what other people are thinking, we are better able to respond accordingly.

Development of one’s theory of mind

The greatest growth in this ability to attribute mental states is believed to occur during the preschool years, between the ages of 3 and 5. However, a number of factors are believed to influence the development of a theory of mind; some researchers have suggested, for example, that gender and the number of siblings in the home can affect how it emerges. Theory of mind develops as children gain experience with social interactions. Play and relationships with parents and peers allow children to develop stronger insight into how other people’s thinking may differ from their own. Social experiences also help children learn how thinking influences actions. Theory of mind skills tend to improve progressively and sequentially with age. While many theory of mind abilities emerge during the preschool years, research has shown that children between the ages of 6 and 8 are still developing these skills. One study found that children typically progress through five theory of mind abilities in a standard sequential order. These tasks of understanding, from easiest to most difficult, are:

- Different people may have different desires, even about the same thing

- People can have different beliefs about the same thing or situation

- People may not know that something is true if they have not had access to the relevant information

- People can hold false beliefs about the world

- People can have hidden emotions, or they may act one way while feeling another way

The ability to infer others’ thinking is critical to human cognition and social interaction: it allows us to build and maintain relationships, communicate effectively, and work cooperatively to achieve common goals. This ability is so important that when it is disrupted, as in some cases of autism, essential mental functions such as language learning and imagination can become impaired.

Simulating theory of mind with AI

Recognizing other minds comes effortlessly to humans, but it is no easy task for a computer. A computer equipped with a theory of mind would recognize you as a conscious system with a mental world of your own. We often forget that minds are not directly observable; objectively speaking, they are invisible. Programming a computer to understand that an electrified piece of tissue called the brain has a rich inner subjective world is therefore a challenging computational task, to say the least. Techniques such as deep learning are not, on their own, sufficient for mentalizing. Today’s most capable AI systems may be able to do some very sophisticated things, but they lack the basic features of a theory of mind. Until neuroscientists understand the physical mechanisms underlying qualitative, subjective experience, theory of mind will likely remain an unsolved programming and engineering challenge. Nevertheless, one starting point for creating robots or screen-based avatars that can mentalize like humans is to mirror the development of theory of mind in children. Both clinical and laboratory research show that social interaction begins with the formation of basic mechanisms of attention, because the focus of attention parallels the focus of the mind. While these basic social cues, along with others such as pointing gestures and head nods, are critical to the foundation of a theory of mind, equally important is the ability to recognize basic emotional expressions, which carry information about desires, dislikes, and fears. There has been progress in interpreting facial expressions and language through emotion sensing. However, we are still far from connecting this sensory information to a theory of mind.

DeepMind’s ‘ToMnet’, a computational model of theory of mind

Anyone who has had a frustrating interaction with Siri or Alexa knows that digital assistants do not behave like humans. What they lack is an awareness of others’ beliefs and desires. “Theory of mind is clearly a crucial ability for navigating a world full of other minds,” says Alison Gopnik, a developmental psychologist at the University of California, Berkeley. Some of today’s AI systems can label facial expressions such as “happy” or “angry” (a skill associated with theory of mind), but they have little understanding of human emotions or of what motivates us. Many AI algorithms are not fully written by programmers but instead rely on machine learning. The resulting computer-generated solutions are often black boxes, with algorithms too complex for human insight. Responding to this limitation, Neil Rabinowitz, a research scientist at DeepMind, and his colleagues created a machine theory of mind called “ToMnet”. ToMnet observes other AI agents to learn how they work. It comprises three neural networks, each made of small computing elements and connections that learn from experience, loosely resembling the human brain. The first network learns the tendencies of other AIs based on their past actions. The second forms an understanding of their current “beliefs”. The third takes the output of the other two networks and, depending on the situation, predicts the AI’s next moves. Gopnik says that ToMnet can predict other AIs’ behaviour based on what they know about themselves, but that this still does not put it on the same level as human children. Josh Tenenbaum, a psychologist and computer scientist at MIT, has also worked on computational models of theory of mind. He says ToMnet infers beliefs more efficiently than his team’s system, which is based on a more abstract form of probabilistic reasoning rather than on neural networks. But ToMnet’s understanding is more tightly bound to the contexts in which it was trained, he adds, making it less able to predict behaviour in radically new environments, as his system or even young children can do.
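To make the three-part data flow concrete, here is a minimal Python sketch. It is not DeepMind’s code: in ToMnet all three components are trained neural networks, whereas here each is replaced by a simple hand-written stand-in (action-frequency counts) so that only the flow of information from past behaviour and current behaviour to a prediction is visible. The function names loosely echo the component names used for ToMnet (character net, mental state net, prediction net), but the action set, the `window` and `weight` parameters, and the counting logic are hypothetical illustrations.

```python
from collections import Counter

# Hypothetical action set for a gridworld agent (an assumption, not from ToMnet's code).
ACTIONS = ("up", "down", "left", "right", "stay")


def _frequencies(actions):
    """Normalised frequency of each action; all zeros if there are no actions."""
    counts = Counter(actions)
    total = len(actions)
    return {a: (counts[a] / total if total else 0.0) for a in ACTIONS}


def character_net(past_episodes):
    """Stand-in for the first network: summarise an agent's tendencies
    from its past episodes (here, how often it took each action)."""
    return _frequencies([a for episode in past_episodes for a in episode])


def mental_state_net(current_episode, window=3):
    """Stand-in for the second network: summarise the agent's current
    state from the latest steps of the ongoing episode."""
    return _frequencies(current_episode[-window:])


def prediction_net(character, mental_state, weight=0.5):
    """Stand-in for the third network: combine both summaries into a
    probability distribution over the agent's next action."""
    scores = {a: weight * character[a] + (1 - weight) * mental_state[a] for a in ACTIONS}
    total = sum(scores.values())
    if total == 0:  # nothing observed yet: fall back to a uniform guess
        return {a: 1 / len(ACTIONS) for a in ACTIONS}
    return {a: s / total for a, s in scores.items()}


# Usage: an agent that usually heads right but has just started moving up.
past = [["right", "right", "up"], ["right", "right", "right"]]
current = ["up", "up"]
probs = prediction_net(character_net(past), mental_state_net(current))
predicted = max(probs, key=probs.get)  # "up": recent behaviour outweighs the long-run habit here
```

The fixed `weight` is where this sketch is most obviously a simplification: in a learned model, how much to trust long-run tendencies versus the current episode would itself be learned from data rather than set by hand.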

Future Directions

To Alan Winfield, a professor of robot ethics at the University of the West of England, theory of mind is the secret ingredient that will eventually let AI “understand” the needs of people, things, and other robots or avatars. “The idea of putting a simulation inside a robot is a really neat way of allowing it to actually predict the future,” he said. Winfield is promoting something entirely different from machine learning, in which multiple layers of neural nets extract patterns and “learn” from large datasets. Rather than relying on learning, AI would be pre-programmed with an internal model of itself and the world that allows it to answer simple “what-if” questions. For example, when navigating a narrow corridor with an oncoming robot, the AI could simulate turning left, turning right, or continuing on its path, and determine which action is most likely to avoid a collision. This internal model essentially acts as a “consequence engine,” says Winfield, a sort of “common sense” that guides the robot’s actions by predicting those of others around it. Eventually, Winfield hopes to endow AI with a sort of storytelling ability. The internal model that the AI has of itself and others lets it simulate different scenarios and, crucially, tell a story of what its intentions and goals were at the time. This is drastically different from deep learning algorithms, which normally cannot explain how they reached their conclusions.
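The corridor example can be sketched as a tiny “what-if” loop in Python. This is a toy illustration under stated assumptions, not Winfield’s consequence engine: the corridor is a hypothetical three-lane grid, each robot moves one cell per tick, the oncoming robot’s behaviour is supplied as a single predicted lane change, and the helper names (`Robot`, `step`, `collides`, `consequence_engine`) are invented for this example. The robot simulates each candidate action with its internal model, discards those that lead to a predicted collision, and keeps the per-action outcomes so it can report why it chose what it did.

```python
from dataclasses import dataclass

LANES = 3  # hypothetical corridor width: lanes 0 (left), 1 (centre), 2 (right)
# Candidate actions in order of preference (keep going if that is safe).
# Lane changes are absolute corridor offsets for both robots.
ACTIONS = {"straight": 0, "left": -1, "right": +1}


@dataclass(frozen=True)
class Robot:
    lane: int
    y: int   # position along the corridor
    dy: int  # +1 moving up the corridor, -1 moving down


def step(robot, lane_change=0):
    """Internal model of one tick: change lane (clamped to the corridor), then move."""
    lane = min(max(robot.lane + lane_change, 0), LANES - 1)
    return Robot(lane, robot.y + robot.dy, robot.dy)


def collides(me, other, my_change, other_change, horizon=5):
    """Simulate both robots for `horizon` ticks; lane changes happen on the first tick."""
    for t in range(horizon):
        new_me = step(me, my_change if t == 0 else 0)
        new_other = step(other, other_change if t == 0 else 0)
        gap_before, gap_after = me.y - other.y, new_me.y - new_other.y
        # Collision: same lane and either on the same cell or passed through each other.
        if new_me.lane == new_other.lane and (gap_after == 0 or gap_before * gap_after < 0):
            return True
        me, other = new_me, new_other
    return False


def consequence_engine(me, other, predicted_other_change=0, horizon=5):
    """Ask "what if?" for every action; return the first safe one and the full report."""
    report = {name: not collides(me, other, change, predicted_other_change, horizon)
              for name, change in ACTIONS.items()}
    choice = next((name for name, safe in report.items() if safe), None)
    return choice, report


me = Robot(lane=1, y=0, dy=+1)
oncoming = Robot(lane=1, y=4, dy=-1)
choice, report = consequence_engine(me, oncoming)
# report == {"straight": False, "left": True, "right": True}, so choice == "left"
```

Because the report records which actions were predicted to end in a collision, it is a crude version of the “story” described above: the robot can say it turned left because going straight was predicted to collide. If the model instead predicts that the oncoming robot will move into lane 0 (`predicted_other_change=-1`), going straight becomes safe and is chosen.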

Conclusion

Without some sort of radical breakthrough in design, sentient machines that can comprehend and communicate empathy and emotions will remain science fiction. It is improbable that a computer with a mind, or with a theory of mind, will suddenly appear simply because of increases in processing power and speed. However, we can expect intense research in artificial neural networks, combined with advances in neuroscience, to steadily improve AI to the point where new applications can be realized at significantly lower cost and with much less time spent labelling data. What remains, however, are neurophilosophical questions, for example whether consciousness or theory of mind can be computationally modelled to deliver humanlike intelligence. Life is analogue, not digital. AGI approximates reality. The idea that digital intelligence can reach biological intelligence is likely to remain a myth. Our challenge is to augment life with the opportunities and benefits that digital intelligence can provide.