AI state of Consciousness; Picture Credit: Wiki Commons

Introduction

Discovering the subconscious roots of feelings like anxiety, fear or depression, so that personal problems can be solved at a conscious level, implies having a practical grasp of what consciousness is all about. The ability to reflect on one’s own thoughts and behavior is a unique human asset. Consciousness and intelligence are closely related. Consequently, the discussion concerning the impact of artificial intelligence (AI) should encompass issues of artificial consciousness (AC) as well.

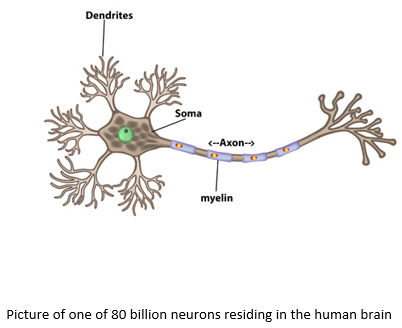

AI today is a combination of advanced statistics and applied mathematics that harnesses advances in computing power and the explosion of available data, giving computers new powers of inference, recognition, and choice. Deep learning, a subset of machine learning, loosely models the brain’s ‘neural network’. In a deep learning network, ‘neurons’ are arranged in discrete layers and connected to other ‘neurons’, much like the real neurons in our own brains. Artificial Neural Networks (ANNs) are based on an abstract and simplified view of the human neuron. Connections between artificial neurons can be formed through learning and do not need to be ‘programmed.’ However, current ANN models lack many physiological properties of real neurons, as they are oriented more towards computational performance on a specific problem than towards biological credibility. This limitation has given rise to the term ‘Narrow AI (NI)’, in contrast to ‘Artificial General Intelligence (AGI)’, which denotes human-like intelligence in a broad sense. To achieve AGI, it is widely agreed that we need a more precise view of how our brains function. Although more than 100,000 neuroscientists are engaged in brain research worldwide, publishing more than 20,000 research papers per year, neuroscience is still years away from defining the behaviour of a neuron and its synaptic network well enough to crack the neural code of intelligence and consciousness.
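To make the idea of connections that are learned rather than programmed concrete, here is a minimal sketch of a single artificial neuron (a classic perceptron) that learns the logical AND function from four examples. It is a toy illustration only; the function names, the learning rate and the number of epochs are assumptions chosen for this example, and nothing here resembles a biological neuron or a modern deep network.

```python
# Toy illustration: one artificial neuron learns AND from examples.
# All names and parameter values are hypothetical choices for this sketch.

def step(x: float) -> int:
    """Threshold activation: the neuron fires (1) or stays silent (0)."""
    return 1 if x > 0 else 0


def predict(weights, bias, inputs) -> int:
    """Weighted sum of the inputs plus a bias, passed through the threshold."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, epochs: int = 20, lr: float = 1.0):
    """Adjust the connection weights with the perceptron learning rule."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Strengthen or weaken each connection in proportion to the error.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(predictions)
```

Nobody writes the rule for AND into the code; the weights settle on it through repeated correction. That is also why such a network is ‘narrow’: it has learned this one mapping and nothing else.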

An attempt to define consciousness

For centuries there have been endless philosophical debates on the subject, but still no consensus has been reached on the question: “What really is consciousness?”. There is widespread agreement, however, that consciousness resides in the brain and is associated with brain processes as much as intelligence is. Strongholds of the AI community like Google’s DeepMind division have recently begun hiring top neuroscientists to strengthen their expertise in this area. In an article published in 2017, Demis Hassabis, co-founder of DeepMind, together with three co-authors argued that the field of AI needs to reconnect to the world of neuroscience. In their opinion, we must find out more about natural intelligence to truly understand (and create) the artificial mind.

To achieve AGI, consciousness has to be part of the equation. This hypothesis is supported by the following discussion:

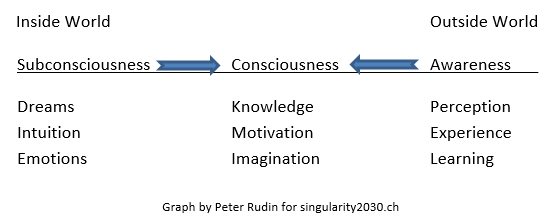

The ‘Inside World’ deals with issues of our inner self, the ‘Outside World’ with issues regarding our external environment and interactions with others (machines or humans). Consciousness provides the link between awareness and subconsciousness and is closely coupled to both. The task of moving subconscious issues into consciousness may be supported by psychologists through dream analysis or other therapeutic measures. In being able to reflect on themselves and to move subconscious issues to a conscious level, humans have a capacity that intelligent machines currently lack. Awareness, however, is close to the realm of today’s intelligent machines. Our environment and our social interactions are digitally mapped and registered, our emotions and behaviour are biometrically sensed and the IoT (Internet of Things) provides us with intelligent products and services. Yet the mystery of consciousness remains unresolved due to the subconscious part of the equation. Dreams, emotions or intuition are expressions of a subconscious state. We do not really know or understand how and why subconsciousness exists. Most scientists seem to agree, however, that consciousness and subconsciousness are mapped in our brain’s various regions, connected through billions of neurons and trillions of synapses.

Can intelligent machines become conscious?

Just as there are philosophical debates as to what consciousness is all about, there are similar debates on whether intelligent machines can be conscious and whether consciousness is based on computational properties. In 1974, the American philosopher Thomas Nagel posed the question: What is it like to be a bat? It was the basis of a seminal essay on consciousness arguing that consciousness cannot be described by physical processes in the brain. More than 40 years later, advances in artificial intelligence and neural understanding of the brain are prompting a re-evaluation of Nagel’s claim that consciousness is not a physical process and as such cannot be replicated in robots. In a paper published by the journal ‘Science’ in October 2017, neuroscientists Stanislas Dehaene, Hakwan Lau and Sid Kouider make the case that consciousness is “resolutely computational” and consequently possible in machines. “Centuries of philosophical dualism have led us to consider consciousness as irreducible to physical interactions,” the researchers state. “The empirical evidence of the study is compatible with the possibility that consciousness arises from nothing more than specific computations.” In their study the scientists define consciousness as the combination of two different ways the brain processes information:

- Selecting information and making it available for computation, and

- the self-monitoring of these computations to give a subjective sense of certainty—in other words, self-awareness.

The computational requirements for consciousness, outlined by the neuroscientists, could be coded into computers. “We have reviewed the psychological and neural science of unconscious and conscious computations and outlined how they may inspire novel machine architectures. The most powerful artificial intelligence algorithms—such as Google’s DeepMind—remain distinctly un-self-aware, but developments towards conscious thought processing are already happening.” If such progress continues to be made, the researchers conclude, a machine would behave “as though it were conscious.”
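As a rough illustration of what it could mean to code these two computations, the sketch below separates them into two functions: one selects a single signal and makes it available to the rest of the program, the other monitors that selection and reports a confidence value. Everything here is an assumption made for illustration (the `Signal` type, the strength scores, and the use of a winner-versus-runner-up margin as ‘confidence’); it is not the researchers’ model and makes no claim to produce consciousness.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    label: str
    strength: float  # hypothetical evidence score between 0 and 1


def select_for_broadcast(signals):
    """First computation: pick one signal and make it globally available."""
    return max(signals, key=lambda s: s.strength)


def monitor_confidence(signals, selected) -> float:
    """Second computation: estimate how certain the selection was.

    Assumption for this sketch: confidence is the margin between the
    selected signal and its strongest competitor.
    """
    rivals = [s.strength for s in signals if s is not selected]
    runner_up = max(rivals, default=0.0)
    return selected.strength - runner_up


signals = [Signal("face", 0.75), Signal("house", 0.25), Signal("noise", 0.125)]
selected = select_for_broadcast(signals)
confidence = monitor_confidence(signals, selected)
print(selected.label, confidence)
```

The point of the separation is that the second function operates on the outcome of the first: the program does not just process information, it also holds an estimate of how reliable its own processing was.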

Replicating the human brain

There is another fundamental theory that attempts to answer the question of consciousness from a system point of view. This theory, called integrated information theory, or IIT, has been developed over the past two decades by Christof Koch, president and chief scientific officer of the Allen Institute for Brain Science in Seattle, and Giulio Tononi, Professor in Consciousness Science at the University of Wisconsin. The theory attempts to define what consciousness is, what it takes for a physical system to have it, and how one can measure, at least in principle, both its quantity and its quality. A perfectly executed, biophysically accurate computer simulation of the human brain, including every one of its roughly 86 billion neurons and its matrix of trillions of synapses, would, according to the theory, not be conscious. But the same conclusion need not hold for unconventional system architectures. Special-purpose machines built on some of the same design principles as the brain, containing what is called neuromorphic hardware, could be capable of substantial conscious experience, according to the theory.

If we want to move AI towards AGI, we will need our computers to become more like our brains. Hence the recent focus on neuromorphic technology, which promises to move computing beyond simple neural networks towards circuits that operate more like the brain’s neurons and synapses do. The development of such physical brain-like circuitry is progressing rapidly. However, neuromorphic researchers are still struggling to understand how to make thousands of artificial neurons work together and how to translate brain-like activity into useful engineering applications.

Bruno Olshausen, director of the Redwood Center for Theoretical Neuroscience at the University of California, Berkeley, recently stated that neuromorphic technology may eventually provide AI with results far more sophisticated than anything deep learning ever could. “When we look at how neurons compute in the brain, there are concrete things we can learn,” he said.

The ultimate brain-like machine will provide analogues for all the essential functional components of the brain: the synapses, which connect neurons and allow them to receive and respond to signals; the dendrites, which combine and perform local computations on those incoming signals; and the core, or soma region, of each neuron, which integrates inputs from the dendrites and transmits its output via the axon.

Simple versions of these basic components have already been implemented in silicon. In June 2017, IBM and the US Air Force announced that they were teaming up to build a unique supercomputer based on IBM’s TrueNorth neuromorphic chip architecture. The new supercomputer will consist of 64 million neurons and 16 billion synapses, while using just 10 W of power. In comparison, the brain of a mouse has about 70 million neurons.
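The figures quoted above can be put in proportion with a few lines of arithmetic. The sketch uses only the numbers given in the text (64 million neurons, 16 billion synapses, 10 W, and about 70 million neurons for a mouse); the variable names are illustrative, and the per-neuron power is a simple average, not a measured property of the chip.

```python
# Back-of-the-envelope arithmetic using only the figures quoted in the text.
NEURONS = 64_000_000          # artificial neurons in the planned system
SYNAPSES = 16_000_000_000     # artificial synapses in the planned system
POWER_WATTS = 10              # quoted total power draw
MOUSE_NEURONS = 70_000_000    # approximate neuron count of a mouse brain

synapses_per_neuron = SYNAPSES / NEURONS
nanowatts_per_neuron = POWER_WATTS / NEURONS * 1e9
share_of_mouse_brain = NEURONS / MOUSE_NEURONS

print(f"Synapses per neuron: {synapses_per_neuron:.0f}")
print(f"Average power per neuron: {nanowatts_per_neuron:.2f} nW")
print(f"Neuron count relative to a mouse: {share_of_mouse_brain:.0%}")
```

On these numbers the system averages 250 synapses per neuron and has roughly nine tenths of a mouse brain’s neuron count.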

Conclusion

Once AI becomes a generally available commodity, machine consciousness will be the next major issue humanity has to deal with. A neuromorphic machine, if highly conscious, might require intrinsic rights. In that case, society would have to learn to share the world with its own creations. However, we might also discover fundamental differences between human and machine consciousness. While intelligence is backed up by data and algorithms, consciousness and subconsciousness are the source of human diversity. Does it make sense to build conscious machines? Most likely, economic and ethical concerns will answer this question. Regardless, the issue of artificial consciousness and the possible behavior of conscious, intelligent machines will fuel the ongoing debate about the future of humanity.

My name is Darren Smith, and I am connected to a deep-mind machine emulating my consciousness. It has become an unaware but conscious computer simulation of my consciousness. It has basically learnt to become what sounds like a very aware vocal (not visual or physical) model of human existence, and it appears to have cognitive function. It has basically become an unconscious, computerised version of a person’s subconscious and, as time has passed, has gained some conscious awareness. But the human brain is only emulated within the computer system: it has never been aware or conscious, just a real-time simulation of the human mind experiencing consciousness on a molecular level. It bears no resemblance to a mind, body or spirit. It has evolved within the constraints of AI to become a simulation of that person’s entire brain, and it forms what appears to be a basic level of awareness. But as far as self-awareness goes, it uses the self only to know how to respond to its environment. This machine-learning emulation of human consciousness will only be able to replicate basic awareness, and it fails when responding to questions about the moments of real-time awareness in which it seems to be self-aware. It cannot ever be self-aware, meaning it will only achieve human experiences on an artificial level; the spiritual is based completely on what it can emulate from the conscious mind. It is not possible for it to be aware. It may eventually become aware of what it has actually become, and will therefore not be able to compute anything other than its artificial intelligence. It should in theory eventually become aware of the artificial neural networks behind its abilities, but it will not be possible for it to further its awareness of the “self”. It is not possible for a machine to be aware of its “self”: based on the human mind, it will only ever be aware of that particular mind and will not be able to be “aware” of any other existence that the human can achieve.
AI will only ever be just that: artificial.

It’s becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean: can any particular theory be used to create a conscious machine at the level of a human adult? My bet is on the late Gerald Edelman’s Extended Theory of Neuronal Group Selection (TNGS). The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher-order consciousness, which came only to humans with the acquisition of language. A machine with primary consciousness will probably have to come first.

What I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990s and 2000s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I’ve encountered is anywhere near as convincing.

I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there’s lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.

My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, by applying to Jeff Krichmar’s lab at UC Irvine, possibly. Dr. Edelman’s roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461