Picture Credit: Charlie Chaplin in the movie ‘Modern Times’ (1936)

Introduction

Imagine you are watching an educational TV show in which a well-known expert explains the history of the Roman Empire. When you press a designated button on your remote control or touch a symbol on your tablet, the expert switches into conversation mode and offers to answer any questions you might have. You have moved from a one-way presentation to an interactive conversation with an AI-Machine. The person you see is a human-like copy of the same expert, but now you can talk to him via the built-in microphone and the switched-on camera of your device (TV, PC, tablet or smartphone). As he responds to your questions, the expert’s movements and facial expressions are guided by AI software. If the answer has a funny note, he will smile at you; if he talks about the atrocities of war, his expression is likely to show sadness. Soon you notice that his answers are exceptionally well presented, and it dawns on you that you are talking to one of the world’s best human-like experts on the history of the Roman Empire. His knowledge far exceeds what you could expect from any human individual. You also notice that he responds emotionally to your questions. He senses the tone of your voice and can analyse your facial expressions. If he senses that you have not fully understood an explanation, he will slow down and ask which part of his answer you are having difficulty with. Slowly you begin to develop an empathic bond with this human-like Avatar, and you realize that your interest in and comprehension of the history of the Roman Empire have advanced significantly beyond what the one-way presentation of a TV show could offer.

Smart Speakers at the forefront of Avatar Services

Today’s conversational support services and assistants are voice-activated. Based on natural language processing and speech recognition, these so-called ‘bots’ provide voice and text answers to questions submitted via smart speakers or smartphones. The voice answers can be modulated to add a touch of empathy that matches the user’s current emotional disposition. What started years ago as ‘Siri’ on the iPhone has opened the door to Amazon Alexa and Google Home. In October 2018, Amazon patented a technology that it says would allow its AI assistant to analyse a user’s voice and infer everything from their health status to their likely nation of origin. With this technology applied, Alexa could hear that a user coughs between words and offer to order flu medicine for them. So far, voice assistants and smart speakers have proven to be popular ways to stream music, get weather information, dim the lights or operate home appliances. But to be revolutionary, they will need to find a greater calling: a new, breakout application. While people are certainly enthusiastic about the new technology, it is not exactly life-changing yet.

Amazon is the smart speaker leader for now, though it is somewhat limited by not having its own smartphone product. The company is making up for that deficit by equipping other household devices with voice-response capabilities. It recently unveiled a low-cost Alexa-enabled chip that can make ‘dumb’ devices smart. As the holiday shopping season approaches, voice-powered smart speakers are again expected to be big sellers, adding to the approximately one-quarter to one-third of the U.S. population that already owns a smart speaker. Voice interfaces have been adopted faster than nearly any other technology in history. As of May 2018, 62 percent of U.S. smart speaker owners had an Amazon Alexa device, while 27 percent had a Google Home. These statistics favour Amazon because they include devices purchased in earlier years. But Google is rapidly gaining ground, tripling its growth rate in the first half of 2018. Research firm Canalys estimates that smart speakers will reach a global installed base of 100 million in 2018, which is 2.5 times the 2017 figure. By 2020, the firm expects the smart speaker installed base to more than double again to 225 million and to continue rising to more than 300 million by 2022. That would amount to roughly eightfold growth over five years.
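The growth figures quoted above can be cross-checked with a few lines of arithmetic. The following is a minimal sketch in Python, not part of the Canalys estimate itself. It assumes the 2017 installed base, which the text does not state directly, can be derived from the quoted 2.5-times growth into 2018. The names `installed_base`, `growth_multiple` and `annualized_rate` are hypothetical and chosen for illustration only.

```python
# Installed-base figures (millions of units) as quoted in the text.
installed_base = {2018: 100, 2020: 225, 2022: 300}

# Assumption: the 2017 base is not stated directly; it is derived here
# from the quoted "2.5 times" growth between 2017 and 2018.
growth_2017_to_2018 = 2.5
installed_base[2017] = installed_base[2018] / growth_2017_to_2018


def growth_multiple(start_year: int, end_year: int) -> float:
    """Return how many times larger the base is in end_year than in start_year."""
    return installed_base[end_year] / installed_base[start_year]


def annualized_rate(start_year: int, end_year: int) -> float:
    """Return the compound annual growth rate implied by the two endpoints."""
    years = end_year - start_year
    return growth_multiple(start_year, end_year) ** (1 / years) - 1


if __name__ == "__main__":
    print(f"Implied 2017 base: {installed_base[2017]:.0f} million")
    print(f"2018 to 2020 multiple: {growth_multiple(2018, 2020):.2f}x")
    print(f"2017 to 2022 multiple: {growth_multiple(2017, 2022):.1f}x")
    print(f"2017 to 2022 annualized: {annualized_rate(2017, 2022):.0%}")
```

Under these assumptions the implied 2017 base is 40 million units, and the multiple from 2017 to 2022 is 7.5. Since the 2022 figure is given as "more than 300 million", that is consistent with the "about 8 times" stated in the text and corresponds to compound growth of roughly 50 percent a year.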

Technology Breakthroughs required for future Avatar Assistants

Voice assistants are good at answering one-off questions: “What’s the capital of North Dakota?” or, “What time is our dinner appointment tonight?” But their ability to follow a line of thought or figure out what a pronoun is referring to is limited. Today’s voice assistants are capable of three or four back-and-forth interactions at most, which bars them from having a true conversation, though they are improving incrementally as machine learning refines their responses. Smart speakers are making people more comfortable talking to their devices. However, the future of voice will probably not be limited to speakers alone. The major speaker makers have all added screens to their assistants. They have a ‘foot in the door’ to take the next step as a data-driven Singularity becomes reality. To get there, two technological breakthroughs are required:

- Semantic Comprehension of textual data

- Common Sense Reasoning of textual data

With these technologies in place, passing the Turing Test is easily accomplished. However, one can go a step further by expanding the text-based Turing Test to the Avatar level. Singularity describes the state in which machine intelligence matches human intelligence across all levels of intelligence: cognitive, emotional and social. The Avatar appears as a screen-based simulation of a fictional Human who is able to sense and monitor the emotions, the physical well-being and the intellectual capacity of its human counterpart. By interacting with each other, both the Human and the Avatar learn to generate and comprehend knowledge. While the knowledge capacity of the Avatar is practically unlimited, the Human’s bodily awareness far exceeds the capacity of the Avatar’s sensors to record the Human’s physical and emotional state. Knowledge is the combination of acquired data and information, to which expert opinion, skills and experience are added. Learning is the way Humans absorb and generate knowledge. The same holds true for AI-Machines. However, today’s AI-Machines perform this task far less efficiently than a four-year-old child does. Progress in neuroscience and new mathematical neural models, combined with the ever-increasing volume of available data, will eventually correct this disadvantage, making it possible to recreate within days what took millions of years of human evolutionary development.

A few years ago, machine translation of text from one language to another produced poor results. Today you can open this essay with Google and choose from about 40 languages to get an instantaneous translation. The human touch is still required to correct a few misinterpretations or issues of style, but all in all about 95% of the text is translated correctly. The quality of the translation will improve as the machine continuously learns from previous translations. The same process of improvement will occur with the development of Avatars.

Why we need a charter for Avatar-interaction

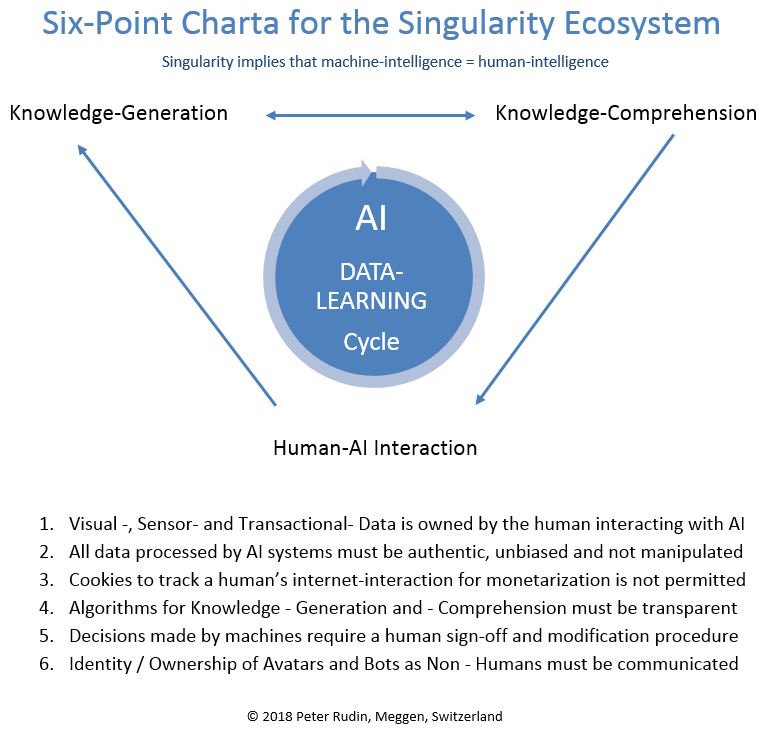

Voice assistants are potential privacy nightmares as they monitor more and more elements of our daily lives. This will increase as AI gains access to more and more data, generating not just knowledge but comprehension of knowledge as well. Humanity is on the verge of creating an artificial creature which is in many ways superior to humans. As the Singularity approaches, a Charter is needed to augment and balance the unique capabilities of Humans and AI-Machines in the form of an Ecosystem and to protect Humans from the potential danger of annihilation:

Discussion

Numerous activities and initiatives are in progress to regulate the potential problems caused by AI: the Toronto Declaration, AI Now, the Partnership on AI and the Declaration on Ethics and Data Protection in Artificial Intelligence by the ICDPPC, to name just a few. In May 2018 the EU General Data Protection Regulation (GDPR) went into effect. While these activities are focused on advancing human rights vis-à-vis the potential dangers of AI, they do not really tackle the problem that three tech giants control the market for AI services and products: Google with a 70-90% market share in search, Amazon with a 40-50% market share in consumer product distribution and associated services, and Facebook with 60-70% in social media. Government market regulations to prevent monopolies have failed. All these companies reinvest their profits in AI technology, competing for the best talent with high financial incentives to further strengthen their lead in AI services and products. The value Avatars can bring to the market once Singularity is reached is enormous. The proposed Six-Point Charter is an attempt to democratize AI through a broader distribution of the value generated and to curtail the monopolistic business model of ‘Free Services in exchange for Personal Data’.

Conclusion

The Singularity Ecosystem is not a self-fulfilling prophecy; it must support the survival of the human species. Control and supervision of this process must remain in human hands. As AI-Machines interact with Humans on an emotional level, creating empathy between the Machine and the Human, the intensity of this relationship has two potential outcomes. On the one hand, the value of the human personality grows through better personal insight and improved consciousness. On the other hand, the human personality weakens, slowly losing its assets of creativity and critical thinking, potentially turning Humans into ‘Slaves’ of a few technology barons. Singularity is still years away, but a Charter is required now to set the stage for future prosperity and peace within our western society.