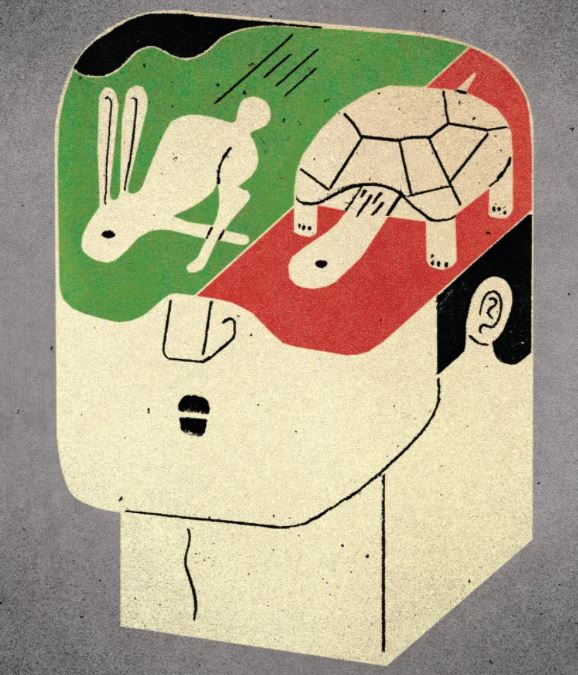

Two Brains ‘Running’ (picture credit: nytimes.com)

Introduction

Ten years after its first publication, Daniel Kahneman’s book ‘Thinking, Fast and Slow’ continues to top Amazon’s bestseller list in the category ‘Strategic Management’. Its phenomenal success can be attributed to the way it makes readers aware of two modes of human thinking, one fast and intuitive, the other slow and deliberate. Drawing on decades of scientific research, the book also offers many insights into human behaviour under various conditions. The AI community’s growing interest in neuroscience, which links neural activity in the brain with decision-making behaviour, puts Kahneman’s theory in a new light, especially as Artificial Neural Networks (ANNs) and Machine Learning (ML) continue to struggle with causality and common-sense reasoning.

Daniel Kahneman’s theory

According to Daniel Kahneman’s theory, human decisions are supported and guided by the cooperation of two different kinds of mental abilities. The first thinking pattern, defined as ‘system 1’, provides tools for intuitive, imprecise, fast, and often unconscious decisions (“thinking fast”). The second, defined as ‘system 2’, handles more complex situations where logical and rational thinking is needed to reach a decision (“thinking slow”). System 1 is guided mainly by intuition rather than deliberation and gives fast answers to fairly simple questions. Such answers are sometimes wrong, mainly because of unconscious bias or because they rely on heuristics or other shortcuts such as emotional impulses. However, system 1 can build mental models of the world that, although inaccurate and imprecise, fill knowledge gaps through causal inference and allow us to respond reasonably well to the many stimuli of everyday life. Each of us makes hundreds of decisions every day without being consciously aware of doing so, and by some estimates system 1 handles about 85% of our decision-making.

When a problem is too complex for system 1, system 2 is engaged, drawing on additional cognitive and computational resources to apply sophisticated logical reasoning. Although system 2 seems capable of solving harder problems than system 1, it remains linked to system 1 and its capability for causal reasoning: the causal skills of system 1 support the more complex and accurate reasoning of system 2 on cognitively more difficult problems. Thanks to this capacity to switch between the two systems, humans can reason at various levels of abstraction, adapt to new environments, and generalize from specific experiences, reusing their skills on other problems as they learn from experience how to integrate both mental models for better decision-making.
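The switching between the two systems can be illustrated with a toy controller. This is a minimal sketch under loudly stated assumptions, not a model taken from Kahneman’s book: the class `DualProcessSolver`, the confidence threshold of 0.8 and the confidence gain of 0.5 per repetition are all hypothetical. System 1 is modelled as fast recall from an associative memory that carries a confidence score; whenever recall is missing or not confident enough, the controller falls back to a slow, exact “system 2” computation and strengthens the stored association, so that practice gradually shifts the work to system 1.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class DualProcessSolver:
    """Toy fast/slow controller (hypothetical illustration, not Kahneman's model)."""
    slow_solver: Callable[[int], int]   # "system 2": exact but costly computation
    threshold: float = 0.8              # assumed minimum confidence to trust system 1
    step: float = 0.5                   # assumed confidence gain per deliberate repetition
    memory: Dict[int, Tuple[int, float]] = field(default_factory=dict)  # "system 1" associations
    log: List[str] = field(default_factory=list)  # which system produced each answer

    def fast(self, problem: int) -> Optional[Tuple[int, float]]:
        # System 1: instant recall of a learned answer together with its confidence
        return self.memory.get(problem)

    def solve(self, problem: int) -> int:
        recalled = self.fast(problem)
        if recalled is not None and recalled[1] >= self.threshold:
            self.log.append("system1")
            return recalled[0]
        # System 2: deliberate computation, then strengthen the association for next time
        answer = self.slow_solver(problem)
        confidence = recalled[1] if recalled is not None else 0.0
        self.memory[problem] = (answer, min(1.0, confidence + self.step))
        self.log.append("system2")
        return answer


def sum_of_squares(n: int) -> int:
    # Stand-in for an effortful calculation
    return sum(i * i for i in range(n + 1))


solver = DualProcessSolver(slow_solver=sum_of_squares)
answers = [solver.solve(10) for _ in range(3)]
print(answers, solver.log)  # [385, 385, 385] ['system2', 'system2', 'system1']
```

Lowering `threshold` lets system 1 answer sooner at the price of relying on weakly learned associations, which mirrors the trade-off between speed and error-proneness described above.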

Adding Neuroscience to the equation

In chapter 4 of the book ‘Bursting the Big Data Bubble’, published in 2014, James Howard, Professor at the Department of Business and Management at University of Maryland University College, explains why intuition, heuristics and emotional impulses play important roles in decision-making. Throughout evolution, the human brain has adapted to dealing with different types of decision situations. Emotions trigger the release of chemicals that stimulate neural circuits associated with potential gain or loss avoidance. Unmanaged, these emotions take the form of impulses that make it more likely that our cognitive system is bypassed. In emergencies this may be the best course of action. For example, when speed of response is important, the amygdala may send a message to the cognitive system that causes an automatic reaction to facilitate survival or the avoidance of a threat.

Such emotional responses raise the question of how the brain coordinates the fast thinking of Kahneman’s system 1 with the slow thinking of system 2. Neuroscientific research shows that connections between neurons and their associated memories can be strengthened by deliberate learning. As a consequence, when system 1 takes the lead in decision-making, the probability of a good decision increases if the cognitive abilities of system 2 are engaged as well. In a recent study, reported by Neuroscience News as “How we make complex decisions”, MIT neuroscientists explored how the brain reasons about the probable causes of failure after going through a hierarchy of decisions. They discovered that the brain performs two computations using a distributed network of areas in the frontal cortex. First, it computes confidence in the outcome of each decision to figure out the most likely cause of a failure; second, when the cause is not easy to discern, it makes additional attempts to gain more confidence.
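The two computations attributed to the MIT study, scoring confidence in each decision to locate the most likely cause of a failure and trying again when the cause is ambiguous, can be caricatured in a few lines. This is a hedged toy sketch, not the model used by the MIT team: the function `attribute_failure`, the independence assumption between decisions and the ambiguity `margin` of 0.1 are all hypothetical. Because a wrong decision always produces a failure in this toy setting, the probability that decision i was wrong given a failure is simply (1 − cᵢ) divided by the probability of failure.

```python
from typing import List


def attribute_failure(confidences: List[float], margin: float = 0.1) -> str:
    """Toy credit assignment after a failed chain of decisions (hypothetical illustration).

    confidences[i] is the assumed probability that decision i was correct. Decisions are
    treated as independent, and the overall outcome fails if at least one decision is wrong.
    """
    if len(confidences) < 2:
        raise ValueError("need at least two decisions to compare")
    p_all_correct = 1.0
    for c in confidences:
        p_all_correct *= c
    p_failure = 1.0 - p_all_correct
    if p_failure <= 0.0:
        raise ValueError("a failure is impossible if every decision is certain")
    # P(decision i was wrong | failure) = (1 - c_i) / P(failure),
    # because a wrong decision i always produces a failure.
    blame = [(1.0 - c) / p_failure for c in confidences]
    ranked = sorted(range(len(blame)), key=lambda i: blame[i], reverse=True)
    top, runner_up = ranked[0], ranked[1]
    if blame[top] - blame[runner_up] < margin:
        return "retry"  # cause not discernible: gather more evidence before revising
    return f"revise decision {top}"


print(attribute_failure([0.95, 0.6]))  # revise decision 1
print(attribute_failure([0.8, 0.8]))   # retry
```

In the first call the low-confidence second decision is the clear suspect; in the second call both decisions are equally suspect, so the sketch asks for another attempt instead of guessing.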
“Creating a hierarchy in one’s mind and navigating that hierarchy while reasoning about outcomes is one of the exciting frontiers of cognitive neuroscience,” says Mehrdad Jazayeri, a member of MIT’s McGovern Institute for Brain Research and senior author of the study. The MIT team devised a behavioral task that allowed them to study how the brain processes information at multiple timescales to make decisions, and used this experimental design to probe the computational principles and neural mechanisms that support hierarchical reasoning. Theory and behavioral experiments in humans suggest that reasoning about the potential causes of errors depends to a large degree on the brain’s ability to measure its confidence in each step of the process. This two-level reasoning supports Kahneman’s behavioral theory that system 1 and system 2 complement each other in decision-making. Hence, a thought model based on duality, which emerged over the course of human evolution (for example, differentiating good from bad and true from false, or distinguishing between body and mind), is key to developing future AI systems for decision-making and problem-solving.

The concept of Thinking, Fast and Slow as foundation for a new generation of AI-Systems

Taking advantage of the exponential increase in computing performance and the corresponding drop in cost, one part of the AI community is focused on building ever larger ANNs in support of new ML algorithms. Experience with GPT-3, one of the largest ANNs with 175 billion parameters, shows that it does not produce consistent results on reasoning tasks posed in natural language. Another part of the AI community (arXiv:2010.06002) is attempting to overcome these limitations by moving from the ‘one-system’ approach of Deep Machine Learning (DML) applications to a ‘two-system’ approach that adds symbolic and logic-based AI techniques (also referred to as classical or symbolic AI) for handling abstraction, causal analysis, introspection and various forms of implicit and explicit knowledge in decision-making tasks. Symbolic AI rests on the proposition that human thought uses symbols and that computers can be made to think by processing symbols.

A hybrid concept combining DML with symbolic AI suggests an intriguing parallel with the two mindsets represented by Kahneman’s system 1 and system 2. Analogous to system 1, DML is used to build models from sensory data: perception tasks such as seeing and reading are addressed with DML techniques, for example in image or voice recognition. However, this approach lacks causality, a requirement for common-sense reasoning. The ability of system 2 to solve complex problems calls for symbolic AI techniques, which employ and optimize explicit knowledge, symbols and high-level concepts. The quality of DML applications is measured by the degree to which they achieve the desired result, e.g., accuracy, precision or recall in image recognition. In contrast, the quality of a symbolic AI system is measured by the correctness of the conclusions it draws from pre-programmed rules.
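A hybrid pipeline of this kind can be sketched in miniature: a perception step turns scores into discrete symbols, and a rule engine draws conclusions from them by forward chaining. This is an illustrative sketch under explicit assumptions. The neural network is replaced by a hard-coded, hypothetical score table (`FAKE_SCORES`), and the labels, the 0.5 threshold and the two rules in `RULES` are invented for the example; a real system would compute the scores with a trained model.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical stand-in for a trained neural network ("system 1"): it maps a raw
# input to class scores. A real system would compute these scores from pixels.
FAKE_SCORES: Dict[str, List[Tuple[str, float]]] = {
    "image_001": [("cat", 0.92), ("on_sofa", 0.81), ("dog", 0.07)],
}

# Explicit, hand-written knowledge ("system 2"): (premises, conclusion) pairs.
RULES: List[Tuple[Set[str], str]] = [
    ({"cat"}, "animal"),
    ({"animal", "on_sofa"}, "pet_indoors"),
]


def perceive(raw_input: str, threshold: float = 0.5) -> Set[str]:
    """Turn sub-symbolic scores into discrete symbols (facts)."""
    return {label for label, score in FAKE_SCORES.get(raw_input, []) if score >= threshold}


def forward_chain(facts: Set[str], rules: List[Tuple[Set[str], str]]) -> Set[str]:
    """Apply the rules repeatedly until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


facts = perceive("image_001")
print(sorted(forward_chain(facts, RULES)))  # ['animal', 'cat', 'on_sofa', 'pet_indoors']
```

The two halves are judged differently, as described above: the perception step by accuracy, precision or recall against labelled data, and the rule step by whether each derived conclusion follows correctly from the rules.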

A hybrid approach for the advancement of AI for improved decision-making

The combined ‘system 1 – system 2’ approach, which switches dynamically between the two mindsets, implies a hybrid model for AI-supported decision-making. Humans live in societies, and their individual decisions are linked to their models of reality, which include the world, other agents, and themselves. These models are not perfect, but they are used to make informed decisions and provide a test bed for evaluating the consequences of alternative decisions. However, they are not based on exact knowledge but rather on approximate information about the world and on beliefs about what others know and believe. Hence, following a hybrid concept, AI systems should include several independent components that are triggered when needed. This implies that the best structure for this kind of AI system is a multi-agent architecture in which each agent focuses on specific skills and problems, acts asynchronously and contributes to building models of the problem to be solved. According to Kahneman’s theory, system 1 and system 2 support each other in specific decision-making scenarios. Monitoring and recording these interactions provides the experience needed to optimize future decision-making. This is consistent with recent neurobiological findings on human behaviour and the related brain activity, in which multiple brain regions involved in decision-making challenge and support each other to reach the best answer to a problem.
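One way to picture such a multi-agent architecture is a shared “blackboard” to which independent agents post their contributions. The blackboard pattern is swapped in here as one of several possible coordination schemes, chosen for brevity; the agent names, skills and simulated delays below are hypothetical. Only the agents whose skill the problem needs are triggered, they run concurrently with `asyncio`, and the order in which they finish is logged as a record for tuning later decisions.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    name: str
    skill: str                  # the kind of sub-problem this agent handles
    delay: float                # simulated thinking time in seconds
    act: Callable[[int], int]   # the agent's contribution to the shared model


@dataclass
class Blackboard:
    contributions: Dict[str, int] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # monitoring log, kept for later tuning


async def run_agent(agent: Agent, problem: int, board: Blackboard) -> None:
    await asyncio.sleep(agent.delay)  # each agent works independently of the others
    board.contributions[agent.name] = agent.act(problem)
    board.history.append(agent.name)  # record the order in which agents finished


async def solve(problem: int, needed: List[str], agents: List[Agent]) -> Blackboard:
    board = Blackboard()
    selected = [a for a in agents if a.skill in needed]  # trigger only the agents needed
    await asyncio.gather(*(run_agent(a, problem, board) for a in selected))
    return board


agents = [
    Agent("estimator", "fast", 0.01, lambda x: round(x, -1) ** 2),  # quick, rough estimate of x squared
    Agent("calculator", "slow", 0.05, lambda x: x * x),             # exact x squared, but slower
    Agent("translator", "language", 0.02, lambda x: x),             # not needed for this problem
]
board = asyncio.run(solve(42, ["fast", "slow"], agents))
print(board.contributions)  # {'estimator': 1600, 'calculator': 1764}
print(board.history)        # ['estimator', 'calculator']
```

The fast agent delivers a rough answer first and the slow agent refines it later; a coordinator could act on the early estimate when time is short and rely on the exact value otherwise, with `history` providing the record of how each episode unfolded.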

Conclusion

Moving from data-driven to AI-driven processes represents the challenge businesses must meet to remain competitive and profitable. Embracing AI in our workflows enables better processing of structured data and allows humans to contribute in complementary ways. Hybrid systems that combine a system 1 and a system 2 mindset with massive cognitive bandwidth and data-processing power, complemented by humans’ unique resources of intuition and self-reflection, call for a decision-making architecture that can adapt instantly to unexpected problems. Hence, the interface between humans and machines becomes the decisive factor for successful problem-solving and decision-making. Implementing such a strategy in a business context requires a mindset of emotional and networked intelligence throughout the organisation.