Picture Credit: Stanford University

Introduction

The term ‘neuromorphic computing’ has a long history. It dates back at least to the 1980s, when the legendary Caltech researcher Carver Mead proposed designing integrated circuits (ICs) that mimic the organization of living neuron cells. However, the one key factor that disqualifies a classical ‘von Neumann’ computer from mimicking the human brain is its deterministic architecture. The whole point of a digital computer program is to determine how the machine must function, given a specific set of inputs. The human brain, by contrast, is not a deterministic system. We know that biological neurons are the principal components of human memory, though we do not really know how experiences and sensory inputs map themselves onto these neurons. Scientists have observed that the process by which neurons ‘fire’ (emit a brief spike in electrical potential) is probabilistic. Even given the same inputs (assuming they could be re-created), the probability that any one neuron will fire is less than 100 percent. Hence, the firing of biological neurons is non-deterministic.

Neither neurologists nor AI researchers are entirely certain why a human brain can so easily learn to recognize letters when they appear in so many different typefaces, or to recognize words when people’s voices are so distinct. Indeed, intellect (the phenomenon arising from the mind’s ability to reason) could be interpreted as a product of the brain adapting to the information it senses from the world around it, by literally building new neural connections to accommodate that information. Neuromorphic computing provides new insights into how the human brain functions. It also sheds light on why the brain is so efficient at performing complex cognitive tasks, in terms of both energy consumption and size.

Deep learning in artificial neural networks (ANNs)

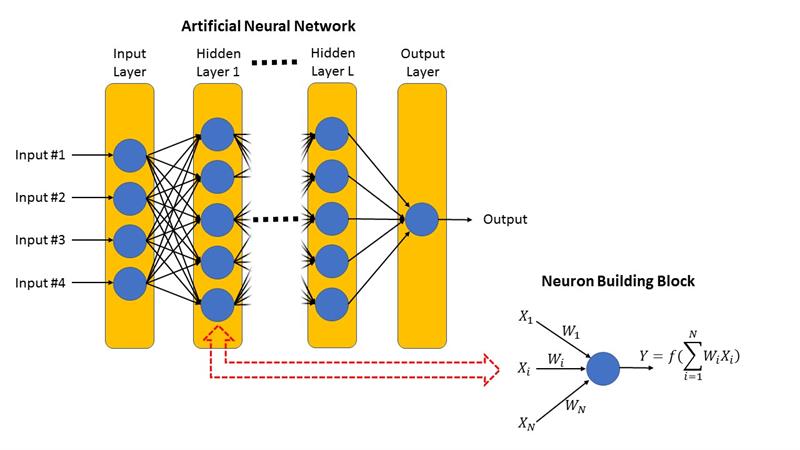

Carver Mead had envisioned implementing the structure of a biological neuron in silicon. Developers of today’s deep-learning artificial neural networks, however, have abandoned that idea for a much simpler approach. Artificial neural networks are, in effect, software simulations running on traditional server CPUs, with graphics processing units (GPUs) added for acceleration. Data scientists use many different algorithms to train neural networks until they provide the correct answer for a given input. The network itself is simply a graphical representation of a large linear algebra computation, as depicted in the figure above.
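A short sketch can make the ‘large linear algebra computation’ concrete. The plain-Python code below (no GPU) computes one fully connected layer as a matrix-vector product plus a bias, followed by a non-linear function, and then chains two such layers. The layer sizes, the hand-picked weights and the use of ReLU (clipping negative values to zero) are illustrative assumptions and are not taken from the figure.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(x: float) -> float:
    """A common non-linear function: clip negative values to zero."""
    return max(0.0, x)


def dense_layer(weights: Matrix, bias: Vector, inputs: Vector,
                activation: Callable[[float], float] = relu) -> Vector:
    """Compute activation(W x + b) for one fully connected layer.

    weights[j][i] is the weight on the connection from input i to node j.
    """
    outputs = []
    for row, b in zip(weights, bias):
        # Weighted sum of all inputs arriving at this node, plus its bias
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z))
    return outputs


def forward(layers: List[Tuple[Matrix, Vector]], inputs: Vector) -> Vector:
    """Pass the inputs through a cascade of (weights, bias) layers."""
    activations = inputs
    for weights, bias in layers:
        activations = dense_layer(weights, bias, activations)
    return activations


# Hypothetical 3-input -> 2-node -> 1-output network with hand-picked weights
network = [
    ([[0.5, -1.0, 0.25], [1.0, 0.5, -0.5]], [0.0, 0.1]),
    ([[1.0, -2.0]], [0.5]),
]
print(forward(network, [1.0, 2.0, 4.0]))  # [0.3]
```

Each layer is one matrix-vector multiplication. Deep-learning frameworks hand exactly this kind of multiply-add arithmetic to GPUs, which execute many such operations in parallel.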

Artificial neural network nodes (the neuron building blocks) carry out arithmetic calculations. Each node multiplies the numbers arriving on its inputs by adjustable ‘weights’ and adds up the results. The resulting sum may then be passed through a non-linear function, such as normalization, clipping to a minimum or maximum value, or whatever the network designer chooses. Only then is the resulting number sent on to the next layer of the network. To train the network, trainers must assemble huge amounts of input data and label each item. Then, one by one, they feed the input data into the simulation defined by the network, together with the labels that classify that data. The software compares the output of the network with the correct answer and adjusts the weights of the final stage (the output layer) to bring the output closer to the correct answer. It then moves back to the previous layer and repeats this process until all the weights in the network have been adjusted to reduce the error. This process is called ‘backpropagation’. Obviously, this procedure is time- and compute-intensive and highly dependent on the quality of the data provided. As has been reported many times, biased or erroneous data can lead to prediction errors. Nevertheless, as a rule, the more data (‘big data’) is available to train the system, the better the network becomes at performing a given task.
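The training procedure just described can be sketched in a few dozen lines. This minimal illustration makes several assumptions: a tiny 2-2-1 network with sigmoid activations, a squared-error loss, a learning rate of 0.5, and a hypothetical labelled toy dataset (the logical OR function). Real networks use far larger models and datasets, as well as optimized libraries. As in the text, the error is computed at the output layer first and then propagated back to the previous layer.

```python
import math
from typing import List, Tuple

# Hypothetical labelled toy dataset: the logical OR of two inputs
DATA: List[Tuple[List[float], float]] = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 1.0),
]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(epochs: int = 2000, lr: float = 0.5) -> Tuple[float, float]:
    """Train a 2-2-1 sigmoid network with backpropagation (one example at a time).

    Returns (mean squared error before training, mean squared error after).
    """
    # Fixed, arbitrary starting weights so that every run is reproducible
    w_hidden = [[0.1, -0.2], [0.3, 0.4]]  # w_hidden[j][i]: input i -> hidden j
    b_hidden = [0.0, 0.0]
    w_out = [0.2, -0.1]                    # hidden j -> output node
    b_out = 0.0

    def forward(x: List[float]) -> Tuple[List[float], float]:
        h = [sigmoid(sum(w * xi for w, xi in zip(w_hidden[j], x)) + b_hidden[j])
             for j in range(2)]
        y = sigmoid(sum(w * hj for w, hj in zip(w_out, h)) + b_out)
        return h, y

    def mse() -> float:
        return sum((forward(x)[1] - t) ** 2 for x, t in DATA) / len(DATA)

    before = mse()
    for _ in range(epochs):
        for x, target in DATA:  # feed the labelled examples one by one
            h, y = forward(x)
            # Output layer: error signal dE/dz (factor 2 of the squared error folded into lr)
            delta_out = (y - target) * y * (1.0 - y)
            # Previous (hidden) layer: send the error back through the output weights
            delta_hidden = [delta_out * w_out[j] * h[j] * (1.0 - h[j]) for j in range(2)]
            # Gradient-descent step: adjust the output layer, then the hidden layer
            for j in range(2):
                w_out[j] -= lr * delta_out * h[j]
            b_out -= lr * delta_out
            for j in range(2):
                for i in range(2):
                    w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
                b_hidden[j] -= lr * delta_hidden[j]
    return before, mse()


before, after = train()
print(f"MSE before: {before:.3f}, after: {after:.3f}")
```

Because `train()` returns the error both before and after training, the effect of backpropagation can be checked directly: the second value should be much smaller than the first. Even this toy task takes thousands of weight updates, which hints at why training real networks is so compute-intensive.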

Learning of neuromorphic systems with spiking neural networks (SNNs)

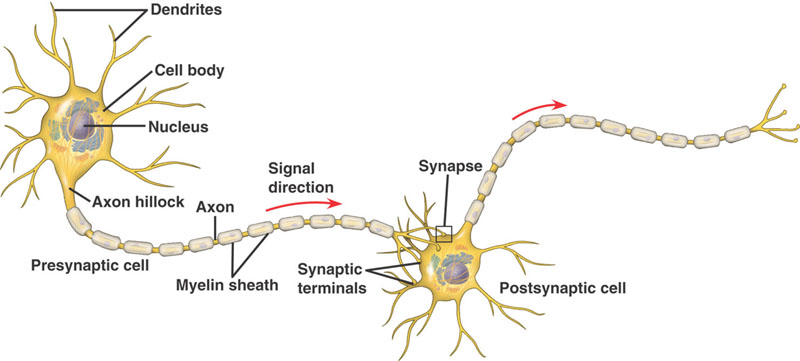

Engineering a neuromorphic device involves developing components whose functions are analogous to parts of the brain. Living nerve cells of the human brain have four major functional components, as depicted in the figure above:

- Synapses: Electrochemical pulses enter the cell through tiny interface points called synapses.

- Dendrites: The synapses are scattered over the surfaces of tree-root-like fibres called dendrites.

- Cell Body: The dendrites reach out into the surrounding nerve tissue, gather pulses from their synapses, and conduct them to the heart of the neuron, called the cell body.

- Axon: A tree-like fibre that conducts output pulses from the cell body into the nervous tissue, ending at synapses on other cells’ dendrites.

Neuromorphic devices emulate these functional components of the human brain. Perhaps the most fundamental difference between artificial neural networks and the biological brain lies in how information is transmitted. The nodes in artificial neural networks communicate by continuously sending numbers across the network to perform image recognition or other cognitive tasks. Neuromorphic nodes, in contrast, send pulses, or sometimes strings of pulses, whose timing and frequency carry the information; hence the term ‘Spiking Neural Networks’ (SNNs). In the biological brain, each neuron is connected to a variety of inputs. Some inputs excite the neuron while others inhibit it, much like the positive and negative weights in an artificial neural network. In an SNN, however, the neuron spikes only when its internal state, described by a variable (or perhaps by a function), reaches a certain threshold. The purpose of an SNN model is to draw inferences from these spikes. For example, it may determine whether an image or a data pattern triggers a memory cell.
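The threshold behaviour described above is often modelled as a ‘leaky integrate-and-fire’ (LIF) neuron. The text does not name a specific model, so the sketch below assumes LIF in discrete time steps. Each incoming spike adds its synaptic weight to a ‘membrane potential’, and the potential decays (leaks) a little at every step. When the potential reaches a threshold, the neuron emits a spike and resets. Positive weights act as excitatory inputs and negative weights as inhibitory ones. The threshold, leak factor, weights and spike trains are all hypothetical values.

```python
from typing import List


def weighted_input(spike_trains: List[List[int]], weights: List[float]) -> List[float]:
    """Per time step, sum the incoming spikes (0 or 1) times their synaptic weights."""
    steps = len(spike_trains[0])
    return [sum(w * train[t] for w, train in zip(weights, spike_trains))
            for t in range(steps)]


def lif_neuron(current: List[float], threshold: float = 1.0,
               leak: float = 0.9, reset: float = 0.0) -> List[int]:
    """Discrete-time leaky integrate-and-fire neuron; returns its spike times."""
    potential = reset
    spikes: List[int] = []
    for t, i_t in enumerate(current):
        potential = leak * potential + i_t  # decay a little, then integrate the input
        if potential >= threshold:          # threshold reached: fire ...
            spikes.append(t)
            potential = reset               # ... and reset the state variable
    return spikes


# Hypothetical presynaptic spike trains over six time steps
exc_a = [1, 0, 1, 0, 1, 0]
exc_b = [0, 1, 1, 0, 1, 1]
inh = [0, 0, 0, 0, 1, 1]
trains = [exc_a, exc_b, inh]

print(lif_neuron(weighted_input(trains, [0.6, 0.6, -0.8])))  # [1, 2]
print(lif_neuron(weighted_input(trains, [0.6, 0.6, 0.0])))   # [1, 2, 4]
```

While the inhibitory input is active, the neuron stays silent at step 4. Setting that input’s weight to zero lets the neuron fire there. The neuron’s output is not a number but a list of spike times, which is the form in which an SNN passes information on.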

The topologies of ANNs and SNNs differ significantly. Conventional deep-learning networks comprise strictly cascaded layers of computing nodes, in which the outputs from one layer can connect only to selected inputs of the next layer. SNN topologies have no such restriction. As in real nervous tissue, a neuromorphic node may receive inputs from any other node, and its axon may extend to any other node.
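This topological difference can be illustrated with a plain edge list. The sketch below uses hypothetical node names and labels each node with a layer index. It then reports every connection that breaks the strict ‘layer k feeds only layer k + 1’ rule of a conventional feed-forward network. The SNN-style graph reuses the same nodes but adds a skip connection, a lateral connection within a layer and a feedback loop, all of which a neuromorphic topology permits. Layer labels are kept for the SNN graph only to show which connections the layered rule would forbid.

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str]  # (source node, destination node)


def layer_violations(edges: List[Edge], layer_of: Dict[str, int]) -> List[Edge]:
    """Return every edge that does not go from layer k to layer k + 1."""
    return [(src, dst) for src, dst in edges if layer_of[dst] != layer_of[src] + 1]


# Hypothetical nodes: two inputs (layer 0), two hidden nodes (1), one output (2)
layers = {"x1": 0, "x2": 0, "h1": 1, "h2": 1, "y": 2}

# Conventional feed-forward ANN: strictly cascaded, each layer feeds the next only
ann_edges = [("x1", "h1"), ("x1", "h2"), ("x2", "h1"), ("x2", "h2"),
             ("h1", "y"), ("h2", "y")]

# SNN-style wiring on the same nodes: skip, lateral and feedback connections
snn_edges = ann_edges + [("x1", "y"), ("h1", "h2"), ("y", "h1")]

print(layer_violations(ann_edges, layers))  # []
print(layer_violations(snn_edges, layers))  # [('x1', 'y'), ('h1', 'h2'), ('y', 'h1')]
```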

The learning process of the human brain is not yet fully understood; however, research has identified two distinct aspects of learning. First, real nerve cells are able to reach out and establish new connections, in effect rewiring the biological network as they learn. They also have a wide variety of functions available, so learning can involve both changing connections and changing functions. Second, biological neural systems learn very quickly. Humans can learn to recognize a new face or a new abstract symbol after seeing only one or two instances. Convolutional deep-learning networks, in contrast, might require tens of thousands of training examples to master a new task.
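The text above does not name a specific learning rule for neuromorphic systems. One rule widely used in neuromorphic research is spike-timing-dependent plasticity (STDP), which adjusts connection strengths locally and without labels. Under STDP, a connection is strengthened when the input spike arrives shortly before the receiving neuron fires, and weakened when it arrives shortly after. The sketch below uses the common pair-based exponential form of this rule. The amplitudes, the 20 ms time constant and the weight bounds are illustrative assumptions, not values from any particular chip.

```python
import math
from typing import List


def stdp_delta(t_pre: float, t_post: float, a_plus: float = 0.05,
               a_minus: float = 0.055, tau: float = 20.0) -> float:
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # presynaptic spike came first: strengthen the connection
        return a_plus * math.exp(-dt / tau)
    if dt < 0:   # presynaptic spike came after the neuron fired: weaken it
        return -a_minus * math.exp(dt / tau)
    return 0.0


def apply_stdp(weight: float, pre_spikes: List[float], post_spikes: List[float],
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Sum the STDP changes over all spike pairs and keep the weight in bounds."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_delta(t_pre, t_post)
    return min(w_max, max(w_min, weight))


# Input fires 5 ms before the neuron (causal pairing): the weight grows
print(apply_stdp(0.5, pre_spikes=[10.0, 50.0], post_spikes=[15.0, 55.0]))
# Input fires 5 ms after the neuron (anti-causal pairing): the weight shrinks
print(apply_stdp(0.5, pre_spikes=[15.0, 55.0], post_spikes=[10.0, 50.0]))
```

Each update depends only on the timing of two spikes at one synapse, so the rule can run continuously on the device as data arrives. There is no separate labelled training phase.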

Neuromorphic Computing Applications

In the long term, human-level artificial cognitive intelligence will likely depend on a better understanding of information processing in the biological brain, built on neuromorphic concepts. Thanks to ongoing progress in neuromorphic research, application-specific systems are now reaching the market. They typically take the form of intelligent real-time sensors, where low power consumption and/or mobility offer significant advantages over ‘power-hungry’ traditional ‘von Neumann’ computer systems. Neuromorphic systems require energy only when a neuron’s spike signals a change in the observed domain. This can make them an order of magnitude more energy-efficient than conventional ANN systems. Smart sensors that exploit ongoing progress in sensor technology can provide local intelligence independent of cloud services. Event-driven, real-time neuromorphic processors are well suited for ‘always-on’ IoT devices and for edge-computing applications such as gesture recognition, face or object detection, location tracking and vision sensing. aiCTX, a spin-off from ETH Zurich partially financed with seed money from Baidu Ventures, is one of the first companies to offer neuromorphic system solutions for application areas such as:

- Autonomous Navigation: Processing 3D data in real time. The low latency of the neuromorphic processor makes it possible to exploit the advantages of fast solid-state Lidar (light detection and ranging) for event detection and control in autonomous vehicle navigation.

- Wearable Devices for Health Monitoring: Ultra-low-power (sub-mW) sensory processing and instantaneous detection of anomalies through continuous monitoring of body signals, such as ECG, EMG and EEG, from wearable devices.

- Industrial Monitoring: Neuromorphic technology enables ‘always-on’ vibration monitoring of industrial machines with several months of battery life, so that faults can be detected well in advance.
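The event-driven principle behind these applications is that work, and hence energy, is spent only when something changes. A simple ‘send-on-delta’ encoding illustrates the idea: a sensor reading produces an event only when it has moved by at least a threshold since the last event. Event-based vision sensors apply a similar change-detection principle in hardware. The threshold and the sensor trace below are hypothetical, and this plain-Python sketch says nothing about the circuits of any specific product.

```python
from typing import List, Tuple

Event = Tuple[int, int]  # (time step, polarity: +1 = increase, -1 = decrease)


def delta_events(samples: List[float], threshold: float) -> List[Event]:
    """Send-on-delta encoding: emit an event only when the signal has moved by
    at least `threshold` since the last event; otherwise produce nothing."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    events: List[Event] = []
    reference = samples[0]
    for t in range(1, len(samples)):
        value = samples[t]
        while value - reference >= threshold:   # signal rose by one step
            reference += threshold
            events.append((t, +1))
        while reference - value >= threshold:   # signal fell by one step
            reference -= threshold
            events.append((t, -1))
    return events


# Hypothetical sensor trace: 100 samples, flat except for one brief bump
trace = [0.0] * 40 + [0.2, 0.5, 0.8, 0.8, 0.5, 0.2] + [0.0] * 54
events = delta_events(trace, threshold=0.25)
print(len(trace), "samples ->", len(events), "events")  # 100 samples -> 6 events
print(events)
```

Here, only 6 of the 100 readings trigger any downstream processing, whereas a frame-based system would process every sample. How much energy this saves in practice depends on the hardware and on how often the observed signal actually changes.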

Another player in this rapidly growing market is BrainChip. The company has announced that it will mass-produce a neuromorphic chip, to be available sometime in 2019 at a price of between 10 and 15 USD per chip.

Conclusion

Neuromorphic systems offer two major promises. First, because they are pulse-driven, potentially asynchronous and highly parallel, they could be a gateway to an entirely new way of computing, combining high performance with very low energy consumption. Second, they could be the best vehicle to support unsupervised learning. This goal may prove necessary for key applications such as autonomous vehicle navigation in uncharted areas or natural-language comprehension. Neuromorphic chips aim to provide self-learning without requiring the large datasets that convolutional networks depend on. With neuromorphic chips, the system would learn along the way, much as humans do. This differs from the brute-force deep-learning procedure, which relies on interpreting large and varied sets of data to train a conventional computer to make predictions. In theory, such self-learning systems might lead to neuromorphic artificial agents that express cognitive behaviour. These agents would sense signals in real time and adapt to unexpected changes in uncontrolled environments while coping with uncertainty. A new generation of personal assistants might emerge, augmenting humans in learning tasks, a development with far-reaching consequences for our economic and social environment.
