Computational Neuroscience / Picture Credit: fairobserver.com

Introduction

While very efficient at processing vast amounts of data, current AI models lack the generalization abilities of human intelligence, which leaves them vulnerable to the wide range of unexpected situations an AI system may face. Unfortunately, current approaches to AI try to solve these problems by throwing more data and computing capacity at them. The obsession with creating bigger datasets and bigger neural networks has sidelined some of the important questions and areas of research in AI, including causality, reasoning, common sense, learning from few examples and other fundamental elements that today’s AI technology lacks. There is only so much to be achieved with statistics and pattern matching. AI systems might have solved 90 percent of human language problems, for example, but the last 10 percent, dealing with the implicit subtleties and hidden meanings of language, remains unsolved. While neural machine translation can be impressively effective and useful in many applications, its translations are still fundamentally unreliable without post-editing by knowledgeable humans. We need new models to advance AI and get out of an overhyped dead-end street.

‘Dumb’ Neurons as a limiting factor in modelling Deep Neural Networks (DNNs)

In the 1950s, the concept of a ‘dumb’ neuron began to dominate neuroscience: the neuron acted as a simple integrator, a point in a network that merely summed up its inputs. Extensions of the neuron, called dendrites, would receive thousands of signals from neighbouring neurons, some excitatory, some inhibitory. In the body of the neuron all these signals would be weighted and tallied, and if the total exceeded some threshold, the neuron fired a series of electrical pulses that stimulated adjacent neurons. At around the same time, researchers realized that a single neuron could also function as a logic gate, akin to those in digital circuits. A neuron was effectively an AND gate, firing only after receiving a sufficient number of inputs.

Still, this model of the neuron was limited. Not only were its guiding computational metaphors simplistic, but for decades, scientists lacked the experimental tools to record the signals from the various components of a single neuron. “Essentially the neuron was being collapsed into a point in space,” said Bartlett Mel, a computational neuroscientist at the University of Southern California. “It did not have any internal articulation of activity.” The model ignored the fact that the thousands of inputs flowing into a given neuron landed in different locations along its various dendrites. It ignored the idea that individual dendrites might function differently from one another. And it ignored the possibility that computations might be performed by other internal structures of the neuron.

The information-processing capabilities of the brain are often reported to reside in the trillions of connections that wire its neurons together. But over the past few decades, mounting research has quietly shifted some of the attention to individual neurons, which seem to shoulder much more computational potential than once seemed imaginable.
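To make the point-neuron picture concrete, here is a minimal Python sketch of a unit that weights its inputs, tallies them and fires when the total reaches a threshold. The function names, weights and threshold are illustrative assumptions, not values from the research described above.

```python
def point_neuron(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    # Assumed weights of 1.0 and a threshold of 2.0: both inputs must be
    # active before the tally is large enough for the unit to fire.
    return point_neuron([a, b], weights=[1.0, 1.0], threshold=2.0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b))
```

Note that the unit only ever sees the total: where an input arrives plays no role, which is exactly the simplification Mel criticizes.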

Discovering the computational power of Dendrites within Neurons

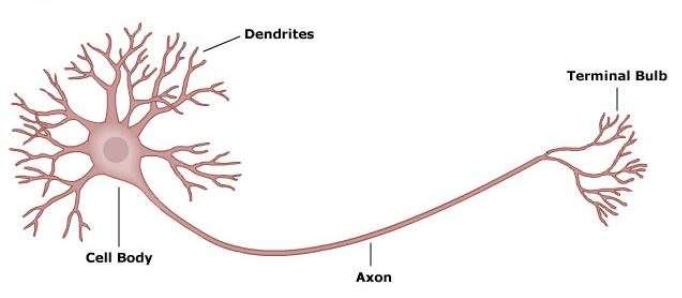

In the late 1980s, modelling work by the neuroscientist Christof Koch and others showed that single neurons did not express a single or uniform voltage signal. Instead, voltage signals decreased as they moved along the dendrites into the body of the neuron, and often contributed nothing to the neuron’s ultimate output. This compartmentalization of signals meant that separate dendrites could be processing information independently of one another. This was at odds with the point-neuron hypothesis, in which a neuron simply added everything up regardless of location. The following schema defines the components of a single-cell neuron:

Creating new neural models, Koch and other neuroscientists, including Gordon Shepherd at the Yale School of Medicine, demonstrated how the structure of dendrites could in principle allow neurons to act not as simple logic gates, but as complex, multi-unit processing systems. They simulated how dendritic trees could host numerous logic operations, through a series of complex hypothetical mechanisms. They found that dendrites had their own nonlinear input-output curves and had their own activation thresholds, distinct from those of the entire neuron. The dendrites would serve as nonlinear computing sub-units, collecting inputs and spitting out intermediate outputs. Those signals would be combined in the cell body of the neuron, which would then determine how the neuron would respond. Whether the activity at the dendritic level influenced the neuron’s firing and the activity of neighbouring neurons was still unclear. But regardless, that local processing might prepare or condition the system to respond differently to future inputs or help wire it in new ways, according to Shepherd. In theory, almost any imaginable computation might be performed by one neuron with enough dendrites, each capable of performing its own nonlinear operation.
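The idea of dendrites as nonlinear sub-units feeding the cell body can be sketched as a two-stage computation. The code below is a simplified illustration under stated assumptions: the sigmoid branch nonlinearity, the gain and both thresholds are hypothetical choices, not parameters from Koch's or Shepherd's models.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def branch_output(inputs, threshold=1.5, gain=10.0):
    """One dendritic branch: a steep nonlinearity applied to its local input sum."""
    return sigmoid(gain * (sum(inputs) - threshold))


def two_layer_neuron(branches, soma_threshold=0.5):
    """The cell body sums the branch outputs and fires if the total is large enough."""
    soma_drive = sum(branch_output(b) for b in branches)
    return 1 if soma_drive >= soma_threshold else 0


# The same two active inputs, placed either on one branch or on two branches.
clustered = two_layer_neuron([[1, 1], [0, 0]])
spread = two_layer_neuron([[1, 0], [1, 0]])
print(clustered, spread)  # prints "1 0"
```

In this sketch the total input is identical in both cases, so a point neuron could not tell them apart; the branch nonlinearity is what makes the location of the inputs matter.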

New research suggests that Dendrites could perform complex computations

New evidence, from the discovery of a new type of electrical signal in the upper layers of the human cortex, suggests that individual dendritic compartments can also perform computations that mathematical theorists had previously categorized as unsolvable by single-neuron systems. “I believe that we’re just scratching the surface of what these neurons are really doing,” said Albert Gidon, a postdoctoral fellow at Humboldt University of Berlin and the first author of the paper that presented these findings in the journal ‘Science’ this month. To figure out what the new kind of spiking might be doing, the scientists created a model to reflect the neurons’ behaviour. The model found that the dendrite spiked in response to either of two separate inputs, but failed to do so when those inputs were combined. This is equivalent to a nonlinear computation known as exclusive OR (XOR), which yields a binary output of 1 if one (but only one) of the inputs is 1, a result that had so far been considered out of reach for the ‘dumb-neuron’ concept.

These new results are likely to influence the future direction of machine learning and AI. Artificial neural networks rely on the point model, treating neurons as nodes that tally inputs and pass the sum through an activation function. “Very few people have taken seriously the notion that a single neuron could be a complex computational device,” said Gary Marcus, a cognitive scientist at New York University and an outspoken sceptic of some claims made for deep learning:

“The whole idea — to get smart cognition out of dumb neurons — might be wrong.”
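The XOR behaviour described above can be illustrated with a small Python sketch. It searches a grid of weights and thresholds for a single threshold unit that computes XOR (none exists, because XOR is not linearly separable) and contrasts this with a unit that fires only for moderate input. The band-shaped response and its `low` and `high` bounds are assumptions made for illustration, not the model used in the paper.

```python
from itertools import product

# Truth table for exclusive OR.
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def point_neuron(x, w, theta):
    """Classic threshold unit: fire if the weighted sum reaches theta."""
    return 1 if w[0] * x[0] + w[1] * x[1] >= theta else 0


def band_dendrite(x, low=0.5, high=1.5):
    """Hypothetical compartment that spikes for moderate drive but not strong drive."""
    drive = x[0] + x[1]
    return 1 if low <= drive < high else 0


def computes_xor(fn):
    return all(fn(x) == y for x, y in XOR.items())


# Try every weight/threshold combination from -4.0 to 4.0 in steps of 0.5.
grid = [i / 2 for i in range(-8, 9)]
point_solutions = [
    (w1, w2, t)
    for w1, w2, t in product(grid, repeat=3)
    if computes_xor(lambda x: point_neuron(x, (w1, w2), t))
]

print(len(point_solutions))         # prints 0
print(computes_xor(band_dendrite))  # prints True
```

The grid search is only a demonstration; the underlying reason is that a threshold on a weighted sum can never fire for each input alone while staying silent for both together.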

Yoshua Bengio’s proposal: Move from System 1 to System 2

At last year’s Conference on Neural Information Processing Systems (NeurIPS 2019), Yoshua Bengio, one of the three pioneers of deep learning and a Turing Award winner, delivered a keynote speech that shed light on possible directions that could bring us closer to human-level AI. He is one of many scientists trying to move the field of artificial intelligence beyond prediction and pattern matching toward machines that think like humans. Titled ‘From System 1 Deep Learning to System 2 Deep Learning’, Bengio’s presentation draws on research he and others have performed in recent years. “Some people think it might be enough to take what we have and just grow the size of the dataset, the model sizes, computer speed, to just get a bigger brain,” Bengio said in his opening remarks. This mindset has created a ‘bigger is better’ mentality, pushing some AI researchers to seek improvements and breakthroughs by creating larger and larger AI models and datasets.

While size is arguably a factor, and we still don’t have any neural network that matches the human brain’s 100-billion-neuron structure, current AI systems suffer from flaws that will not be fixed by making them bigger. Current deep learning systems “make stupid mistakes” and are “not very robust to changes in distribution,” Bengio remarked. Despite their limits, they have produced many useful ‘System 1’ applications, especially in the domain of computer vision. AI algorithms now perform tasks like image classification, object detection and facial recognition with accuracy that often exceeds that of humans. But there are limits to how well ‘System 1’ works, even in areas where deep learning has made substantial progress.

Moving from a ‘System 1’ to a ‘System 2’ mindset, Bengio described the following scenario: Imagine driving in a familiar neighbourhood. You can usually navigate the area subconsciously, using visual cues that you’ve seen hundreds of times. You don’t need to follow directions. You might even carry on a conversation with other passengers without focusing too much on your driving. But when you move to a new area, where you don’t know the streets and the sights are new, you must focus more on the street signs, use maps and get help from other indicators to find your destination. The latter scenario is where your ‘System 2’ cognition comes into play. It helps humans generalize previously gained knowledge and experience to new settings. “You are generalizing information in a more powerful way and you are doing it in a conscious way that you can explain,” Bengio said.

It is widely accepted that the lack of causality is one of the major shortcomings of current machine learning systems, which are centred on finding and matching patterns in data. Bengio believes that future deep learning systems that can compose and manipulate named objects and semantic variables will help move us toward AI systems with causal structures, bringing us closer to human-level AI.

Conclusion

It seems clear that neuroscience, with its ongoing research on single neurons, combined with new algorithms developed by the AI research community, provides a realistic path towards human-like AI. Getting there requires some fundamental breakthroughs, largely driven by computational neuroscience. As brain technology improves, observing single neurons and associating their activity with human behaviour should provide new insights into the scope and functionality of intelligence.