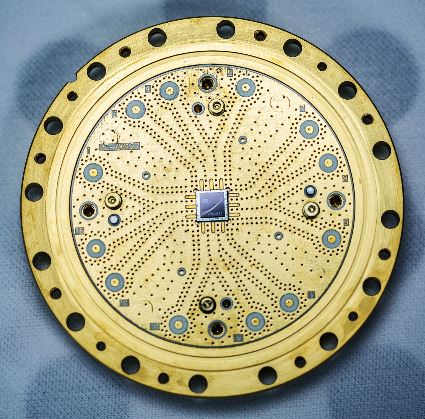

An 8-qubit quantum processor built by Rigetti Computing.

Introduction

To advance AI and deep learning further, three issues need to be addressed. They concern software as well as hardware:

Time: It can take weeks to train deep-learning networks. This does not take into account the weeks or months needed to define the problem and to program the networks before they reach the required performance level.

Cost: Computer time on hundreds of graphics processing units (GPUs) is expensive. Renting 800 GPUs from Amazon’s cloud computing service for just one week costs around $100,000. This does not include the labour cost of carrying out the AI project, which engages high-salaried individuals for weeks or months.

Data: In many cases the lack of labelled data in sufficient quantity simply makes it impossible to carry out the project. Good ideas remain unexplored once it becomes clear that the training data will not be available at an affordable price.
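To put the cost figure above in perspective, it can be broken down into a price per GPU-hour. The following is a minimal sketch that uses only the numbers quoted in the text (800 GPUs, one week, about $100,000); the function name `cost_per_gpu_hour` is purely illustrative, and real cloud pricing varies by instance type and region.

```python
HOURS_PER_WEEK = 7 * 24  # 168 hours


def cost_per_gpu_hour(total_cost_usd, gpu_count, weeks=1):
    """Average price of one GPU for one hour, given a total rental bill."""
    gpu_hours = gpu_count * weeks * HOURS_PER_WEEK
    return total_cost_usd / gpu_hours


# Figures quoted in the text: 800 GPUs for one week at roughly $100,000.
rate = cost_per_gpu_hour(100_000, 800)
print(f"{rate:.2f} USD per GPU-hour")  # prints "0.74 USD per GPU-hour"
```

At roughly 74 cents per GPU-hour, the individual unit is cheap. It is the scale of hundreds of GPUs running around the clock that drives the bill.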

Major software efforts are under way to reduce the data-labelling bottleneck. The next wave of progress will come from Generative Adversarial Nets (GANs) and Reinforcement Learning, possibly with some support from Question Answering Machines (QAMs) such as IBM’s Watson. However, handling the rising complexity of these networks requires increased hardware performance. A three-way race for future AI applications, based on completely different hardware technologies, can be identified:

- High-Performance Computing (HPC)

- Neuromorphic Computing (NC)

- Quantum Computing (QC)

While HPC will remain the major commercial hardware component for AI applications for the next few years, both NC and QC are gradually moving from the research stage to industrial production, with first applications challenging the current dominance of HPC. Moreover, increased government funding is accelerating this process as the competition for global leadership in AI intensifies.

High-Performance Computing

The growing application of deep neural networks has created a ‘win-win’ situation for both the AI application providers and the chip manufacturers. A key to today’s business model, also referred to as ‘open-source’, is the availability of a cloud platform for the development and storage of AI applications. Since 2016, every major player in AI has made its AI platforms ‘open-source’, investing heavily in new data centres. While Intel, Nvidia, and other traditional chip makers are rushing to capitalize on the new demand for GPUs (graphics processing units), others like Google and Microsoft are exploring completely new frontiers by developing their own proprietary chipsets to make their deep-learning platforms more attractive. Currently Google seems to lead the innovation race, combining TensorFlow, its powerful general-purpose AI software tool, with the proprietary TPU (Tensor Processing Unit) chipset.

Deep learning has benefited greatly from these accelerated computing devices and has received significant support from device manufacturers in the form of deep-learning-specific libraries. General-purpose GPUs and proprietary chipsets such as Google’s are the basic building blocks of today’s HPC platforms. However, silicon-based chip production is finally reaching its limits. One problem is the heat that is generated when more and more silicon circuitry is jammed into the same small area. Top-of-the-line microprocessors currently have circuit features that are around 14 nanometers across, which is smaller than most viruses. Reducing this further, for example to 3 nanometers, is likely to introduce quantum uncertainties, making the chips unreliable. To the chip makers the end of Moore’s law is not just a technical issue; it is also an economic one. Some companies, notably Intel, are still trying to shrink the chips, but every time the scale is halved, manufacturers need a whole new generation of ever more precise photolithography machines. Building a new production line today requires an investment typically measured in billions of US dollars, something only a handful of companies can afford. In summary, it is foreseeable that the scaling effects which have driven the production of computer hardware based on the von Neumann architecture for many years will come to an end within the next two decades.

Neuromorphic Computing

The human brain is extremely complex, comprising 100 billion brain cells with trillions of synapses connecting the cells. We understand how individual neurons and their components behave and communicate with each other and, on the larger scale, which areas of the brain are used for sensory perception, action and cognition. However, we know less about the translation of neural activity into behavior, such as turning thought into muscle movement.

Neuromorphic or Spiking Neural Networks (SNNs) are based on observations of how brains work. They differ significantly from the neural networks (NNs) used in AI deep learning. Real neurons send selective signals with data encoded in a way that is not yet understood. Research is under way to find out whether the data is encoded in the amplitude, the frequency, or the latency between spikes.
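To make the idea of spike-based signalling more concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook simplification of a spiking neuron. It is not taken from the article or from any of the chips discussed here; the function name `simulate_lif` and the parameter values (`threshold`, `leak`, `reset`) are illustrative assumptions.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    Returns the list of time steps at which the neuron emits a spike.
    All parameter values are illustrative, not biologically calibrated.
    """
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        # The membrane potential decays (leaks) and integrates new input.
        potential = leak * potential + current
        if potential >= threshold:
            # Crossing the threshold emits a spike and resets the potential.
            spike_times.append(t)
            potential = reset
    return spike_times


# The same neuron driven by a weak and by a strong constant input.
weak_spikes = simulate_lif([0.3] * 20)
strong_spikes = simulate_lif([0.6] * 20)

print(weak_spikes)    # [3, 7, 11, 15, 19]
print(strong_spikes)  # [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
```

In this toy model every spike has the same amplitude. A stronger input shows up as a higher spike frequency and a shorter latency to the first spike, which illustrates two of the candidate encodings mentioned above.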

Neuromorphic computing architectures have historically been developed with one of two goals in mind: either developing custom hardware devices that accurately simulate biological neural systems in order to study biological brains, or building computationally useful architectures that are inspired by the operation of biological brains and share some of their characteristics. Like the human brain, which consumes about 20 W of power, neuromorphic chips require orders of magnitude less power than conventional GPUs. Among neuromorphic devices developed for computational purposes based on SNNs, IBM’s TrueNorth chip, announced in 2014, was the world’s first fully digital deterministic neuromorphic chipset. After several iterations, TrueNorth now has 4,096 neurosynaptic cores with a total of 1 million neurons and 256 million synapses, which is roughly equivalent to the brain capacity of a bee.

One of the two largest scientific projects ever funded by the European Union, the one billion EUR Human Brain Project (HBP) aims to develop an ICT-based scientific research infrastructure to advance neuroscience, computing and brain-related medicine. Started in 2013 and scheduled to end in 2023, the HBP has brought together scientists from over 100 universities, helping expand our understanding of the brain and spawning somewhat similar initiatives across the globe, including BRAIN in the US and the China Brain Project. HBP’s Neuromorphic Computing Platform consists of two research programs, SpiNNaker and BrainScaleS. Within the last two years a 48-chip board has been developed as the building block for SpiNNaker machines. The goal is to be able to simulate a single network consisting of one billion neurons, requiring a machine with over 50,000 chips installed.
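The scale figures quoted for TrueNorth and SpiNNaker are easier to compare once they are reduced to per-unit ratios. The sketch below does that arithmetic using only the rounded numbers from the text; the variable names are illustrative. Because the text says "over 50,000 chips", the SpiNNaker results are bounds rather than exact values.

```python
import math

# TrueNorth figures as quoted in the text (rounded values).
truenorth_cores = 4_096
truenorth_neurons = 1_000_000
truenorth_synapses = 256_000_000

neurons_per_core = truenorth_neurons / truenorth_cores        # about 244
synapses_per_neuron = truenorth_synapses / truenorth_neurons  # 256.0

# SpiNNaker target as quoted in the text.
spinnaker_neurons = 1_000_000_000
spinnaker_min_chips = 50_000  # the text says "over 50,000 chips"
chips_per_board = 48

# With at least 50,000 chips, each chip handles at most this many neurons on average.
max_neurons_per_chip = spinnaker_neurons / spinnaker_min_chips  # 20000.0
# And the machine needs at least this many 48-chip boards.
min_boards = math.ceil(spinnaker_min_chips / chips_per_board)   # 1042

print(round(neurons_per_core), synapses_per_neuron)
print(max_neurons_per_chip, min_boards)
```

On these numbers a SpiNNaker chip would carry at most about 20,000 simulated neurons on average, spread over more than a thousand boards, while each TrueNorth neuron has about 256 synapses.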

In early 2018, Intel unveiled Loihi, its first publicly announced neuromorphic computing architecture. “We’ve implemented a programmable feature set which is faithful to neuromorphic architectural principles. Data is distributed in a very fine-grained parallel way which means that we believe Loihi is going to be very efficient for scaling and performance compared to the Von-Neumann architecture applied in conventional systems.”

IBM’s TrueNorth and Intel’s Loihi are a few years away from becoming viable standard neuromorphic hardware products. Research efforts with platforms like SpiNNaker are more focused on modelling neurons than on moving neuromorphic computing to the AI chip front lines. However, adding momentum to the new hardware technology, BrainChip Holdings, a California start-up, is bringing its Akida Neuromorphic System-on-Chip (NSoC) to market in Q3 2019, competing head-on with Intel and IBM. Designed for applications such as advanced driver assistance systems (ADAS), autonomous vehicles, drones and vision-guided robotics, NSoCs aim to bridge the gap between neuroscience and machine learning. According to BrainChip, each NSoC has 1.2 million neurons and 10 billion synapses at an anticipated price of USD 10.00 per chip, significantly below TrueNorth’s or Loihi’s current pricing levels. The Akida NSoC includes sensor interfaces for traditional pixel-based imaging, dynamic vision sensors (DVS), Lidar, audio and analogue signals, along with high-speed data interfaces such as PCI-Express, USB and Ethernet.

Quantum Computing

On October 29, 2018, the European Union jumped into the increasingly globalized race for quantum computing technology with the launch of a one billion EUR Quantum Flagship project intended “to place Europe at the forefront of Quantum innovation.” In September, the U.S. House passed a USD 1.3 billion national quantum initiative, which the Senate approved in December. China had embarked on a national program even earlier, including a reported planned investment of USD 10 billion in a national quantum research centre. Like the U.S. quantum initiative, the EU Quantum Flagship is a ten-year project. It will involve more than 5,000 European researchers. Twenty of the initial 140 submitted projects will be tackled during Quantum Flagship’s early stages. The initiative identifies five main areas of study:

- Quantum Communication

- Quantum Computing

- Quantum Simulation

- Quantum Metrology and Sensing

- Basic Science

Several commercial players – e.g. IBM, Google, Microsoft, Rigetti and D-Wave – are busily pursuing their own paths and there are now three “quantum-cloud” offerings in various stages of roll-out available to quantum researchers. At the Consumer Electronics Show in Las Vegas in January 2019, IBM unveiled a new quantum computer that is more reliable than its previous experimental prototypes, bringing the company a step closer to commercialization of this technology. The machine, which IBM claims to be the first integrated, general-purpose quantum computer, has been named ‘Q System One.’ IBM previously announced a 50-qubit prototype. The more stable 20-qubit ‘Q System One’ is an effort to improve on the existing design, moving all the aspects of the machine into a single air-tight box. The systems will be available through IBM’s existing Quantum Network cloud-based subscription service with focus on the following application areas:

- Chemistry: Material design, oil and gas, drug research

- Artificial Intelligence: Image Classification, machine learning, linear algebra

- Financial Services: Asset pricing, risk analysis, rare event simulation

In late 2018, Professor Michael Hartmann of Heriot-Watt’s Institute of Photonics and Quantum Sciences in Edinburgh announced the Neuromorphic Quantum Computing (Quromorphic) project to build a system that transfers data in the same way neurons do in the human brain. The project is supported by several European universities and research organisations and partially funded by the EU. This ambitious project plans to develop a new generation of small quantum computers capable of making their own decisions. New quantum devices made of superconducting electrical circuits will combine input signals and either generate an output or not, similar to the spiking of real neurons in the brain, mimicking the human decision-making process.

Conclusion

High-performance computing will likely continue to improve over the next several years as new types of chips and memory architectures are introduced to overcome the performance bottleneck of the von Neumann architecture, which shuffles data back and forth between the central processing unit (CPU) and memory.

However, neuromorphic and quantum processors are laying out competitive roadmaps to advance AI to new levels of functionality and efficiency. Neuromorphic Spiking Neural Nets (SNNs) promise to be powerful self-learners that work with smaller, unlabelled training sets and can transfer knowledge from one domain to the next. Quantum computers will help to remove the time and cost barriers to handling tasks which so far have been out of reach of high-performance computing, thereby further advancing science into new territories. Before we get there, however, many social, ethical and economic issues which we are facing today need to be resolved.

Hi Peter

The link is worthwhile reading. Thank you for the well-made synopsis, thank you. jpr

Hello Peter,

I’m reading with great interest your excellent essay series, this one with a comprehensive description of tech-method trends.

The short-term practical progress continues too and will likely have a similar catalyst effect as future commercial Quantum tech (the actual ongoing changes will reshape many things and then also provide a revised base for Quantum tech).

In addition some decisions will be required to opt for using actual/past data sets (which contain besides the good stuff, also the incompetent often repeated errors and mess of humans) or define/project some positive future oriented models and data sets for deep learning (‘how to get there’).

Probably some alarm bells went off in US companies when Huawei announced the first 7 nm processor (the mobile Kirin 980) last year.

For the past two months it has been sold to the public in large quantities in mobile phones (it has a few built-in native AI enablers and algorithms).

The consolidated sum of these xxx million devices, which are also connected via cloud-based AI (e.g. Google assist/home) and social platforms, works, is powered by every mobile brand and phone owner, and represents an immense computing and communication scale with excessive redundancy.

After the last US election manipulation (top-down), we now see in France how effectively social media and high-powered mobile devices and communications infrastructure are used bottom-up (grassroots) by the Gilets Jaunes. Most news is streamed via mobile phones (‘by anyone’).

Google just posted a white paper, https://ai.google/static/documents/perspectives-on-issues-in-ai-governance.pdf (rather soft).

Sure ; ) , and after reading it I can further confirm that your essay series provides the most comprehensive and best information, insight and conclusions.

thank you for the essays and best greetings

Hannes