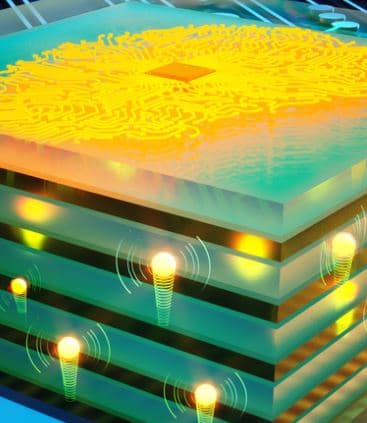

A new area of artificial intelligence called analog deep learning promises faster computation with a fraction of the energy usage. Programmable resistors are the key building blocks in analog deep learning, just as transistors are the core elements of digital processors.

A multidisciplinary team of MIT researchers utilized a practical inorganic material in the fabrication process that enables their devices to run about 1 million times faster than the synapses in the human brain.

“Once you have an analog processor, you will no longer be training networks everyone else is working on. You will be training networks with unprecedented complexities that no one else can afford to, and therefore outperform them all.”
