King John signing the Magna Charta 1215 Picture Credit: Public Domain

Introduction

Marvin Minsky, one of the founding fathers of AI, was once asked about machine emotions and replied: “The question is not whether intelligent machines can have any emotions, but whether machines can be intelligent without any emotions”. Indeed, without emotions we would not have survived as a species, and our intelligence has improved as a result of our emotions. Furthermore, we cannot detach our emotions from the way in which we apply our intelligence. For example, a physician may decide on medical grounds that the best treatment option for a very elderly hospital patient would be a surgical procedure. However, the physician’s emotional empathy with the patient might override this view. Taking the age of the patient into account, he or she may decide that the operation is not worth the emotional stress and risk it would impose on the patient, and therefore rule it out. Emotional intelligence, as well as technical knowledge, is used to decide the treatment options. Of course, machines could never feel emotions the way we humans do. Nevertheless, they could simulate emotions that enable them to interact with humans in more appropriate ways.

Ray Kurzweil explains in his book “How to Create a Mind” that, in theory, any neural process can be reproduced digitally in a computer. For example, sensory feelings such as being hot or cold could be simulated from the environment if the machine is equipped with the appropriate sensors. However, it does not always make sense to try to replicate in a machine everything a human being feels. For example, physiological feelings such as hunger and tiredness alert us to the state of our body and are normally triggered by hormones and our digestive system.

Computational models for the processing of emotions in Humanoids and Avatars

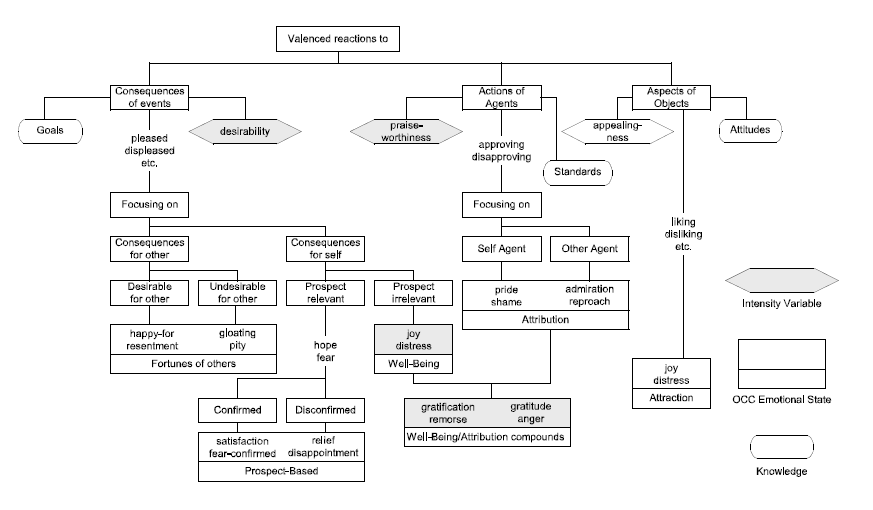

Emotions play a central role in most forms of natural human interaction, so we can expect computational methods for the processing and expression of emotions to play a growing role in human-computer interaction. Human-like avatars designed as screen-based interactive assistants, and humanoid robots designed as 3-D replicas of humans, require an emotional ‘engine’ to communicate with real humans. Several emotion models are available. Ortony, Clore and Collins developed a computational emotion model, often referred to as the OCC model, which has established itself as the standard model for emotion analysis (see Fig. 1). Many developers of avatars or humanoids believe that the OCC model will be all they ever need to equip their characters with emotions. The model specifies 22 categories of emotion, which represent reactions to the consequences of events, to the behaviour of others or to objects. The model also offers a structure for the variables, such as the likelihood of an event or the familiarity of an object, that determine the intensity of the emotion types. It is complex and detailed enough to cover most situations an emotional artificial character (avatar or humanoid) might have to deal with.

Fig. 1: OCC model of emotion

The process that artificial characters follow – from the initial categorization of an event to the resulting behavior – can be split into four phases:

- Categorization – In the categorization phase the character evaluates an event, action or object, resulting in information on what emotional categories are affected.

- Quantification – In the quantification phase, the character calculates the intensities of the affected emotional categories.

- Interaction – The categorization and quantification define the emotional value of a certain event, action or object. This emotional value will interact with the current emotional categories of the character.

- Mapping – The OCC model distinguishes 22 emotional categories. These need to be mapped to a possibly lower number of different emotional expressions.
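
The four phases above can be illustrated with a minimal sketch. It is not the OCC model itself: it covers only four event-based categories (joy, distress, hope, fear) out of the 22, and every name, scale and formula in it (`Event`, `Character`, `decay`, intensity as desirability times likelihood, the expression table) is an assumption made for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from a few emotion categories to fewer expressions.
EXPRESSION_MAP = {
    "joy": "smile",
    "hope": "smile",
    "distress": "frown",
    "fear": "frown",
}


@dataclass
class Event:
    desirability: float  # assumed scale: -1.0 (undesirable) to 1.0 (desirable)
    likelihood: float    # assumed scale: 0.0 to 1.0; 1.0 means it has happened


@dataclass
class Character:
    state: dict = field(default_factory=dict)  # category -> current intensity
    decay: float = 0.5                         # assumed fading factor per event


def categorize(event: Event) -> str:
    """Phase 1: decide which emotion category the event affects."""
    confirmed = event.likelihood >= 1.0
    if event.desirability >= 0:
        return "joy" if confirmed else "hope"
    return "distress" if confirmed else "fear"


def quantify(event: Event) -> float:
    """Phase 2: compute an intensity (assumed formula, not from OCC)."""
    return abs(event.desirability) * event.likelihood


def interact(character: Character, category: str, intensity: float) -> None:
    """Phase 3: blend the new emotional value into the current state."""
    for name in character.state:
        character.state[name] *= character.decay  # older emotions fade
    updated = character.state.get(category, 0.0) + intensity
    character.state[category] = min(1.0, updated)


def map_expression(character: Character) -> str:
    """Phase 4: map the dominant category to one of fewer expressions."""
    if not character.state:
        return "neutral"
    dominant = max(character.state, key=character.state.get)
    return EXPRESSION_MAP.get(dominant, "neutral")


def react(character: Character, event: Event) -> str:
    """Run all four phases for one event and return the expression."""
    category = categorize(event)
    intensity = quantify(event)
    interact(character, category, intensity)
    return map_expression(character)
```

For instance, calling `react(Character(), Event(desirability=0.8, likelihood=1.0))` categorizes the event as joy and maps it to a smile. A later undesirable but uncertain event on the same character is categorized as fear and competes with the faded joy in the interaction phase.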

During the development process some missing features of the model might become apparent. These missing features and the context in which emotions arise are often underestimated and have the potential to turn the artificial character into an unconvincing clown-like character.

Current research about social interaction with Avatars and Humanoids

The human likeness, naturalness of movement and emotions expressed and evoked by avatars and humanoids are important factors influencing their perception. These and other social factors become particularly relevant when screen-based avatars interact with humans. In an experiment conducted by McDonnell et al., participants were asked to judge whether an avatar was lying or telling the truth. There were three avatars, each rendered differently (cartoon, semi-realistic, highly realistic) and combined with an audio track. The authors expected that the more unappealing characters would bias the participants toward thinking that they were lying. No such effects were found. The lie ratings, and the bias toward believing the speaker, were similar for the different renderings and for videos of real humans. However, participants may have extracted most of their information from the audio track, which was identical across all stimuli.

Lucas et al. take human-virtual character interaction to another level by suggesting that in some particular kinds of social interaction, virtual characters might be more successful than real humans. They showed that participants were more willing to disclose health information to an avatar than to a human. When conversing with the avatar, participants hesitated less and showed their emotions more openly.

Obviously, these interactions are not the only factors influencing how humans perceive artificial agents. Humanoids and avatars can be programmed to display distinct personalities, and these personalities influence whether they are liked or not. For example, Elkins and Derrick showed that a virtual interviewer is trusted more when the pitch of its voice is lower at the start of the interview and when it smiles at the beginning. However, how a virtual character’s personality is perceived depends largely on context and on the personality of the perceiver. An avatar that takes on the role of a healthcare assistant should have a different personality from one that works as a security guard.

For those designing human-like characters to investigate human cognition in neuroimaging research, the evidence so far runs contrary to the predictions of the uncanny valley hypothesis. It is not the most realistic-looking virtual characters that evoke an eerie feeling, but rather those on the border between the non-human and human categories, especially if they are combined with human-like motion.

Should Humans become Cyborgs?

If the singularity were to happen in a way that truly takes into account human emotion, it must transcend the silicon world. It would have to be part organic and part machine. Perhaps this is the only way that the singularity could actually happen – not as a computerized event that takes place somewhere in the depths of a machine, but in each of us as technologically enhanced bodies. The singularity, if it were to completely account for the full range of human experience, would of necessity retain the humanity inherent in our bodies. Perhaps this is already happening, as some have argued that we are not becoming cyborgs; we are already cyborgs.

Graham et al. argue that “technologies are not so much an extension or appendage to the human body, but are incorporated, assimilated into its very structures. The contours of human bodies are redrawn: they no longer end at the skin.” Because we have been integrating technology into our bodies for many years now, how we define our humanity as we move forward has been called into question. “Are we genes, bodies, brains, minds, experiences, memories, or souls? How many of these can or must change before we lose our identity and become someone or something else?” Arguably, what defines us is none of these alone but rather our capacity for emotion, which is an intrinsic part of our embodiment. Without emotions, there is no humanity to retain, and without the body, there are no emotions.

Conclusion

AI has emerged thanks largely to advances in neural networks modelled loosely on the human brain. AI can find patterns in massive unstructured data sets, improve its performance as more data becomes available, identify objects quickly and accurately, and make ever more and better recommendations in decision-making. As human emotions become subject to the immense learning capacity of AI, with screen-based avatars conversing emotionally with humans, we are entering a danger zone which clearly requires a rebooting of human rights and the application of ethics in the design of human-like conversational systems. Augmenting an AI focused on mathematics and statistics with an AI focused on emotion sets the stage for unprecedented power exercised by a few tech conglomerates. The “Magna Carta” of 1215, whose full Latin name, Magna Carta Libertatum, means “Great Charter of the Liberties”, was an agreement to settle a power struggle between an unpopular king and a group of rebel barons. It was also an important building block for the Enlightenment and Western democracy. Today it is the balance between the ever-increasing power of the new potentate, the intelligent machine, and the role of human beings that needs to be defined and regulated. We have a legal framework defining the rights and responsibilities among humans. Now we need a similar framework that defines the rights and responsibilities between humans and intelligent machines, not only to reap the huge potential benefits AI can provide, but also to prevent the huge potential dangers and threats that AI can pose.