First Industrial Revolution: Opening of the Liverpool and Manchester Railway in 1830

Introduction

Equipped with infrared and 3D sensors at its front-facing camera, Apple’s new iPhone X provides face recognition for access control. The face scan and unlock system is almost instantaneous and requires no buttons to be pressed, being always “on” and ready to read your face. Millions of users depend on face recognition to check their email, send a text, or make a call; it is quick and easy. Face recognition is also used to examine, investigate, and monitor people. In China, police use face recognition to identify and publicly shame people for the crime of jaywalking. In Russia, face recognition has been used to identify anti-corruption protesters, exposing them to intimidation or worse. In the United States, more than half of all adults are in a face recognition database that can be used for criminal investigations, simply because they have a driver’s license. Governments are not the only users of face recognition. Retailers use the technology in their stores to identify suspected shoplifters, and social media platforms increasingly integrate face recognition into their user experience.

Face recognition and sensing technology is also changing the future of employer recruiting with more companies turning to artificial intelligence (AI) to improve the hiring process. Unilever, for example, has partnered with human-resource service provider HireVue. Candidates who have passed an IQ test take a HireVue video interview, using a computer or smart device equipped with a camera. The company’s software platform uses AI to screen the interviews and to narrow them down to a small pool of candidates based on their speech, facial expressions and body language.

The Rise of Emotionally Intelligent AI

Affectiva is one of the leading companies in emotion/sentiment analysis. Its emotion AI technology is already in use by 1,400 brands worldwide, many of which use it to judge the emotional effect of adverts by asking viewers to switch on their cameras and record their own facial reactions while a video is played. The facial images are analyzed with deep learning algorithms, which classify them according to the feelings of the viewer. To carry out this task, Affectiva has analyzed and classified millions of photos with various facial expressions. In a recent interview, co-founder and CEO Rana el Kaliouby talked about her company’s work to develop what she calls “multi-modal emotion AI”. Currently, a great deal of valuable data that communicates our emotional state is lost if machines cannot read our expressions, gestures, speech patterns, tone of voice or body language.

“There’s research showing that if you’re smiling and waving or shrugging your shoulders, that is 55% of the value of what you’re saying – and then another 38% is in your tone of voice. Only seven per cent is in the actual choice of words you are saying. So, if you consider the existing sentiment-analysis market which looks at keywords or specific words being used on Twitter with no video applied, you are only capturing 7% of how humans communicate emotion, and the rest is basically lost in cyberspace.”

Sensing technology and emotion recognition software are continuously improving. Besides its ability to track basic facial expressions for emotions such as sadness, happiness, anger and surprise, emotion recognition software can also capture what experts call “micro-expressions”, subtle body language cues that may betray an individual’s feelings without their knowledge. Micro-expressions, involuntary and fleeting facial movements that reveal true emotions, hold valuable information for scenarios ranging from job interviews and interrogations to media analysis. Typically, micro-expressions are indicative of an emotion that people are trying to conceal. They occur on various regions of the face, last only a fraction of a second, and are universal across cultures. In contrast to macro-expressions like big smiles and frowns, micro-expressions are extremely subtle and nearly impossible to suppress or fake. Scientists from the University of Oulu in Finland have built and tested a machine capable of spotting and recognizing micro-expressions in real time with a camera recording at 100 frames per second. To get the technology to work, the team used a single frame that shows the subject’s face, which was then compared to other frames to show whether the expression changed. According to the team’s initial tests, the technology produced better results than human observation with no video involved.
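The reference-frame comparison described above can be illustrated with a minimal sketch. It is not the Oulu team’s actual method: the function names, the mean-absolute-difference measure, the threshold and the half-second cut-off are all assumptions chosen for illustration, and frames are represented as plain lists of grayscale pixel values.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale image as rows of 0-255 pixel values


def mean_abs_diff(reference: Frame, frame: Frame) -> float:
    """Average absolute pixel difference between two equally sized frames."""
    total = 0
    count = 0
    for ref_row, row in zip(reference, frame):
        for ref_px, px in zip(ref_row, row):
            total += abs(ref_px - px)
            count += 1
    return total / count


def spot_brief_changes(
    frames: List[Frame],
    fps: float = 100.0,           # recording rate mentioned in the text
    threshold: float = 10.0,      # hypothetical "expression changed" cut-off
    max_duration_s: float = 0.5,  # hypothetical upper bound for "fleeting"
) -> List[Tuple[int, int]]:
    """Return (first, last) frame indices of short-lived deviations.

    The first frame serves as the neutral reference. A run of frames that
    differs from it by more than `threshold` and returns to neutral within
    `max_duration_s` is reported. Runs still open at the end of the
    recording are ignored because their duration is unknown.
    """
    reference = frames[0]
    events: List[Tuple[int, int]] = []
    start = None
    for i, frame in enumerate(frames):
        changed = mean_abs_diff(reference, frame) > threshold
        if changed and start is None:
            start = i  # a deviation from the reference begins
        elif not changed and start is not None:
            duration = (i - start) / fps
            if duration <= max_duration_s:
                events.append((start, i - 1))
            start = None  # back to the neutral face
    return events
```

At 100 frames per second, a deviation lasting three frames spans 0.03 seconds and would be reported, while one lasting 80 frames (0.8 seconds) would be treated as an ordinary, longer expression. A real system would also need face alignment and a classifier to recognize which emotion the movement expresses; this sketch only covers the spotting step.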

The Relationship between Emotions and Feelings

Although the two words are used interchangeably, there are distinct differences between feelings and emotions. Antonio Damasio, a professor of neuroscience, psychology and philosophy at the University of Southern California and author of several books on the subject, explains it as follows:

Feelings are mental experiences of body states, which arise as the brain interprets emotions, which themselves are physical states arising from the body’s responses to external stimuli.

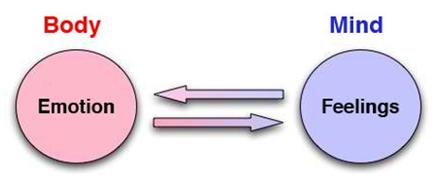

The following provides a graphic interpretation and a more detailed explanation of Damasio’s statement. Emotions are experienced by the body and feelings are experienced by the mind. Both interact with each other based on external stimuli to the body or internal stimuli from the mind. Emotions are converted to mind-based feelings, but feelings can also be converted to body-based emotions:

Emotions create biochemical reactions in our body altering our physical state. They originally helped our species survive by producing quick reactions to threat or reward. Emotional reactions are coded in our genes and while they do vary slightly individually and depending on circumstances, they are generally universally similar across all humans and even other species. Emotions precede feelings, are physical, and instinctual. Because they are physical, they can be objectively measured by blood flow, brain activity, facial micro-expressions, and body language.

Feelings originate in the neocortical regions of the brain, are mental associations and reactions to emotions, and are subjective, being influenced by personal experience, beliefs, and memories. A feeling is the mental portrayal of what is going on in your body when you experience an emotion and is the by-product of your brain perceiving and assigning meaning to the emotion. Feelings are triggered by an emotion, involve cognitive input, often subconscious, and cannot be measured precisely. The process also works in reverse. For example, just thinking about something threatening can trigger an emotional fear response within your body.

In his new book ‘The Strange Order of Things’, Damasio writes that bacteria, even in their mindless existence, display what can only be called a sort of ‘moral attitude’. In support of his claim, he adduces the various ways in which bacteria behave that bear a striking resemblance to human social organization. The implication is, then, that “the human unconscious literally goes back to early life-forms, deeper and further than Freud or Jung ever dreamed of”. Damasio’s argument is that we are directly descended not only from the apes, but from the earliest wrigglers at the bottom of the primordial rock pool. Throughout history, western philosophy has favored mind over body. This conception of a dualistic humanness is what Damasio wishes to dismantle. To him, what the body feels is every bit as significant as what the mind thinks, and further, both functions are inextricably intertwined.

Conclusion

Emotions are a key asset of humans. They provide the link between the body and the brain. Consequently, sensing emotions with AI provides access to a person’s feelings and subsequently their mind. This raises three fundamental interrelated issues:

- Ethical concerns: As machine intelligence and biometric and emotion sensing advance, the data being collected might bring us to the point where a machine knows more about us than we are aware of ourselves. Is it acceptable that machine intelligence is applied to close in on our embedded emotions and our feelings without our consent?

- AI concerns: AI can provide valuable tools to augment or enhance cognitive tasks and advance humanity to new levels of prosperity. The potential misuse, for example by influencing our human decision-making to the benefit of a few, needs to be addressed as well. We need a strong legal framework to protect us against privacy intrusion.

- Humanitarian concerns: Despite all scientific and technological progress, it remains vital that we strengthen our personality and move our subconscious to a conscious level. Fostering awareness will set a barrier against Yuval Harari’s ‘Homo Deus’ scenario, where life is reduced to data and algorithms, abandoning human experience and consciousness.

Before the opening of the first major railway line in 1830, from Liverpool to Manchester using steam engines, there were fears it would be impossible to breathe while travelling at a velocity of 15mph, or that the passengers’ eyes would be damaged by having to adjust to the motion. Little more than 20 years later, these fears had been allayed, and this new form of transport marked the beginning of the first industrial revolution. To get there, many financial and technical obstacles had to be overcome, and governments played a vital role in providing the social and legal framework necessary to realize its potential benefits. The adoption of AI by our society has far-reaching consequences, probably far beyond those which defined previous industrial revolutions. To reap its benefits and avoid social disruption, we urgently need government comprehension and involvement.

Hello Peter, another great essay in your regular series, much appreciated, hence some feedback below.

Regarding the emotion-feeling interaction you describe: I recently read the newer edition of ‘Darm mit Charme’ by Giulia Enders. In a brief new chapter, she now also outlines the digestive system’s connection to, interaction with, and influence on the brain.

My food intake pretty much influences my emotions and, later, my feelings. It also determines whether I can cruise through a day with good energy and a clear mind, and whether it sets a base for good feelings or not.

Human consciousness is unlikely to be based merely on rational brain effort; it is a more complex, complete (and very personal) body experience. AI robots are unlikely to match this, or want to match it, at the same scale, so what they have should probably not be labeled consciousness but rather some kind of practical/functional intelligence metric.

A few weeks ago, my brother visited on a sunny Sunday. People were enjoying themselves and talking, and did not stare at their mobile devices as they do when commuting to and from work. We also had a coffee at Place Garibaldi in Nice. My brother mentioned that there were no police despite recent terror events. I had no answer, but a few minutes later we saw that we were directly facing sophisticated video surveillance cameras (we greeted them for fun and joked that there are still humans controlling the scenes). The whole square and city are peppered with cameras. They are currently monitored by humans assisted by AI, and this will probably evolve to full AI here as well. The system would then only trigger alerts when a person is profiled as drunk based on gestures, motions and body-part (nose) temperature 😉, or sound an alarm or voice a warning when a person attempts to cross the street despite car traffic.

Changes are unfolding steadily: fewer police officers and postal workers are on the street for human interaction. The city is supposed to destroy the recordings after some time, but I would assume that backups are leaked somewhere to intelligence services for longer-term storage.

Your final sentence is of high importance. Currently I only see one major initiative and baseline: https://www.eugdpr.org/ . Many companies struggle to implement it on time, but it outlines a good base.

Government should also be more proactive in primary schools to prepare children for lifelong learning in a more frequently changing future context.

greetings Hannes