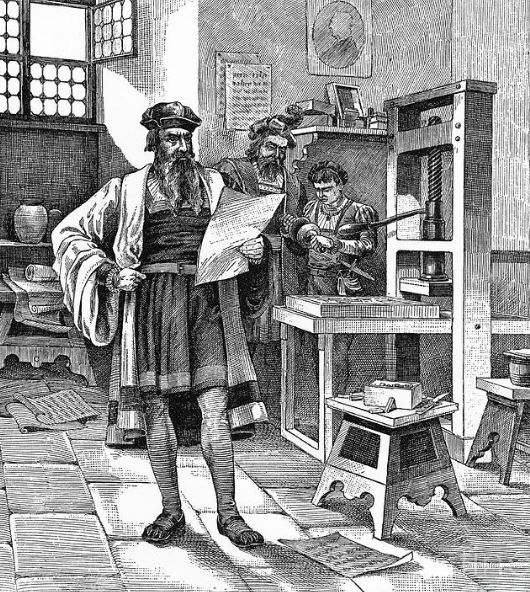

Gutenberg and his printing press in 1468 Picture Credit: Wikipedia

Introduction

Long before writing became an option, people communicated in what is called an oral culture. Humans are born with a natural capacity for language, while most other species have little capacity for anything beyond a few basic signals. Symbolic language allowed humans to think hypothetically, to organize experiences, to conceptualize time and to plan for the future. Studies comparing human and primate language abilities using functional Magnetic Resonance Imaging (fMRI) have shown that the human brain has special segments devoted to language. In other words, we are ‘pre-wired’ to talk and listen. Unlike language, reading and writing must be learned, as they are not pre-wired in the human brain. Until the printing revolution in the 1450s, most reading and writing took place among a very narrow elite in scholarly, religious or government institutions.

Writing allowed humans to conserve their intellectual resources, save what needed to be saved without having to keep all the details in their heads, and devote their major energies to advancing knowledge. With the development of writing, the nature of knowledge changed dramatically and realigned human consciousness. As humans moved from a sound-based to a vision-based culture, their ways of thought and expression changed. The development of writing, with its use of a codifiable visual medium, created cultures with unique characteristics that differentiated them from the earlier oral cultures. Meaning was now conveyed by carefully chosen and placed words, fixed through writing in a static medium. By eliminating the need to rely on memory, writing encouraged the advance of abstract and analytic thought.

1450: Johannes Gutenberg’s Printing Press and the democratization of knowledge

Gutenberg’s printing press, developed between 1436 and 1450, introduced several key innovations that made printing books efficient and economical. It marked a dramatic improvement on earlier printing methods, in which cloth, paper or other media were brushed or rubbed repeatedly to achieve the transfer of ink. The invention and global spread of the printing press, used mainly for texts, was one of the most influential events of the second millennium. By 1500, printing presses in operation throughout Western Europe had already produced more than twenty million books. The printing revolution marked the turning point in the transformation of a backward medieval region into modern Europe. The sharp increase in literacy broke the monopoly of the literate elite on education and learning and bolstered the emerging middle class. Publishing houses were created to produce, control, and distribute knowledge from a central location. Publishers became the new authority in deciding which knowledge was to become part of the shared global knowledge and which was not.

The convergence of paper mill technology, which produced cheap paper, with engine-driven printing presses and the trains that transported daily newspapers, journals and books across the country resulted in a fundamental democratization of text-based knowledge. At the same time, letter writing became the most important means of person-to-person communication. The first United States Post Office was created in 1775 and Benjamin Franklin was named the first Postmaster General. By 1828, the United States had 7,800 post offices, which made it the largest postal system in the world, delivering mail reliably and on schedule.

1850: Advancing Communication Technology with analogue signal processing

About 400 years after the invention of the printing press, research and development of communication-related products and services started to take off. Economic and market incentives tied to growing consumer demand set the stage for enhancing communication with images, video and sound. The available technology relied on the handling of analogue signals, which limited the quality and capacity of the information that could be communicated. Yet the gains in efficiency and convenience were so significant that within a few decades several new communication applications emerged:

- Telephony:

In 1876, Alexander Graham Bell achieved the transfer of sound along a wire and realized that the same concept could be applied to human speech, as it is composed of complex sound vibrations.

- Photography:

French inventor Joseph Nicéphore Niépce captured the world’s first photographic image as early as 1822 with a process called heliography. Later contributions to the advancement of photography included the Kodak roll film camera, invented by the American George Eastman in 1888.

- Television:

Television made its official debut at the 1939 New York World’s Fair. Because it was originally regarded as a luxury appliance, radio broadcasting continued to be the favoured form of communication. As costs fell, quality improved and content expanded, TV became the most popular means of mass communication.

- Recording and Storage:

Analogue recording is a technique used for storing analogue audio, video or data. The information is magnetically encoded and typically stored on tape. Phonographic disks became popular in the 1940s for the distribution of analogue recorded music.

Despite its limitations, analogue communication technology opened a mass market which gained additional momentum as digitization technology emerged in the early 1950s.

1950: Digitization to accelerate information processing and increase storage capacity

The term digitization is used when analogue signal information is converted into binary code.

A binary code represents text, computer processor instructions, or any other data using a two-symbol system. The most widely used two-symbol system consists of the zeros (0) and ones (1) of the binary number system. The binary code assigns a pattern of binary digits, also known as bits, to each character, instruction, etc. For example, a string of eight bits, called a byte, can represent any of 256 possible values and can, therefore, represent a wide variety of different items. The advantage of digitization is the speed and accuracy with which this form of information can be transmitted. However, digitization can only approximate the analogue signal it represents. For example, converting analogue tape recordings of music to a CD and applying compression algorithms to reduce the volume of bits generated reduces the quality of the original soundtrack. The most important impact of digitization, however, came with the invention of the digital computer and its associated control and application software. In 1945, John von Neumann’s draft on the binary architecture and design of the EDVAC computer became the technological basis for all modern computers. In 1956, just 11 years later, IBM announced the 350 Disk Storage Unit, the first computer storage system based on magnetic recording disks and the first to provide random access to stored data. It came with fifty 24-inch disk platters with a total capacity of 5 megabytes and hydraulically operated magnetic recording and reading units, weighed 1 ton, and could be leased for USD 3,200 per month. Starting in the mid-1950s, due to the enormous economic benefits of cutting data handling costs and improving productivity, computing technology gave birth to a new industry segment of hardware and software companies which continues to be a major source of innovation to this day.
With the introduction of the PC around 1975, digital computing became a consumer commodity, laying the groundwork for the invention of new application devices such as game consoles, smartphones and tablets.
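
To make the idea of binary code more concrete, the following minimal Python sketch shows two things described above: how characters map to 8-bit patterns, and why a digitized signal is only an approximation of the analogue original. The function names, the 16-sample sine wave and the 8-bit resolution are illustrative assumptions, not details taken from any historical system.

```python
import math

def text_to_bits(text: str) -> list[str]:
    """Encode text as UTF-8 and show every byte as an 8-bit pattern."""
    return [format(byte, "08b") for byte in text.encode("utf-8")]

def quantize(value: float, bits: int = 8) -> int:
    """Map an analogue value in [-1.0, 1.0] to one of 2**bits integer levels."""
    levels = 2 ** bits
    clamped = max(-1.0, min(1.0, value))
    return round((clamped + 1.0) / 2.0 * (levels - 1))

def dequantize(level: int, bits: int = 8) -> float:
    """Turn an integer level back into a value in [-1.0, 1.0]."""
    levels = 2 ** bits
    return level / (levels - 1) * 2.0 - 1.0

# One byte has 2**8 = 256 possible values.
print(2 ** 8)              # 256
print(text_to_bits("Hi"))  # ['01001000', '01101001']

# Hypothetical "analogue" signal: one period of a sine wave, sampled 16 times.
samples = [math.sin(2 * math.pi * n / 16) for n in range(16)]
codes = [quantize(s) for s in samples]
restored = [dequantize(c) for c in codes]

# The restored signal is close to the original, but not identical.
max_error = max(abs(a - b) for a, b in zip(samples, restored))
print(max_error)
```

With 8 bits, the largest deviation stays within half a quantization step (about 0.004 on a scale from -1 to 1). Using more bits per sample shrinks the error but never removes it entirely, which is the sense in which digitization only approximates an analogue signal.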

1990: Digitization of data transmission: The Internet as information exchange

Digitization of data transmission started a ‘revolution’ in mass communication in the early nineties. National telephone companies (telcos) soon realized the potential of digital data communication over the copper wires which were originally installed to provide analogue voice services to every home. With the implementation of ISDN as the digital communication standard and the establishment of standards for digital mobile communication, the stage was set for multimedia mass communication. The term ‘internet’ became popular in 1981 when the US Government established TCP/IP (Transmission Control Protocol/Internet Protocol) as the standard for data transmission between computer systems. In 1989, Tim Berners-Lee and his colleagues at CERN developed the hypertext mark-up language (HTML) and the uniform resource locator (URL) to access documents or other information sources via the internet, giving birth to the first incarnation of the World Wide Web (WWW). Today, the World Wide Web is the primary tool billions of individuals use to communicate. By providing platforms for convenience services such as online shopping and for information-exchange services supported by social media applications, the internet has become a gigantic data and information resource. As of June 2019, there were over 4.4 billion internet users. This is an 83% increase in the number of people using the internet in just five years! In 2019, 293 billion emails were sent daily. On average, Google now processes more than 40,000 searches every second (3.5 billion searches per day). All these transactions leave a digital footprint which is monetized by a few high-tech companies. Their phenomenal growth and wealth have led to many socioeconomic and data ownership issues, causing distrust and doubts regarding the long-term sustainability of WWW applications.
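
As a quick plausibility check, the usage figures quoted above can be related to each other with simple arithmetic. The short Python sketch below uses only the numbers from the text; the variable names are illustrative, and the user count five years earlier is a derived estimate, not a figure from the original sources.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

# 40,000 searches per second, extrapolated to a full day.
searches_per_second = 40_000
searches_per_day = searches_per_second * SECONDS_PER_DAY
print(searches_per_day)  # 3456000000, i.e. roughly 3.5 billion

# 4.4 billion users after an 83% increase implies the earlier base.
users_2019 = 4.4e9
growth = 0.83
users_five_years_earlier = users_2019 / (1 + growth)
print(round(users_five_years_earlier / 1e9, 1))  # 2.4 (billion)
```

The per-second and per-day search figures are consistent with each other, and the 83% growth figure implies roughly 2.4 billion internet users five years before June 2019.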

2010: With AI and Machine-Learning to knowledge generation

Artificial Intelligence (AI), a term coined in 1956 at a scientific conference at Dartmouth College, originally rested on the hypothesis that the human brain conceptually functions like a digital computer. In the following years the AI research community went through several cycles of failure, mainly due to unrealistic expectations and the complexity of solving specific problems with the computational models applied. With the ongoing exponential increase in computer performance, it became feasible to apply the model of ‘Artificial Neural Networks (ANNs)’ to solve statistically complex optimization problems, particularly in pattern recognition. The ANN model represents a very crude approximation of the human brain with its neurons and synaptic network. To simulate learning, an AI technology called ‘Machine-Learning (ML)’ evolved, which uses ‘training data’ to build a model for decision making without being explicitly programmed to make those decisions. This concept is used, for example, to recognize an object or to respond to voice-activated questions. Based on these early successes, ML has further evolved into ‘Deep-Learning’ by adding more and more neural layers and new processing rules, improving the quality of machine intelligence in detecting patterns or making predictions based on deviations from a given set of data. The quality or accuracy of today’s ML applications tends to improve as the volume of labelled training data increases. However, labelling the data and checking its quality can be very time-consuming and costly. Moreover, ML applications so far lack the reasoning and common sense needed to reach human-level AI. Despite these shortcomings, ML helps to accelerate research in many domains, supporting the extraction of knowledge from experimental data.
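
The idea of building a model from labelled training data rather than from hand-written rules can be illustrated with a single artificial neuron, the perceptron. This is a deliberately minimal sketch and far simpler than the multi-layer networks used in deep learning; the task (learning the logical AND function), the function names and the parameter values are assumptions chosen for illustration.

```python
# Labelled training data for the logical AND function: (inputs, expected label).
TRAINING_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(data, epochs=20, learning_rate=1):
    """Perceptron learning rule: adjust the weights after each wrong prediction."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, label in data:
            error = label - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

weights, bias = train(TRAINING_DATA)
predictions = [predict(weights, bias, inputs) for inputs, _ in TRAINING_DATA]
print(predictions)  # [0, 0, 0, 1]
```

Nowhere in the code is the AND rule written down. The neuron arrives at suitable weights purely by correcting its mistakes on the labelled examples, which is the essence of the learning process described above.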

2020: With Neuroscience to a new culture of human-level AI

To overcome the limits of ML and deep learning, computational neuroscience has become a hot topic for re-engineering the incredible intellectual capacity of the human brain and for understanding how it learns and memorizes. Experimental brain research that maps brain activity in relation to behaviour, combined with computational simulation of biological processes, will bring us closer to human-level AI. But what happens when machines with human-level AI become reality? Let us assume that humanity succeeds in keeping in check the many perils AI might cause, such as cyberwar or the loss of human rights, while gains, for example in climate or health management, help us to advance humanity towards a better world. Following this line of thought, it becomes obvious that our values must be implemented in AI technology to reach the objective of advancing humanity beyond the prevailing knowledge and communication culture. Culture is an umbrella term which encompasses the social behaviour and norms found in human societies, as well as the knowledge, beliefs, arts, laws, customs, capabilities and habits of the individuals in these groups. Humans acquire culture through the learning processes of enculturation and socialization. Culture is the earliest form of intelligence outside our own minds that we humans created. Like the intelligence of a machine, culture can solve problems. An examination of our relationship to culture can provide insights into what our relationship to human-level AI could be like. Culture can do things we cannot do as individuals, like fostering collective action or making life easier by providing unspoken assumptions on which we can base our lives. Human-level AI augments humans with tools that support collective intelligence for solving problems, or provides intelligence as a personal service to master the increasing complexity of our technological age.
Mastering these challenges will set free human creativity, drawing on the fact that humans are more than just containers of intelligence. We have a body, we have senses, we have emotions and above all we can set the direction of our own evolution.

Conclusion

Humanity has gone through cultural shifts before, for example moving from an oral to a reading and writing culture. The timeline documented in this essay shows the intervals between such shifts shrinking from centuries to a single decade, which suggests that the cultural shift towards human-level AI is accelerating exponentially. This raises many issues as to how individuals can be motivated to adapt.

I can only hope that the advancements made in AI benefit the happiness of human beings, that all implications are well thought through and taken into account, and that ethical guidelines always accompany the process.