Crowdsourcing Picture Credit: Pngimage.net

Introduction: The community as the foundation of our society

Community building is the creation or enhancement of community among individuals within a regional area or with a common need or interest. “Community is something we do together. It’s not just a container,” says sociologist David Brain. Infrastructure such as roads, water, sewerage, electricity and housing provides the shell within which people live. It is within this shell that people interact, allowing them to sustain their livelihoods. These activities include, but are not limited to, education, health care, business, recreation, and spiritual celebration. Collective intelligence emerges when individual contributions are combined to become more than the sum of their parts, for purposes ranging from learning and innovation to decision-making. The exponential progress of science is outpacing our individual capacity to solve problems. Augmenting humans with intelligent machines, in a spirit of collaboration between the two, is therefore essential to ensure an even distribution of responsibilities and benefits in the current evolutionary, socioeconomic process.

From Collaboration to Collective Intelligence

Humans’ ability to collaborate to achieve otherwise inaccessible goals may be one of the main reasons for our success as a species. For mutually beneficial collaboration, individuals need cognitive mechanisms to coordinate actions with partners, and mechanisms to distribute the acquired resources in a way that incentivizes partners to keep collaborating. Major communication platforms such as Microsoft Teams or Slack share the ability to connect individuals so that they can exchange information, to provide administrative support for carrying out tasks, and to search for matching interests.

It has long been recognised that when groups of people come together to solve a problem, they can become more than the sum of their parts. The ‘wisdom of the crowd’ was acknowledged as far back as Ancient Greece, when Aristotle noted that many unremarkable men often make better collective judgments than great individuals. One of the most important principles for helping groups become more than the sum of their parts is diversity. Anita Woolley, a leading expert in organisational behaviour at Carnegie Mellon University, has demonstrated that gender diversity helps smaller groups to improve their problem-solving ability and that cognitive diversity is vital for creativity and learning in workplace teams. The principle also holds for larger-scale collective intelligence efforts, even though it may intuitively seem that diverse contributions make it harder to distinguish quality from quantity.

Collective intelligence covers a wide range of participatory methods, including crowdsourcing, open innovation, prediction markets, citizen science and deliberative democracy. Some of them rely on competition, while others are built on co-operation; some create a sense of community and teamwork, while others work by aggregating individual contributions or microtasks.
Academic research on Collective Intelligence is equally varied and draws on many different disciplines, including Social Science, Behavioural Psychology, Management Studies and Computer Science.
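The aggregation of individual contributions behind the ‘wisdom of the crowd’ can be illustrated with a minimal simulation. It assumes, purely for illustration, that each person’s estimate is the true value plus independent random noise; the function name and its parameters are hypothetical. Because the absolute value of an average never exceeds the average of the absolute values, the error of the averaged estimate can never be larger than the average individual error, and with independent errors it is usually far smaller.

```python
import random
import statistics
from typing import Tuple


def crowd_vs_individuals(true_value: float, n_people: int, noise: float,
                         seed: int = 0) -> Tuple[float, float]:
    """Return (error of the averaged crowd estimate, average error of individuals).

    Hypothetical model: each person reports the true value plus independent
    Gaussian noise with standard deviation `noise`.
    """
    rng = random.Random(seed)
    estimates = [true_value + rng.gauss(0.0, noise) for _ in range(n_people)]

    # The crowd's judgement: a simple average of all individual estimates.
    crowd_error = abs(statistics.mean(estimates) - true_value)

    # How far off an individual is, on average.
    individual_error = statistics.mean(abs(e - true_value) for e in estimates)
    return crowd_error, individual_error


crowd_error, individual_error = crowd_vs_individuals(true_value=100.0, n_people=500, noise=20.0)
print(f"crowd error: {crowd_error:.2f}, average individual error: {individual_error:.2f}")
```

The assumption of independent errors does the heavy lifting here: if everyone shares the same bias, averaging cannot remove it, which is one reason why diversity matters.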

From Collective Intelligence to Collective Artificial Intelligence (CAI)

Recent advances in AI can make it much easier to harness this collective wisdom, making us more effective at our jobs and better able to solve pressing social challenges. Collaboration usually improves our work, but keeping a team focused on solving a specific problem is not easy. The most important factor affecting how collectively intelligent a group is, and hence how effectively it can apply AI-machines, is the degree of coordination among its members, says Woolley. Smart tools can be a boon in this area, which is why Woolley is working with colleagues to develop AI-powered coaches that can track group members and provide prompts to help them work better as a team. “We can have some very smart individuals working on different components in isolation, but if we don’t have coordination it is really hard to make any headway,” says Woolley. Combining AI tools with human teams requires careful design to avoid unintended consequences. “We are still at an early stage of understanding and being able to navigate the individual reactions in terms of issues of trust and how it affects their own sense of agency. It is still really difficult to build AI with social intelligence because machines continue to struggle to pick up the nuanced social cues that guide so much of group dynamics,” she says. It is also evident from her research that these kinds of systems only work if humans truly trust AI decision-making and if users receive only gentle nudges from the system on how to follow through in solving a problem. “As soon as it is heavy-handed, people are looking for ways to disable it,” says Woolley.

Human-Level-AI to enhance CAI-platforms

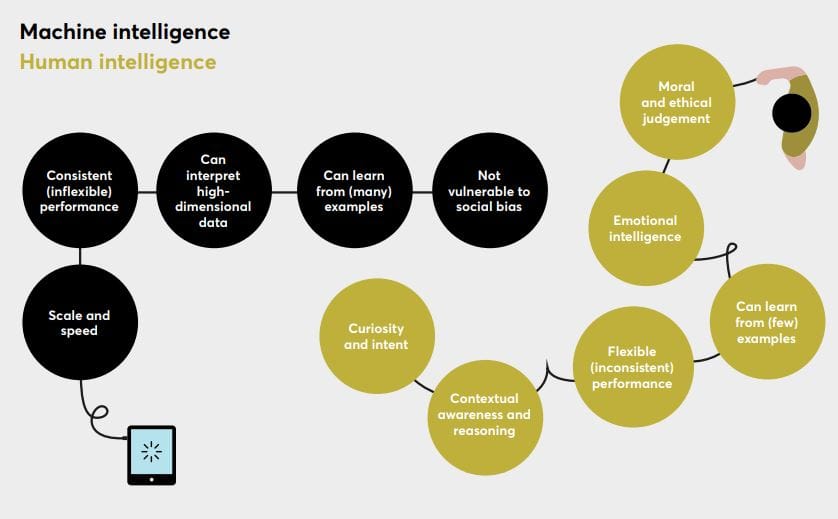

The goal of human-level-AI is to bring the intrinsic capabilities of humans and those of AI-machines together on an equal footing, so that each augments the other. The human capacity for contextual reasoning, for example, augments the machine, while the machine’s capacity to interpret high-dimensional data augments the human, as depicted in the graph above (source: Nesta Foundation).

As the individual intellectual capacity of humans is limited vis-à-vis the accelerating increase in scientific and technological complexity, the application of collective intelligence augmented by AI is a major driver of future value generation. One challenge of CAI is to optimize the interface between humans and intelligent machines. To realise the potential of generating value from new knowledge, several CAI-platforms have been launched in areas such as communal farming, community behavioural analysis and decision-making support. Common to these platforms is the implementation of AI building blocks for plotting causal connections, mapping complex relations and visually modelling complexity. The main goal behind these efforts is to generate new knowledge that, without the application of AI, would remain undiscovered.

Designing CAI-platforms

Designing a CAI-platform is an ambitious task. It needs to bring together diverse human talents working towards a common goal, while accommodating the fact that AI technology is a moving target. Ongoing advances in neuroscience, which provide new insights into the cognitive functions of the human brain, mean that today’s AI will not be the AI of six months from now. To remain sustainable over a horizon of several years, a CAI-platform needs a highly modular architecture that leaves room for adjustment as experience is gained from operating the platform. The following provides a brief list of design considerations:

Defining the purpose of the platform:

The purpose of a CAI-platform is to generate new knowledge from the information exchanged through the platform and, as a result, to provide value to the individuals and organisations driving it. The purpose is best described by defining the value that the community driving the platform expects to receive. In farming, for example, one such value is knowledge about how to optimize the use of fertilizers based on the location of the farm and its weather conditions. This ‘give-and-take’ of information and knowledge touches on issues of intellectual property, which need to be settled together with the questions of which business model the platform is designed and operated under and how its costs are reimbursed.

Making sense of data:

Today’s AI applications largely depend on the availability and quality of huge data sets to train neural networks with supervised learning algorithms. In future, AI will increasingly draw on results from neuroscience research into how the human brain learns and memorises. Learning like a child, for example learning to distinguish a cat from a dog, requires the development of unsupervised learning algorithms. Such algorithms will greatly facilitate the application of CAI, because working with smaller data sets will significantly reduce the cost of training neural networks.
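To make the distinction between supervised and unsupervised learning concrete, here is a minimal sketch of k-means clustering, one of the simplest unsupervised algorithms. It is a stand-in chosen for illustration, not the neuroscience-inspired learning anticipated above. Nobody tells the algorithm which animal is which: given unlabelled measurements, it finds on its own that the data fall into two groups. The two measurements per animal and their values are invented, and the function name is hypothetical.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def k_means(points: List[Point], k: int, iterations: int = 20, seed: int = 0) -> List[int]:
    """Cluster unlabelled points into k groups; returns one cluster index per point."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k randomly chosen data points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: every point joins the cluster of its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return labels


# Two invented measurements per animal; no labels saying "cat" or "dog" are given.
animals = [(4.0, 6.5), (3.5, 7.0), (4.5, 6.0), (30.0, 12.0), (28.0, 11.0), (32.0, 13.5)]
print(k_means(animals, k=2))
```

The algorithm only finds the groups; a human still has to recognise that one cluster corresponds to cats and the other to dogs, a small instance of humans and machines complementing each other.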

Setting the right rules for exchanging information and skills:

Realising the benefits of a group’s diversity is only possible when there are effective mechanisms for people to access information and skills. The rules governing how group members interact with one another affect how easily ideas spread and take hold in the group. The structure of a community network can play an important role in determining whether a group is able to exchange information efficiently. CAI relies on shared, open repositories of knowledge, both those generated by AI for specific problem-solving and those that are publicly available as a source of collective memory.
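The point that network structure determines how efficiently a group exchanges information can be illustrated with a small sketch. It assumes, for simplicity, that every informed member passes a piece of information to all direct contacts once per round, and counts the rounds until everyone knows it (a breadth-first search). The ring-shaped team, the two extra cross-links and all function names are hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, List, Tuple

Network = Dict[int, List[int]]


def rounds_to_inform_everyone(network: Network, source: int) -> int:
    """Rounds until information starting at `source` reaches every member,
    assuming each informed member tells all direct contacts once per round."""
    distance = {source: 0}
    queue = deque([source])
    while queue:
        member = queue.popleft()
        for contact in network[member]:
            if contact not in distance:
                distance[contact] = distance[member] + 1
                queue.append(contact)
    if len(distance) < len(network):
        raise ValueError("some members can never be reached")
    return max(distance.values())


def ring(n: int) -> Network:
    """Each member only talks to their two immediate neighbours."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def add_shortcuts(network: Network, pairs: Iterable[Tuple[int, int]]) -> Network:
    """Return a copy of the network with extra two-way links (e.g. cross-team contacts)."""
    linked = {member: list(contacts) for member, contacts in network.items()}
    for a, b in pairs:
        linked[a].append(b)
        linked[b].append(a)
    return linked


team = ring(20)
connected_team = add_shortcuts(team, [(0, 10), (5, 15)])
print(rounds_to_inform_everyone(team, source=0))            # → 10
print(rounds_to_inform_everyone(connected_team, source=0))  # → 5
```

In this toy model, two extra links halve the number of rounds needed. It deliberately says nothing about whether ideas take hold once they arrive, only about how far they have to travel.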

Cultivating trust and new ways of working with machines:

The application of CAI requires humans to place a high degree of trust in the functionality of AI-machines. In their 2020 paper ‘How Humans Judge Machines’, Cesar Hidalgo and colleagues describe how they used large-scale online experiments to investigate how people judge responsibility in decision-making depending on whether people or machines are involved. The study found that participants judged human decisions by their intention, whereas decisions made by AI-machines were judged by their outcome, in particular the harm caused. Group decision-making scenarios are more complex, because individuals are concerned about carrying responsibility for collective decisions. AI-machines could help to induce a feeling of shared ownership and consensus about a decision. However, to take full advantage of these possibilities, we need to better understand the variety of individuals’ attitudes towards AI. Automation bias, a tendency to place excessive faith in a machine’s ability, can sometimes cause professionals to dismiss their own expertise or to use their expertise merely as a source of validation. Interactions between people and AI are complex, and more research is required to align our existing theories of trust and responsibility with them. A human-level-AI mindset, which augments humans with intelligent machines while leaving room for moral and ethical judgement in decision-making, moves us in the right direction towards building trust. However, as experience shows, the removal of human bias seems to remain one of the most difficult challenges. Recent advances in applying AI to uncover bias in data sets or algorithms might ease that concern.

Conclusion

As AI matures and eventually turns cognitive intelligence into a commodity, integrating human assets such as curiosity, creativity and moral judgement is a must if we are to realize the value that can be generated with CAI-platforms. In our technology-driven society, it might be advantageous to accept that semantic understanding and common-sense reasoning mirror the experience of human existence and cannot be replaced by intelligent machines for a long time to come. Hence, strengthening human assets is a prerequisite for realizing the full benefit of CAI-platforms.