Plato and Aristotle debating whether knowledge comes from experience or above

Introduction

In a recent interview published by Forbes Magazine, Christian Voegtlin, associate professor in corporate social responsibility at Audencia Business School in France, makes the point that some Silicon Valley-based companies, including search engine giant Google, have started to employ in-house philosophers. Others, such as the instant-messaging company Skype, use the services of philosophical counsellors to engage teams of managers with philosophical questions related to their daily business. These practical philosophers are gradually entering the business world, where they are employed as de facto “chief philosophy officers” (CPOs). The role appears to be a mixture of consultant, life coach and strategist.

The idea behind having a CPO is that the position could be helpful in a business environment that is changing at unprecedented speed.

Philosophy can help to provide purpose and guidance by tackling fundamental questions about the meaning of life. It is also important for answering questions about how we live together and treat others. CPOs can be important mediators who help overburdened managers or entrepreneurs to put things into perspective and step back from daily business so that they can see the bigger picture. They can also guide innovative start-ups in evaluating business goals. Moreover, philosophical thinking can help technological innovators to define the boundaries of their innovations, from privacy rights to the humanistic values that should be built into artificial intelligence.

First experiences with CPOs

“Philosophers arrive on the scene at the moment when bullshit can no longer be tolerated,” says Andrew Taggart, a philosophical counsellor and coach. “We articulate that bullshit and stop it from happening. And there is just a whole lot of bullshit in business today.” He cites the rise of hackers, programming “ninjas,” and thought leaders whose job identities are invented or incoherent. While psychologists take a therapeutic approach to individual mental problems, philosophical counsellors identify and dispel illusions about life through logic and reason. In Silicon Valley, however, philosophy remains a largely behind-the-scenes pursuit. Stoicism, perhaps the most popular school of thought among start-up founders, has only a handful of public adherents. ‘A Guide to the Good Life: The Ancient Art of Stoic Joy’, written by philosophy professor Bill Irvine, is popular among San Francisco entrepreneurs as a guide to dealing with the challenges of start-up life. Irvine understands the appeal. “Stoicism was invented to be useful to ordinary people,” he says. “It is a philosophy about defining what counts as a good life.” Silicon Valley likes to see itself as being on a similar mission. Google offers all its employees free classes through its “Search Inside Yourself” initiative, now an independent foundation devoted to encouraging focus, self-awareness, and resilience to “create a better world for themselves and others.” But Scott Berkun, a former Microsoft manager and philosophy major who has written several business books, says philosophy’s lessons are lost on most people in Silicon Valley. “If you were to put Socrates in a room during a pitch session, I think he would be dismayed at so many young people investing their time in ways that do not make the world or themselves any better,” he says.

AI and Philosophy

Artificial intelligence has close connections with philosophy because the two share several concepts, such as intelligence, consciousness, epistemology, and even free will. Philosophers staked out some of the fundamental ideas of AI, but the leap to a formal science required a level of mathematical formalization in three fundamental areas: logic, computation and probability. Philosophical questions become especially relevant to AI when human-level ‘Artificial General Intelligence (AGI)’ is discussed. Two opposing philosophical theories of how knowledge is created give a simple yet far-reaching account of where AI might be headed:

The theory of induction

We learn from observation. This is called inductive reasoning, or induction for short: we observe some phenomena and derive general principles from them to form a theory. Machine learning, arguably the most important domain in AI today, is inductive: knowledge is extracted from observations. Induction is a principle of science and the prevailing theory of knowledge creation. On this view, given enough data, all the knowledge a system needs can be acquired through learning. The problem is that induction is prone to producing bad knowledge when the data are insufficient or of poor quality. Nevertheless, induction remains the prevailing basis of AI research, with common-sense reasoning one of the major obstacles on the way to AGI.
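The weakness of induction can be made concrete with a minimal Python sketch of naive enumerative induction. The function name `induce_rules` and the swan and raven observations are hypothetical illustrations, not taken from any real system; actual machine learning induces statistical models rather than exceptionless rules, but the underlying limitation is the same: the induced theory is only as good as the sample it was drawn from.

```python
from collections import defaultdict


def induce_rules(observations):
    """Naive enumerative induction: from (kind, property) observations,
    generalise 'every <kind> has <property>' whenever all observations
    of that kind agree. Kinds with conflicting observations yield no rule."""
    seen = defaultdict(set)
    for kind, prop in observations:
        seen[kind].add(prop)
    return {kind: next(iter(props))
            for kind, props in seen.items() if len(props) == 1}


# Hypothetical data: a biased sample, e.g. swans observed only in Europe.
european_sample = [("swan", "white")] * 50 + [("raven", "black")] * 20
print(induce_rules(european_sample))  # {'swan': 'white', 'raven': 'black'}

# One more observation from elsewhere overturns the induced "law" about swans.
broader_sample = european_sample + [("swan", "black")]
print(induce_rules(broader_sample))   # {'raven': 'black'}
```

Fifty agreeing observations did not make the swan rule true; they only made the biased sample look convincing.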

The theory of the power of explanations

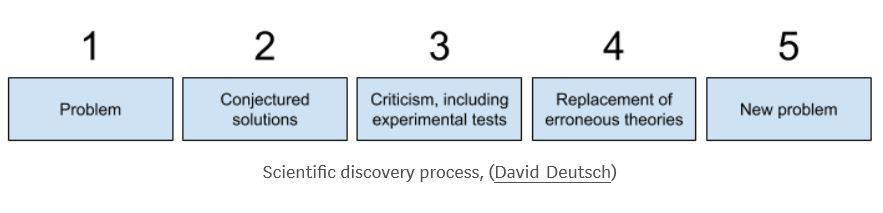

Karl Popper is one of the most influential thinkers in the philosophy of science. His solution to the problem of induction is that knowledge is in fact not derived from experience. While experience and experimentation play an important role in knowledge creation, his emphasis is on problems: we propose conjectures to solve them and then subject those conjectures to criticism. Experimentation should be directed at finding evidence that refutes a theory, a process Popper calls falsification. Although theories can never be conclusively justified, they may be corroborated if they survive genuine attempts at falsification. The figure above illustrates this theory.
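The asymmetry Popper describes, where a single counterexample refutes a theory while no number of passed tests proves it, can be sketched in a few lines of Python. The names `try_to_falsify`, `Verdict` and `all_swans_white` are hypothetical, chosen for illustration; the sketch only checks the observations it is given, whereas Popper would direct experiments toward the cases most likely to refute the conjecture.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class Verdict:
    refuted: bool
    tests_survived: int
    counterexample: Optional[object] = None


def try_to_falsify(conjecture: Callable[[object], bool],
                   observations: Iterable[object]) -> Verdict:
    """Test a conjecture against observations, stopping at the first
    counterexample. Surviving every test only corroborates the conjecture;
    it never proves it."""
    survived = 0
    for obs in observations:
        if not conjecture(obs):
            # One genuine counterexample is enough to refute the conjecture.
            return Verdict(refuted=True, tests_survived=survived, counterexample=obs)
        survived += 1
    return Verdict(refuted=False, tests_survived=survived)


def all_swans_white(swan_colour: str) -> bool:
    """Hypothetical conjecture: every swan is white."""
    return swan_colour == "white"


print(try_to_falsify(all_swans_white, ["white"] * 100))
# Verdict(refuted=False, tests_survived=100, counterexample=None)
print(try_to_falsify(all_swans_white, ["white"] * 100 + ["black"]))
# Verdict(refuted=True, tests_survived=100, counterexample='black')
```

Note that the unrefuted verdict reports how many tests the conjecture survived rather than declaring it true, which mirrors the difference between corroboration and justification.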

Time will tell how these two opposing philosophical theories will influence the ongoing development of AI. New findings from brain research might provide further input to this debate.

AI and its current limits in dealing with common-sense knowledge (CSK)

Since the earliest days of artificial intelligence, common-sense acquisition and reasoning have been recognized as central challenges. However, progress in this area has been frustratingly slow. The importance of real-world knowledge for natural language processing and for disambiguation of all kinds was discussed as early as 1960 in the context of machine translation. Almost without exception, current programs that process language succeed only to the extent that their tasks can be carried out purely by manipulating individual words or short phrases, without any deeper understanding. Common sense is sidestepped in favour of short-term results, yet it is hard to see how human-level understanding can be achieved without giving it greater attention.
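What "manipulating individual words" leaves out can be shown with a bag-of-words representation, one common word-level technique chosen here purely for illustration (modern systems use richer representations). The helper `bag_of_words` is hypothetical. Because it keeps only word counts, it cannot tell who did what to whom:

```python
from collections import Counter


def bag_of_words(sentence: str) -> Counter:
    """Represent a sentence purely as word counts, discarding word order
    and therefore any notion of who acts on whom."""
    return Counter(sentence.lower().split())


a = bag_of_words("The dog bit the man")
b = bag_of_words("The man bit the dog")
print(a == b)  # True: identical representations for different events
```

A reader with common sense knows these sentences describe very different, and differently newsworthy, events; the word-level representation treats them as the same.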

The CSK problem is to create a database that contains the general knowledge most individuals are expected to have, represented in a way that is accessible to artificial intelligence programs that use natural language. Although intelligent machines can defeat the best human Go players, drive cars, and answer natural-language queries about the weather or other specific topics, they do not actually understand what they are doing. Their success has been achieved primarily through multi-layer artificial neural networks (deep learning), which achieve their best results by consuming vast amounts of labelled training data in combination with so-called ‘back-propagation’, an algorithm that computes how much each weight in the network contributes to the output error so that the weights can be adjusted during training. Common-sense knowledge differs from encyclopaedic knowledge in that it deals with general knowledge rather than the details of specific entities. Most conventional knowledge bases contain millions of facts about entities, such as people or geopolitical entities, but fail to provide fundamental knowledge, such as the notion that a baby is probably too young to have a doctoral degree in physics. The challenges in acquiring CSK include its elusiveness and its context-dependence. Common sense is elusive because it is rarely expressed explicitly. Context plays an important role in determining whether a piece of common sense is correct, and this must be accounted for when acquiring it. The trouble with common-sense applications is that one cannot experiment with them until one has a large common-sense database.
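The baby example can be turned into a toy Python sketch of the difference between encyclopaedic and common-sense knowledge. Instead of facts about specific people, the tables below hold generic facts about categories, and a default rule uses them. All names and ages (`AGE_RANGE`, `MIN_TYPICAL_AGE`, `plausible`) are hypothetical, rough illustrative values, not data from any real knowledge base.

```python
# Hypothetical, hand-entered common-sense facts. The ages are rough
# illustrative values, not data from any real knowledge base.
AGE_RANGE = {"baby": (0, 2), "teenager": (13, 19), "adult": (18, 120)}
MIN_TYPICAL_AGE = {"doctoral degree in physics": 22, "driving licence": 16}


def plausible(life_stage: str, attribute: str) -> bool:
    """Default (defeasible) check: could someone at this life stage
    typically have this attribute? Unknown terms are treated as plausible,
    because absence of knowledge is not evidence of implausibility."""
    if life_stage not in AGE_RANGE or attribute not in MIN_TYPICAL_AGE:
        return True
    _, max_age = AGE_RANGE[life_stage]
    return max_age >= MIN_TYPICAL_AGE[attribute]


print(plausible("baby", "doctoral degree in physics"))   # False
print(plausible("adult", "doctoral degree in physics"))  # True
print(plausible("baby", "favourite toy"))                # True (no knowledge)
```

The rule captures "probably" only as a default; it would need to be overridden in contexts such as fiction, which is exactly the context-dependence that makes CSK hard to acquire.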

Efforts to build common-sense databases

In 1985 Doug Lenat, at that time a young professor at Stanford, started to build a handcrafted database called Cyc to classify and store common-sense knowledge. Since then, Lenat and his colleagues have spent years feeding common-sense knowledge into Cyc. Thirty-four years and some 2,000 man-years of effort later, building a common-sense application with Cyc still requires significant engineering. To overcome this problem, researchers have started to apply data mining to extract common-sense relationships from textual analysis of specific scenarios, combined with neural-network labelling. For example, ‘Open Mind Common Sense (OMCS)’, founded in 1999 at MIT, is an artificial intelligence project whose goal is to build and use a large common-sense knowledge base from the contributions of many thousands of people across the Web. The database can be used to build specific applications such as ARIA, which manages people’s personal photos based on object classifications, spatial relations, object purposes, causal relations between events, and emotions. In recent years OMCS has been enriched with knowledge from other crowdsourced resources to build ConceptNet. This knowledge base consists of approximately 28 million statements, with full support for 10 languages, and serves as a resource that helps AI applications understand the meanings of the words people use. In February 2018, the Allen Institute for Artificial Intelligence announced a major, well-funded research project to advance machine common sense, including ATOMIC, an atlas for ‘If-Then’ reasoning built as a knowledge graph. We can expect further announcements as Google and others enter the competition for common-sense AI applications.
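Resources such as ConceptNet and ATOMIC store knowledge as (head, relation, tail) assertions. The Python sketch below is a toy in-memory store in that spirit; the class `CommonSenseGraph` and every assertion in it are hypothetical. The relation names are modelled on ones these resources use (ConceptNet's `UsedFor` and `AtLocation`, ATOMIC's `xEffect` with its `PersonX` placeholder), but this is not either project's API or data format.

```python
from collections import defaultdict


class CommonSenseGraph:
    """A toy store of (head, relation, tail) common-sense assertions."""

    def __init__(self) -> None:
        # Index tails by (head, relation) so lookups are a single dict access.
        self._edges = defaultdict(list)

    def add(self, head: str, relation: str, tail: str) -> None:
        self._edges[(head, relation)].append(tail)

    def query(self, head: str, relation: str) -> list[str]:
        # .get() avoids creating empty entries for unknown keys.
        return list(self._edges.get((head, relation), []))


kb = CommonSenseGraph()
# Hypothetical ConceptNet-style assertions about an everyday object.
kb.add("umbrella", "UsedFor", "staying dry")
kb.add("umbrella", "AtLocation", "closet")
# Hypothetical ATOMIC-style if-then assertion about an event.
kb.add("PersonX forgets their umbrella", "xEffect", "gets wet")

print(kb.query("umbrella", "UsedFor"))                        # ['staying dry']
print(kb.query("PersonX forgets their umbrella", "xEffect"))  # ['gets wet']
print(kb.query("umbrella", "IsA"))                            # []
```

Real resources attach further metadata to each assertion, such as confidence weights and provenance, which a production store would need to keep alongside the triple.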

Conclusion: Job Profile of the future CPO

The main responsibility of a CPO is to support the interface between human values and skills and intelligent machines in order to realize the full potential of human-machine augmentation. To reach this goal, he must motivate team members to ask questions that lie outside the ‘corporate box’. To support this activity, the CPO is actively engaged in building and maintaining a common-sense database focused on the ‘outside-world’ issues the company is confronted with. Equipped with the corresponding common-sense reasoning tools, the CPO advises the company’s board and management team on strategic issues as well as on leadership and personal development. To carry out these tasks, a CPO ideally brings the following background:

- 5 years of experience working in various functions at an AI-focused company

- Technical education with a BS in AI or information technology

- Humanities education with an MS in Philosophy

Alternatively, would it not be better to expose future managers to philosophical thinking during their education in our business schools? This would strengthen our future business leaders’ capacity for personal inquiry, logic and reasoning, and could give them purpose and direction at a stage when their identity is not yet fully formed. Whichever path is chosen, the mindset of a CPO is required to cope successfully with the accelerating changes that AI is bringing to corporate development.

Yes, it would be very desirable if managers, not only those of AI companies, were exposed to ethical and philosophical thinking during their education and training...

OMCS: even my dog has grasped common-sense physics. She can’t throw balls for me (as I do for her), so when she thinks it’s my turn to run after the ball, she lets it roll downhill in my direction...