Avatar of Nikhil Jain, CEO & Co-founder of ObEN

Introduction

Today’s natural language systems like Siri, Cortana, Alexa and Google Now only understand commands, not conversations. The problem is that people cannot express complex ideas with commands; a dialog is needed to communicate effectively. Conversational AI interfaces that apply Natural Language Processing (NLP) will enable people to communicate with computers naturally and to hear a sympathetic, human-like voice in response. By combining NLP with a 3-D visual representation in the form of an avatar, an expert can share knowledge on demand without being physically present. These virtual avatar-experts can be contacted, both visually and conversationally, anytime and from anywhere to engage in an advisory discussion. Their behavior and speech are assembled in real time in response to the questions asked. As the technology improves, we will no longer be able to tell whether the expert communicating with us via the internet is ‘real’ or a virtual copy.

Advances in Natural Language Processing

Natural language refers to the way humans use words to communicate ideas and feelings, and to how such communication is processed and understood. Most current theories hold that this processing is carried out entirely by and inside the brain. Language is considered one of the most basic abilities of the human species and is vital for the communication of knowledge. Natural Language Processing (NLP) is the branch of computer science that tries to give machines this ability. The Turing test, proposed by Alan Turing in 1950, is a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. In the test, an evaluator holds a general conversation via text chat and has to decide whether the respondent is a human or a machine. So far, no machine is generally accepted to have passed the test. However, enormous research efforts are under way to crack one of the last frontiers of artificial intelligence.

In a significant step toward improving the conversational ability of intelligent systems, Google’s research division has announced a pair of new artificial intelligence experiments that let web users work with semantics and natural language processing. The first of the two publicly available experiments is called ‘Talk to Books’. It lets you converse with an algorithm trained with machine learning that answers questions with relevant passages from human-written text. You can make a statement or ask a question, and ‘Talk to Books’ finds sentences in books that relate to the subject without relying on keyword matching. Asking a question like “why is the sky blue?” returns a number of different answers, sourced from books on the subject. Unlike standard Google Search, where you have to click a link and read through an article or webpage yourself, the ‘Talk to Books’ algorithm does that work for you.

“The models driving this experience were trained on a billion conversation-like pairs of sentences, learning to identify what a good response might look like,” Ray Kurzweil, director of engineering at Google Research, explains. “Once you ask your question (or make a statement), the tool searches all the sentences in over 100,000 books to find the ones that respond to your input based on semantic meaning at the sentence level; there are no predefined rules bounding the relationship between what you put in and the results you get.” ‘Talk to Books’ is an inspiring tool for exploring written text in a semantically natural way. It also gives us a glimpse of what future interfaces might look like when AI is sophisticated enough to handle almost any query we submit in real time.
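To make the idea of matching “based on semantic meaning” more concrete, here is a minimal Python sketch that ranks sentences by the cosine similarity between vectors standing for their meaning. It is an illustration under stated assumptions, not how ‘Talk to Books’ is implemented: the three-dimensional vectors are hand-picked and hypothetical, whereas the real tool relies on models trained on conversation-like sentence pairs. The names `SENTENCES`, `cosine` and `rank` are invented for this example.

```python
import math

# Hypothetical "meaning" vectors. A real system learns such embeddings with a
# trained model (typically with hundreds of dimensions); these are hand-picked.
SENTENCES = {
    "Sunlight is scattered by air molecules, and blue light scatters most.": [0.9, 0.1, 0.0],
    "The ocean reflects the colour of the sky above it.": [0.6, 0.3, 0.1],
    "Stock markets closed higher on Friday.": [0.0, 0.1, 0.9],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 if orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank(query_vec: list[float], sentences: dict[str, list[float]], top_k: int = 2) -> list[str]:
    """Return the top_k sentences whose vectors are most similar to the query vector."""
    scored = sorted(sentences.items(), key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in scored[:top_k]]


# Hypothetical vector standing in for the query "why is the sky blue?"
query = [0.85, 0.2, 0.05]
for sentence in rank(query, SENTENCES):
    print(sentence)
```

The ranking is decided by the vectors alone: a sentence does not need to share any particular word with the query to score highly, which is the property the quote contrasts with keyword matching.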

Extending NLP to 3-D Avatars: the ‘Uncanny Valley’

During a conversation we use several sensory channels, such as sight, touch, hearing, smell and taste, but we rely most heavily on visual input. According to one neurological estimate, roughly 30% of the neurons in our brain are devoted to sight, whereas only 8% relate to touch and 2% to hearing. Moreover, more than 60% of the brain is involved with vision in some way, including neurons dedicated to vision plus touch, vision plus motor control, vision plus attention, and vision plus spatial navigation. Consciously or not, the visual cues available to us help us interact with others more smoothly and effectively. Similarly, AI assistants with a visible body (avatars) should be able to convey much more information than current voice-only systems.

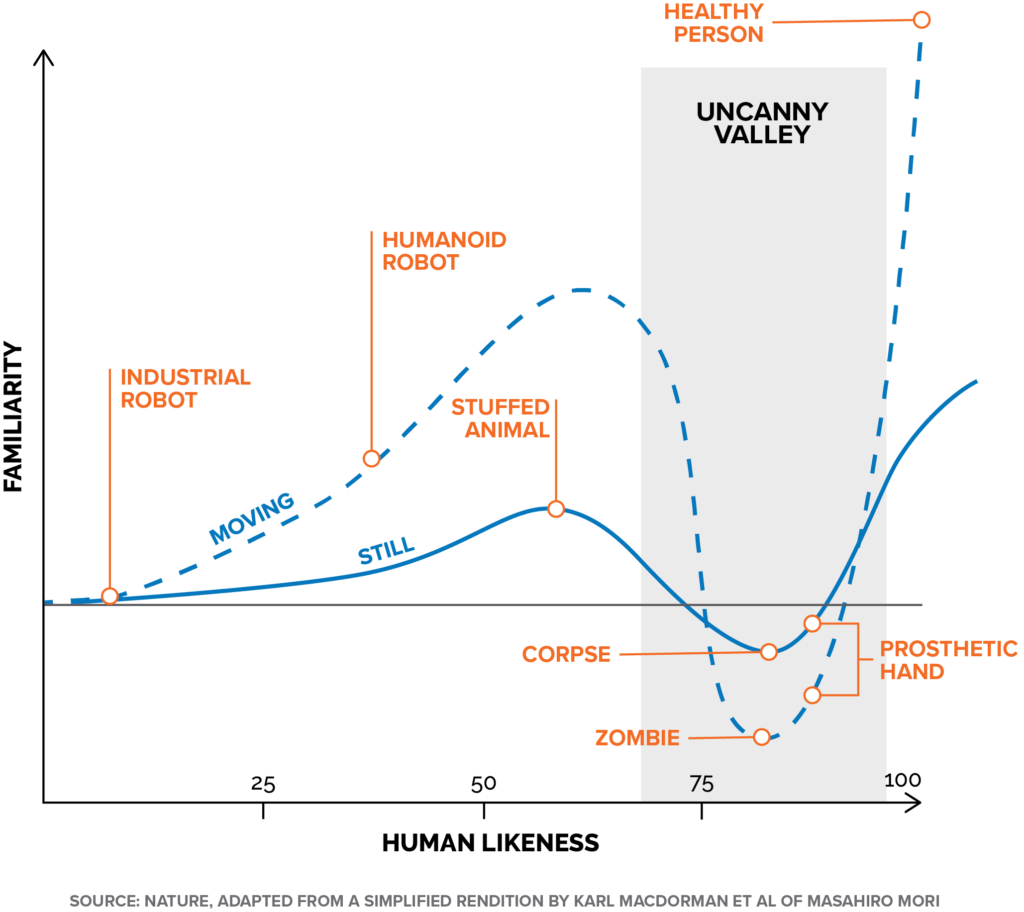

One problem to overcome is a phenomenon known as the ‘Uncanny Valley’, which causes observers to dislike artificial representations that look almost, but not quite, human. The ‘Uncanny Valley’ hypothesis, proposed by the roboticist Masahiro Mori, describes the negative emotional reaction of humans towards figures or prosthetics that are nearly, but not fully, human-like. The term comes from the shape of the curve in the diagram, which plots affinity against human-like appearance. Up to a point, the more human-like characteristics a figure has, the more likely it is to be accepted. Beyond that point, however, the similarity to humans has the reverse effect, and affinity rapidly turns into aversion, eeriness, or repulsion: the figure appears uncanny to its human observer. Only a figure that is indistinguishable from a real human, on the far side of the ‘Uncanny Valley’, is fully accepted by observers.
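Mori described this curve qualitatively rather than with an equation. The Python sketch below uses a made-up toy formula (a linear rise minus a narrow Gaussian dip) purely to reproduce the shape described above: affinity grows with human likeness, collapses into negative values shortly before full realism, and recovers for a figure indistinguishable from a human. The function name `affinity` and all parameter values are hypothetical.

```python
import math


def affinity(h: float, depth: float = 1.0, center: float = 0.85, width: float = 0.05) -> float:
    """Toy affinity for human likeness h in [0, 1] (hypothetical formula).

    The linear term models 'more human-like, more accepted'; the Gaussian dip
    centred near full realism models the valley. Negative values mean eeriness.
    """
    return h - depth * math.exp(-(((h - center) / width) ** 2))


# Sample human likeness from 0.000 to 1.000 in steps of 0.001.
grid = [i / 1000 for i in range(1001)]
valley = min(grid, key=affinity)                           # bottom of the valley
peak = max((h for h in grid if h < valley), key=affinity)  # highest point before it

print(f"peak before valley: h={peak:.3f}, affinity={affinity(peak):.3f}")
print(f"valley bottom:      h={valley:.3f}, affinity={affinity(valley):.3f}")
print(f"real human:         h=1.000, affinity={affinity(1.0):.3f}")
```

Changing `center`, `width` or `depth` moves, widens or deepens the valley; none of these values is taken from Mori or from any measurement.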

To overcome the ‘Uncanny Valley’ effect, several studies have examined the role of facial features, and in particular of eye contact, in the acceptance of artificial faces. In a study published in 2015, Valentin Schwind and Solveigh Jäger from the University of Stuttgart investigated how eye movement behavior differs depending on the type of figure observed. Using eye tracking, they asked how relevant eye contact is for the categorization of negatively rated characters, and whether observers scan such characters in fundamentally different ways than photos of ordinary people. They concluded that the acceptance of, and interaction with, human-like artificial figures can be significantly improved by more credible eyes and eye regions.

Project PAI (Personal Artificial Intelligence)

Project PAI is an open-source initiative dedicated to promoting and developing visual intelligent assistants, represented as personalized 3-D avatars. The initiative, led by ObEN, a start-up technology company specializing in the development of 3-D avatars, is funded by well-known high-tech players such as Tencent and SoftBank. In their view, we have entered a new era of artificial intelligence in which we can create intelligent avatars from our digital profiles and our knowledge base. Experts and consultants own an enormous volume of digital assets that can be refined with artificial intelligence to support a new kind of Artificial Intelligence Economy. On this humanistic platform, every person’s AI asset is a node on a network. These nodes cooperate with each other to generate value, for which they are compensated. The higher the contribution to the network, the greater the compensation.
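The statement “the higher the contribution, the greater the compensation” can be read as a proportional split of a reward pool. The following Python sketch shows that reading; it is an assumption made for illustration, not Project PAI’s actual reward mechanism, and the function `split_rewards`, the node names and the contribution units are hypothetical. Exact fractions are used so that the shares always add up to the pool.

```python
from fractions import Fraction


def split_rewards(pool: int, contributions: dict[str, int]) -> dict[str, Fraction]:
    """Split a reward pool among network nodes in proportion to their contribution.

    Hypothetical mechanism: a node contributing twice as much receives twice
    the share. Fractions keep the arithmetic exact.
    """
    if any(c < 0 for c in contributions.values()):
        raise ValueError("contributions must be non-negative")
    total = sum(contributions.values())
    if total == 0:
        # Nobody contributed, so nobody is paid.
        return {node: Fraction(0) for node in contributions}
    return {node: Fraction(pool * c, total) for node, c in contributions.items()}


shares = split_rewards(100, {"alice_pai": 3, "bob_pai": 1})
for node, share in shares.items():
    print(node, float(share))
```

A real network would also have to measure “contribution” in the first place, which is the hard part and is not modeled here.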

The mission of Project PAI is to enable any person in the world to create their own unique PAI that can perform activities on their behalf. It acts as your direct and verified representative in the digital world. It shares your voice, your appearance, and your personality. Your PAI will be secured using the PAI blockchain and created using biometric data and online behavior profiles. You own your PAI, you control what it does, and you are fairly compensated for the interactions your PAI has with other entities. It is an intelligent copy of you that can speak in your voice, even in foreign languages that you don’t know. You can send it to meetings to give presentations, or simply have it listen and take summary notes while you focus on more important tasks. Think of your PAI as your digital twin, an intelligent avatar that knows what you like. It helps you accomplish tasks or generates income from your expertise.
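A blockchain stores records in blocks that are linked by cryptographic hashes, so that changing an earlier record becomes detectable. The Python sketch below shows only that hash-linking idea, using the standard `hashlib` module; it leaves out everything else a real blockchain needs (distribution across nodes, consensus, signatures) and makes no claim about how the PAI blockchain actually works. The functions `block_hash`, `append` and `verify` and the example records are hypothetical.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain: list[dict], data: str) -> None:
    """Add a record whose hash also covers the hash of the previous block."""
    prev = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append({"index": index, "data": data, "prev": prev,
                  "hash": block_hash(index, data, prev)})


def verify(chain: list[dict]) -> bool:
    """Recompute every hash and link; any edited record breaks the chain."""
    prev = GENESIS_PREV
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
        prev = block["hash"]
    return True


chain: list[dict] = []
append(chain, "PAI created by its owner")
append(chain, "owner allowed the PAI to attend meetings")
print(verify(chain))                         # True: untouched chain
chain[0]["data"] = "PAI created by someone else"
print(verify(chain))                         # False: tampering detected
```

Tamper evidence is all this gives; who may append records, and how copies held by different parties are kept in agreement, is what an actual blockchain protocol adds on top.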

Conclusion

Project PAI promotes the distribution of intelligent avatars as a means of virtual social interaction via the internet. Time will tell whether humans really seek this kind of encounter. Securing it with blockchain is certainly a worthwhile effort in response to the Facebook scandal and Facebook’s so far unchallenged business model of monetizing individuals’ private data without compensating them. One effect of the scandal might well be that people turn more to personal, face-to-face encounters, reducing the urge to share their life experiences with everyone via the internet.

From a business point of view, however, intelligent avatars that act as virtual copies of experts and conversationally share their knowledge with customers have enormous potential. Producing these intelligent avatars without the ‘Uncanny Valley’ effect will be costly: designing a professional, high-quality conversational 3-D avatar that reacts intelligently to a customer’s question in real time is a major engineering effort. Various start-up companies, in cooperation with established AI market players, will provide tools to accomplish this task. Because a machine-driven expert can appear in many copies at once via the internet, its up-front cost is spread over a very large number of consultations, which will bring down the cost of professional advice to a fraction of what it is today. With a total value of around $250 billion in 2016, the global consulting sector is one of the largest and most mature markets within the professional services industry. The first movers to build an avatar-based consulting company are likely to disrupt the entire global consulting sector.
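The argument that an expensive avatar can still make advice cheaper rests on simple arithmetic: a large one-off development cost is spread over many consultations, while each additional session costs little. The Python sketch below works through that arithmetic; all figures (a $1,000,000 build cost, a $5 running cost per session, a $200 fee for a human consultant) and the function names are hypothetical and not taken from any market data.

```python
def cost_per_session(fixed_cost: float, marginal_cost: float, sessions: int) -> float:
    """Average cost of one consultation once the avatar has served `sessions` of them."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return fixed_cost / sessions + marginal_cost


def break_even_sessions(fixed_cost: int, marginal_cost: int, human_fee: int) -> int:
    """Smallest number of sessions at which the avatar's average cost does not
    exceed the human fee, i.e. the ceiling of fixed / (fee - marginal)."""
    if human_fee <= marginal_cost:
        raise ValueError("the avatar never becomes cheaper")
    return -(-fixed_cost // (human_fee - marginal_cost))  # integer ceiling division


# Hypothetical figures, for illustration only.
FIXED, MARGINAL, HUMAN_FEE = 1_000_000, 5, 200
print("break-even after", break_even_sessions(FIXED, MARGINAL, HUMAN_FEE), "sessions")
for n in (1_000, 10_000, 100_000, 1_000_000):
    print(f"{n:>9} sessions: ${cost_per_session(FIXED, MARGINAL, n):,.2f} per session")
```

With these made-up numbers the average cost falls from $1,005 per session after 1,000 sessions to $15 after 100,000, which is the sense in which scale could push the price of advice down to a fraction of a human consultant’s fee.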

Hello Peter, great, very educational and relevant essay. Thank you.

Yes, the importance of security (e.g. blockchain) and conversation auditing (e.g. PAI ranking credentials) will be crucial with PAIs. The major difference from direct 1:1 or small-group conversations between humans is the exponential scale: a PAI may have conversations with an ‘infinite’ number of humans or other PAIs.

PAIs will be able to absorb the vast amount of conversation and process it into continued AI big-data learning; humans can do much less of this (hence the need for the ‘PAI tool’). PAIs will probably not need to sleep 😉 unless their human sets them to idle, and could operate non-stop at high performance.

Deadlocks or discrimination in the evolution of individual PAIs may occur or be imposed (a new class ‘society’ for PAIs).

Just as with today’s information onslaught and the noise produced by bots, internet influencers and advertising, there would be a need to normalize to the essentials. That carries some implicit risk: normalization might leave only one super PAI (worldwide) for each required expert domain or faculty, and humans will flock to and want to use that super PAI (compare the Google index, text search, voice search). Investments and incentives are also high, including via special initiatives such as https://avatar.xprize.org/

Greetings, Hannes

Thank you very much, Peter! Great article.