Picture Credit: Universidad Autónoma de Sinaloa, Mexico

Introduction

The human brain is the product of millions of years of evolution. Human evolution is thought to have begun some 7 to 8 million years ago in the African savannah, where an upright posture and bipedal walking were significant advantages. The main incentive for refining manual skills and tool making may have been the gathering of food. Thanks to more refined hunting methods, our ancestors were able to eat more meat, which added calories, proteins and essential fatty acids to their diet. Because the nervous system consumes a disproportionately large share of the body’s energy, better-quality food was a basic precondition for the evolution of the human brain. Over the last 3.5 million years the size of the human brain has tripled, from an average of about 450 cm³ to today’s average of about 1,350 cm³. A genetic change that may have occurred about 200,000 years ago could have influenced the development of our nervous system, our sensorimotor function and our ability to learn. Since the time of Hippocrates and Herophilus, scientists have located the mind, the emotions and intelligence in the brain. For centuries this idea was explored through anatomical dissection, as early neuroscientists named the various parts of this unusual organ and proposed functions for them. It was not until the late 19th century that Camillo Golgi and Santiago Ramón y Cajal developed the methods needed to look deeper into the brain, using a silver stain to reveal the long, thread-like cells now known as neurons and the connections between them, called synapses. Today, thousands of neuroscientists and major research institutions are striving to understand how this vast network of neurons and their connections shapes human behaviour.
Research that correlates neural activity with specific tasks, whether it monitors human brain activity non-invasively with functional magnetic resonance imaging (fMRI) or records invasively from animal brains, is supported by an ever-growing library of brain-maps. Researchers can use these maps to cross-reference their experiments with the brain’s topology as captured in high-resolution images of biological brain tissue. To manage the complexity of this research, AI tools have become a vital resource for modelling the structure and function of the human brain. The following are some examples.

Uncovering how our brain learns by combining fMRI scans with machine-learning algorithms

As students learn a new concept, how well they grasp it is usually measured with traditional pen-and-paper tests. Researchers at Dartmouth College have developed a machine-learning algorithm that can measure how well a student understands a concept from the student’s brain activity patterns. Over the course of their studies, students develop a rich understanding of many complex concepts, and this acquired knowledge is presumably reflected in new patterns of brain activity. “We currently don’t have a detailed understanding of how the brain supports this kind of complex and abstract knowledge, hence the reason for this study”, said senior author David Kraemer, an assistant professor of education at Dartmouth College. Twenty-eight Dartmouth students took part in the study, divided into two equal groups of 14: engineering students and novices. The engineering students had taken at least one mechanical engineering course and an advanced physics course, whereas the novices had not taken any college-level engineering or physics classes. At the start of the study, participants were given a brief overview of the different types of forces in mechanical engineering. Inside an fMRI scanner, they were then shown images of real-world structures (bridges, lampposts, buildings) and asked to think about how the forces in each structure balance out to keep it in equilibrium. The study found that while engineering students and novices use the visual cortex similarly when applying engineering concepts, they use the rest of the brain very differently to process the same visual image.

In cognitive neuroscience, studies of how information is stored in the brain often rely on averaging data across the participants in a group and then comparing the results with those from another group (such as experts versus novices). For this study, the Dartmouth researchers wanted a data-driven method that could generate an individual “neural score” from brain activity alone, without having to specify which group the participant belonged to. The team created a new machine-learning method called informational network analysis, which produced neural scores that significantly predicted individual differences in knowledge of specific engineering concepts. Informational network analysis could also have broader applications, for example in evaluating the effectiveness of different teaching approaches. The research team is currently comparing hands-on labs with virtual labs to determine whether either approach leads to better learning and retention of knowledge over time.
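The article does not describe how informational network analysis works internally. As a loosely related illustration only, the sketch below swaps in a standard, different technique, representational similarity analysis (RSA). It scores a single participant by correlating how similar their activity patterns are across stimuli with a concept model in which structures sharing the same force category should look alike. Like the Dartmouth neural score, it needs no group label. The function names, the force categories and the synthetic data are all hypothetical assumptions, not the researchers’ code or data.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def neural_score(patterns, categories):
    """Hypothetical RSA-style score for one participant (NOT the Dartmouth method).

    patterns:   one activity vector (e.g. voxel values) per stimulus image
    categories: an assumed concept label per stimulus, e.g. the dominant force
    Returns the correlation between the participant's pattern similarities and a
    model in which same-category stimuli are similar (1) and others are not (0).
    """
    k = len(patterns)
    pairs = [(i, j) for i in range(k) for j in range(i + 1, k)]
    neural = [pearson(patterns[i], patterns[j]) for i, j in pairs]
    model = [1.0 if categories[i] == categories[j] else 0.0 for i, j in pairs]
    return pearson(neural, model)


def simulate_participant(categories, signal, n_voxels=50, seed=0):
    """Synthetic data: each pattern = signal * category prototype + Gaussian noise.

    A larger `signal` stands in for a brain that separates the concepts clearly.
    """
    rng = random.Random(seed)
    prototypes = {c: [rng.gauss(0, 1) for _ in range(n_voxels)]
                  for c in sorted(set(categories))}
    return [[signal * v + rng.gauss(0, 1) for v in prototypes[c]] for c in categories]


if __name__ == "__main__":
    cats = ["tension"] * 3 + ["compression"] * 3 + ["bending"] * 3
    for signal in (0.0, 0.5, 2.0):
        score = neural_score(simulate_participant(cats, signal), cats)
        print(f"signal={signal}: neural score={score:.2f}")
```

To relate such scores to knowledge, one would then correlate them across participants with an independent test of concept understanding; a purely data-driven score like this is what makes the comparison possible without first sorting people into experts and novices.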

Creating a digital brain-map to understand the functioning of the brain

To accelerate neuroscience research worldwide, the Allen Institute for Brain Science was launched in 2003 with the aim of releasing publicly available maps of the brain. Today, neuroanatomy relies on some of the most powerful microscopes and computers available. Viewing synapses, which measure only nanometers across, requires an electron microscope imaging a slice of brain thousands of times thinner than a sheet of paper. Mapping an entire human brain would require 300,000 of these images, and even reconstructing a small three-dimensional brain region from these snapshots takes roughly the same supercomputing power as running an astronomical simulation of the universe.
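The scale described above can be checked with back-of-the-envelope arithmetic. The sketch below assumes that a sheet of office paper is about 100 µm thick and that an electron-microscopy section is about 50 nm thick; both figures are illustrative assumptions, not values published by the Allen Institute. Under these assumptions one section is 2,000 times thinner than the paper, and a tissue block only one millimetre deep must be cut into 20,000 sections.

```python
import math

# Illustrative assumptions only; neither figure comes from the Allen Institute.
PAPER_THICKNESS_NM = 100_000  # a typical sheet of office paper, ~0.1 mm
SECTION_THICKNESS_NM = 50     # an assumed electron-microscopy section


def thinness_ratio(paper_nm: float, section_nm: float) -> float:
    """How many times thinner one section is than a sheet of paper."""
    return paper_nm / section_nm


def sections_needed(depth_mm: float, section_nm: float) -> int:
    """Serial sections needed to cut all the way through a tissue block."""
    depth_nm = depth_mm * 1_000_000  # 1 mm = 1,000,000 nm
    return math.ceil(depth_nm / section_nm)


if __name__ == "__main__":
    ratio = thinness_ratio(PAPER_THICKNESS_NM, SECTION_THICKNESS_NM)
    print(f"{ratio:,.0f} times thinner than paper")
    print(f"{sections_needed(1, SECTION_THICKNESS_NM):,} sections per millimetre of tissue")
```

Each of those sections must then be imaged and aligned with its neighbours, which is why even a small reconstructed volume demands supercomputer-scale resources.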

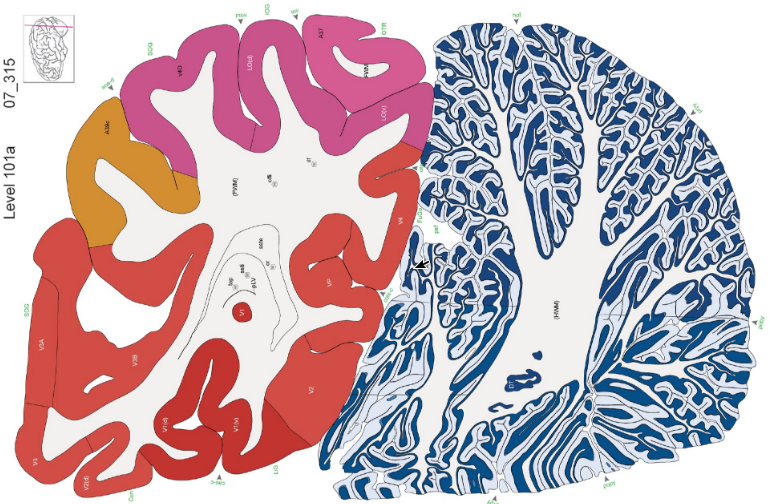

Digital image of a human brain slice from the Allen Brain Atlas

Building on the ongoing efforts of the Allen Institute and its collaborators, scientists at the University of Chicago and Argonne National Laboratory are combining new techniques in microscopy, neurobiology and computing to reveal the brain’s inner mechanisms in unprecedented detail. A diamond knife with an edge only five atoms thick cuts 50-nanometer-thin slices of a human brain, which float away on water onto a conveyor belt that carries them one after another beneath the gaze of an electron microscope. Scientists estimate that the brain contains nearly 100 billion neurons, the basic type of brain cell. Each of those neurons makes tens of thousands of contacts with other cells, bringing the number of connections into the quadrillions (a quadrillion is a million billion). A complete map of these connections, sometimes called the connectome, would be nothing less than the largest dataset ever created. The connectome is the wiring diagram of the brain: a physical map of all the neurons in the brain (or indeed in the whole nervous system) and the synaptic connections between them. Within that massive inventory could lie answers to some of the most elusive scientific questions: the fundamental rules of cognition, explanations for many mental illnesses, even the biological factors that separate humans from other animals. “It is a huge theory of neuroscience that all of our behaviours, all of our pathologies, all of our illnesses, all of the learning that we do, is all due to changes in the connections between brain cells,” says Narayanan Kasthuri, assistant professor of neurobiology at the University of Chicago and neuroscience researcher at Argonne.
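Because a connectome is a wiring diagram, it maps naturally onto a directed graph in which neurons are nodes and synaptic connections are edges. The sketch below is a hypothetical, minimal in-memory representation; the `Connectome` class and its methods are illustrative and are not an API from the Allen Institute, Argonne or any other project. It also makes the scale argument explicit: roughly 10¹¹ neurons times at least 10⁴ contacts each gives on the order of 10¹⁵ connections, a quadrillion, far more than a single in-memory dictionary like this one could ever hold.

```python
from collections import defaultdict


class Connectome:
    """Hypothetical sparse wiring diagram.

    Neuron IDs are nodes; each directed edge pre -> post is weighted by the
    number of synaptic contacts observed between the two neurons.
    """

    def __init__(self):
        # adjacency list: presynaptic neuron -> {postsynaptic neuron: synapse count}
        self._out = defaultdict(lambda: defaultdict(int))

    def add_synapse(self, pre, post, count=1):
        """Record `count` synapses from neuron `pre` onto neuron `post`."""
        self._out[pre][post] += count

    def targets(self, pre):
        """Neurons that `pre` connects to, with synapse counts (a plain dict copy)."""
        return dict(self._out.get(pre, {}))

    def out_degree(self, pre):
        """Number of distinct neurons that `pre` contacts."""
        return len(self._out.get(pre, {}))

    def total_synapses(self):
        """Sum of all synapse counts in the graph."""
        return sum(sum(t.values()) for t in self._out.values())


def estimated_connections(neurons, contacts_per_neuron):
    """Order-of-magnitude estimate of the number of connections in a brain."""
    return neurons * contacts_per_neuron


if __name__ == "__main__":
    c = Connectome()
    c.add_synapse("n1", "n2", 3)
    c.add_synapse("n1", "n3")
    c.add_synapse("n2", "n3", 2)
    print(c.targets("n1"), c.total_synapses())
    print(f"{estimated_connections(100 * 10**9, 10_000):.1e} connections")
```

An adjacency list is used because each neuron contacts only a tiny fraction of all other neurons, so storing only existing edges is far cheaper than a dense neuron-by-neuron matrix.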

Labelling digital brain-maps with the support of deep-learning algorithms

Brain-maps are invaluable for connecting the macro (the brain’s architecture) to the micro (genetic profiles, protein expression, neural networks) across space and time. Scientists can now compare brain images from their own experiments with a standard brain-map resource such as the Allen Brain Atlas of the Allen Institute for Brain Science. This is a critical first step in developing algorithms that can spot brain tumours, or in understanding how depression changes brain connectivity. We are truly in a new age of neuro-exploration. But dotting neurons and drawing circuits is only the start: to be truly useful, brain-maps need to be fully annotated. Just as early cartographers labelled the Earth’s continents, a first step in annotating brain-maps is to precisely delineate the different functional regions. Unfortunately, microscopic neuroimages look nothing like the pages of a brain-anatomy colouring book. Instead, they come in a wide variety of sizes, rotations and colours, and the imaged brain sections are often distorted by extensive chemical pre-treatment. To ensure accurate labelling, scientists often have to annotate every single image by hand. Like the manual labelling of training data for machine learning, this procedure creates a time-consuming, labour-intensive bottleneck in neuro-cartography. To overcome these limitations, a team from the Brain Research Institute of the University of Zurich uses AI to take over the time-consuming job of “region segmentation”, introducing a fully automated, deep-neural-network-based method that registers brain images by segmenting regions of interest with minimal human supervision. Thanks to deep learning, the difficult business of making annotated brain-maps just got a lot easier. The team fed a deep neural network with microscope images of whole mouse brain sections, which had been stained with a variety of methods and a large pool of different markers.
Regardless of the age of the brain, the staining method or the marker, the algorithm reliably identified dozens of regions across the brain, often matching the performance of human annotators.
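Agreement between an automated segmentation and a human annotation is commonly quantified per region with the Dice coefficient, 2|A∩B| / (|A| + |B|), where A and B are the pixels that each labelling assigns to that region. The article does not say which metric the Zurich team used, so the sketch below is only an illustration of how such a per-region comparison could work. It assumes label maps are plain lists of rows of integer region IDs, with 0 as background; these conventions are assumptions, not the team’s data format.

```python
def dice_per_region(predicted, reference, background=0):
    """Dice coefficient for each region label in two same-shaped 2-D label maps.

    predicted, reference: lists of rows of integer region IDs.
    Returns {label: dice}, skipping the background label.
    """
    if len(predicted) != len(reference) or any(
        len(p) != len(r) for p, r in zip(predicted, reference)
    ):
        raise ValueError("label maps must have the same shape")

    pred_count, ref_count, overlap = {}, {}, {}
    for p_row, r_row in zip(predicted, reference):
        for p, r in zip(p_row, r_row):
            pred_count[p] = pred_count.get(p, 0) + 1
            ref_count[r] = ref_count.get(r, 0) + 1
            if p == r:  # both labellings agree on this pixel
                overlap[p] = overlap.get(p, 0) + 1

    labels = (set(pred_count) | set(ref_count)) - {background}
    scores = {}
    for label in sorted(labels):
        # denominator is > 0 because the label occurs in at least one map
        denom = pred_count.get(label, 0) + ref_count.get(label, 0)
        scores[label] = 2 * overlap.get(label, 0) / denom
    return scores


if __name__ == "__main__":
    network = [[0, 1, 1],
               [0, 1, 2],
               [2, 2, 2]]
    human = [[0, 1, 1],
             [1, 1, 2],
             [0, 2, 2]]
    print(dice_per_region(network, human))
```

A score of 1.0 means the network and the human annotator outlined a region identically; “matching human performance” would typically mean that network-versus-human scores are comparable to the scores obtained when two human annotators are compared with each other.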

Conclusion

Artificial intelligence has borrowed knowledge about how the brain works since its early days, when computer scientists and psychologists developed algorithms called neural networks that loosely mimicked the brain. Those algorithms were frequently criticized as biologically implausible: the “neurons” in neural networks were, after all, gross simplifications of the real neurons that make up the brain. Complementing the effort to model neural activity through biological experiments, researchers can also train deep-learning networks to solve the same problems the brain needs to solve. Together, our knowledge of the biology of the human brain and its data-scientific interpretation with AI set the stage for solving one of the last mysteries of human physiology. Mastering this next step of evolution will require ethical guidelines that go well beyond the issues we face today.
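To make the “gross simplification” concrete: a unit in a classic artificial neural network computes only a weighted sum of its inputs plus a bias and passes the result through a fixed nonlinearity, with none of the dendritic structure, spike timing or chemical signalling of a biological neuron. The minimal sketch below uses a logistic sigmoid as the activation function; that choice is an assumption for illustration, since many networks use other activations.

```python
import math


def artificial_neuron(inputs, weights, bias):
    """A classic artificial 'neuron': weighted sum of inputs plus bias, then a sigmoid."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))  # squashes z into the range (0, 1)


if __name__ == "__main__":
    # Two inputs, e.g. the outputs of two upstream units
    print(artificial_neuron([1.0, 0.5], weights=[0.8, -0.4], bias=0.1))
```

Deep-learning networks stack millions of such units and learn the weights from data; their usefulness as brain models comes from what large networks of these simple units can learn, not from any realism of the individual unit.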