Picture Title: HUMAN. Picture Credit: deepdreamgenerator.com

Introduction

Patricia Churchland is the doyenne of neurophilosophers. She believes that to understand the mind, one must understand the brain, using evidence from neuroscience to refine concepts such as free will. The problem, Churchland writes, is that deep down we are all dualists: our conscious selves inhabit the world of ideas, while our brains inhabit the world of objects. This split runs so deep that we find it hard to accept an intimate relationship between the mind and the brain. To overcome this barrier, one can turn to psychology, addressing issues such as perception, intelligence and consciousness. Self-reflection is one of the outstanding features of consciousness. Dreaming through bizarre and possibly threatening scenarios is an effective way of drawing on our subconscious. Whether consciously or subconsciously, we are engaged in a creative process of resolving an inner conflict.

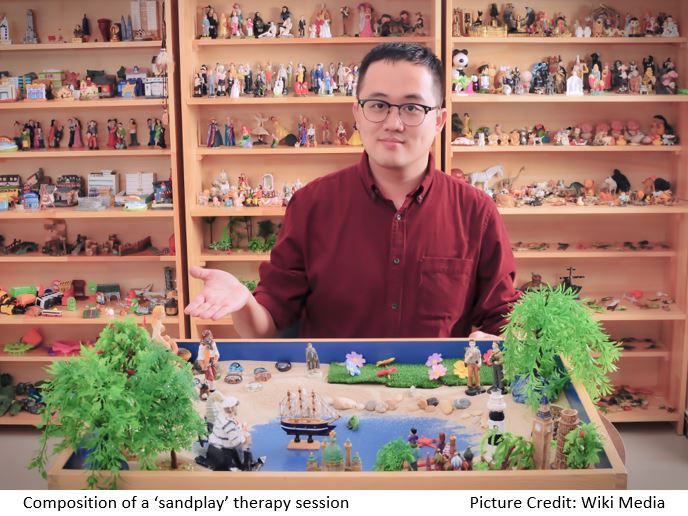

A therapeutic approach to support this process was developed by the psychoanalyst Dora Kalff. Building on the analytical psychology of the Swiss psychiatrist C.G. Jung, she created ‘sandplay’ therapy: a nonverbal therapeutic intervention that uses a sandbox, toy figures, and sometimes water to create miniature worlds reflecting a person’s inner thoughts, struggles, and concerns.

This form of play therapy is practiced alongside talk therapy, using the sandbox and figures as communication tools. A series of ‘sandplay’ images portrayed in the sand tray creates an ongoing dialogue between the conscious and subconscious aspects of an individual’s psyche, thereby activating a healing process that supports the development of one’s personality. This creative process can be simulated by Generative Adversarial Networks (GANs), supporting an inner, self-reflecting dialogue between the images produced and the conscious experience of viewing them.

What are GANs?

Generative Adversarial Networks (GANs) combine two neural networks, pitting one against the other (hence the term ‘adversarial’). The concept was introduced in a 2014 paper by Ian Goodfellow and fellow researchers at the University of Montreal. Referring to GANs, Facebook’s AI research director Yann LeCun called adversarial training “the most interesting idea in the last 10 years in ML.” GANs’ potential is huge, because they can in principle learn to mimic any distribution of data. GANs can be taught to create worlds similar to our own in many domains: images, music, speech or prose. They are robot artists in a sense, and their output is impressive. At a widely publicized auction in 2018, Christie’s sold a portrait for USD 432,500 that had been generated by a GAN and printed with an inkjet printer. The open-source code was originally written by Robbie Barrat of Stanford. Art experts at Obvious, a French company, used the GAN to generate the portrait. The question of who owns the rights to the portrait still looms: the buyer, the programmer, the GAN algorithm or the company Obvious?

The ability of GANs to create photorealistic images of artificial faces that cannot be distinguished from photos of real people has raised concerns about the generation of fake content, an issue that needs to be addressed in the ongoing AI ethics debate.

An example of how GANs work

The two subnetworks of a GAN are called the ‘generator’ and the ‘discriminator’. The discriminator has access to a set of real images (the training set) and tries to tell these ‘real’ images apart from the ‘fake’ images that the generator produces from random input noise. The generator, in turn, receives a signal from the discriminator indicating whether each generated image was classified as ‘real’ or ‘fake’, and uses this signal to improve its next attempt. At equilibrium, the discriminator can no longer tell generated images from training images: the generator has learned to produce images that match the training set, and the discriminator’s best guess is no better than a coin toss.
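The equilibrium described above can be made concrete with a small numerical sketch. It follows the argument of the original 2014 GAN paper: for a fixed generator, the best possible discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), and the value of the game at that discriminator reaches its minimum, -log 4, exactly when the generator’s distribution matches the data. The sketch assumes one-dimensional Gaussian "images" of equal width, a deliberate simplification; the function names are illustrative and do not come from any library.

```python
import math


def normal_pdf(x: float, mean: float, std: float = 1.0) -> float:
    """Density of a one-dimensional Gaussian N(mean, std^2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def optimal_discriminator(p_data: float, p_gen: float) -> float:
    """Best discriminator for a fixed generator: probability that x is 'real'."""
    return p_data / (p_data + p_gen)


def value_at_optimal_d(gen_mean: float, data_mean: float = 0.0, std: float = 1.0,
                       lo: float = -12.0, hi: float = 12.0, steps: int = 24_000) -> float:
    """Approximate V(D*, G) = E_data[log D*(x)] + E_gen[log(1 - D*(x))].

    Assumption: 'real' and 'generated' samples are 1-D Gaussians with the same
    std. The integral is evaluated with the trapezoidal rule on [lo, hi].
    """
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * dx
        p_d = normal_pdf(x, data_mean, std)
        p_g = normal_pdf(x, gen_mean, std)
        denom = p_d + p_g
        if denom == 0.0:
            continue  # both densities underflowed; contribution is negligible
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoidal end-point weights
        # log D* = log(p_d / denom) and log(1 - D*) = log(p_g / denom); computing
        # them this way avoids log(0) when D* rounds to exactly 0 or 1.
        if p_d > 0.0:
            total += weight * p_d * math.log(p_d / denom)
        if p_g > 0.0:
            total += weight * p_g * math.log(p_g / denom)
    return total * dx


if __name__ == "__main__":
    # When the generator matches the data, D* is 0.5 everywhere: a coin toss.
    p = normal_pdf(0.7, 0.0)
    print(optimal_discriminator(p, p))  # 0.5
    for m in (0.0, 1.0, 3.0):
        print(f"generator mean {m}: V(D*, G) = {value_at_optimal_d(m):.4f}")
    print(f"-log 4 = {-math.log(4):.4f}")
```

As the generator’s mean moves away from the data, V(D*, G) rises above -log 4 (by twice the Jensen-Shannon divergence between the two distributions). That gap is what the discriminator exploits, and what pushes the generator back towards the training data during training.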

GANs are a very active topic of AI research, and there have been many different types of GAN implementations. Over 100 variants of GANs were introduced in 2017 alone. Major recent GAN applications include generating images at increasingly high resolution, classifying images with only a small number of labelled samples, and transforming existing imagery, such as refining a synthetic ‘toy image’ into a more realistic one.

A thought model about the analogy between human consciousness and GANs

GANs are a potentially useful analogy for understanding the human brain. In human brains, the biological neurons responsible for encoding perceptual information serve multiple purposes. For example, the neurons that fire when you see a cat also fire when you imagine or remember a cat. So, whenever there is activity in our neural circuitry, the brain needs to be able to figure out the cause of the signals, whether internal or external. John Locke, the 17th-century British philosopher, believed that we had some sort of inner organ that performed the job of sensory self-monitoring. But critics of Locke wondered why Mother Nature would take the trouble to grow a whole separate organ on top of a system that is already set up to detect the world via the senses. In light of what we now know about GANs, Locke’s idea makes a certain amount of sense. We need a discriminator to decide when we are seeing something versus when we are merely thinking about it. This GAN-like inner sense organ – or something like it – needs to be there to act as an adversarial rival, to distinguish between perception and imagination. If the perceptual signal from the generator says there is a cat, and the discriminator decides that this signal truthfully reflects the state of the world right now, we indeed see a cat. To the extent that the discriminator gets things right most of the time, we tend to trust it. When there is a conflict between subjective impressions and rational beliefs, it seems to make sense to believe what we consciously perceive and experience. Likewise, GANs can emulate this process of human conscious experience as an interplay between generator and discriminator.

From C.G. Jung-inspired ‘sandplay’ therapy to GANpaint

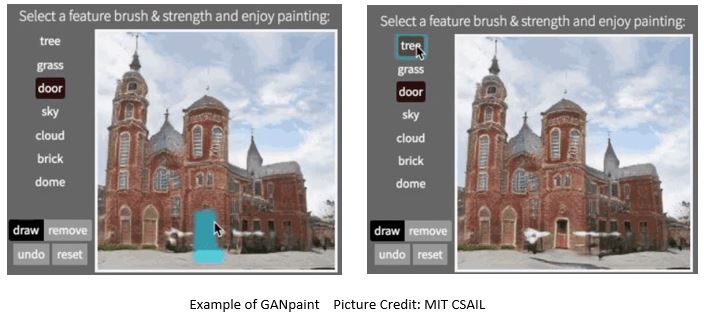

Researchers from the MIT-IBM Watson AI Lab have been working on the idea that GANs could be programmed to paint what they are “thinking”. By performing this task, GANs could provide insights into how biological neural networks learn and reason. The researchers began probing a GAN’s learning mechanism by feeding it various photos of scenery: trees, grass, buildings and sky. They wanted to see whether the GAN would learn to organize the pixels into sensible groups without being explicitly told how. Stunningly, over time, it did.

By turning various artificial “neurons” of the deep-learning network “on” and “off” and asking the GAN to paint what it “thought”, the researchers found distinct neuron clusters that had learned to represent a tree, for example. Other clusters represented grass, while still others represented walls or doors. Through the interplay between generator and discriminator, the GAN had managed to group tree pixels with tree pixels and door pixels with door pixels, regardless of how these objects changed colour from photo to photo in the training set. Not only that, but the GAN seemed to know what kind of door to paint depending on the type of wall pictured in an image. It would paint a Georgian-style door on a brick building with Georgian architecture, or a stone door on a Gothic building. It also refused to paint any doors on a piece of sky. Without being told, the GAN had somehow grasped certain unspoken truths about the world.

Being able to identify which clusters correspond to which concepts made it possible to control the neural network’s output. The team has released an app called GANpaint that turns this newfound ability into an interactive artistic tool. Beyond its entertainment value, the app also demonstrates the greater potential of this research. It works by directly activating and deactivating sets of neurons in a deep network trained to generate images: each button on the left of the app’s interface (“door”, “brick”, etc.) corresponds to a set of 20 neurons in the network.
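The editing step that GANpaint exposes can be sketched in a few lines. This is a toy illustration under stated assumptions, not the MIT-IBM team’s code: one generator layer is modelled as a dictionary of "units", each holding a small grid of activations over image positions. In the spirit of the GAN dissection work behind the app, "removing" a concept is assumed to force its units to zero inside the painted region, and "drawing" it to force them to a fixed positive value. The names `edit_units` and `DOOR_UNITS`, the unit indices and the value 5.0 are all hypothetical; in the real system the edited activations would then flow through the remaining generator layers to produce the image.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy model of one generator layer: unit index -> 2-D activation grid.
FeatureMap = Dict[int, List[List[float]]]
Region = Set[Tuple[int, int]]  # (row, col) cells the user painted over


def edit_units(features: FeatureMap, units: List[int], region: Region,
               value: float) -> FeatureMap:
    """Return a copy of `features` with `units` forced to `value` inside `region`.

    value == 0.0 mimics removing a concept; a positive value mimics drawing it.
    Cells outside the region, and units not listed, are left untouched.
    """
    edited = {u: [row[:] for row in grid] for u, grid in features.items()}
    for u in units:
        for r, c in region:
            edited[u][r][c] = value
    return edited


if __name__ == "__main__":
    # A 3x3 layer with four units, all mildly active (illustrative numbers).
    layer: FeatureMap = {u: [[0.2] * 3 for _ in range(3)] for u in range(4)}
    DOOR_UNITS = [1, 3]  # hypothetical "door" cluster (the app uses 20 per button)
    painted: Region = {(2, 1)}  # bottom-middle cell, where the user brushed "door"

    with_door = edit_units(layer, DOOR_UNITS, painted, value=5.0)
    without_door = edit_units(with_door, DOOR_UNITS, painted, value=0.0)

    print(with_door[1][2][1], layer[1][2][1])  # 5.0 0.2 (original left intact)
    print(without_door[3][2][1])               # 0.0
```

Returning a copy rather than editing in place keeps the unedited layer available, so an edit can be undone or compared against the original image.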

Extending the GANpaint concept, one could envision a data set of miniature figures and landscape images like those used in ‘sandplay’ therapy. Interactively composing scenarios that reflect the player’s subconscious state would generate visual feedback, providing a conscious experience of his or her emotional state. A library of these composed images might serve as a communication tool for personality development with the online support of a trained therapist, moving subconscious problems into consciousness and, as one result, improving the player’s decision-making capability.

Conclusion

In our decision-making process, we seek external information to guide us. This includes the advice and opinions of people we know, people with expertise in the field, or people we trust.

Knowing ourselves better enables us to properly assess the value of the advice or information we are given. Fostering consciousness is vital if we are to maintain our mental independence in a digital world where fake information and malicious influence threaten the very foundation of our democratic society. Augmenting humans by applying GAN concepts can also strengthen conscious experience as a basis for decisions that counter the destructive use of AI.

Individuals who consistently make the right decisions may not be the smartest or the luckiest. They are the ones who thoroughly understand themselves.