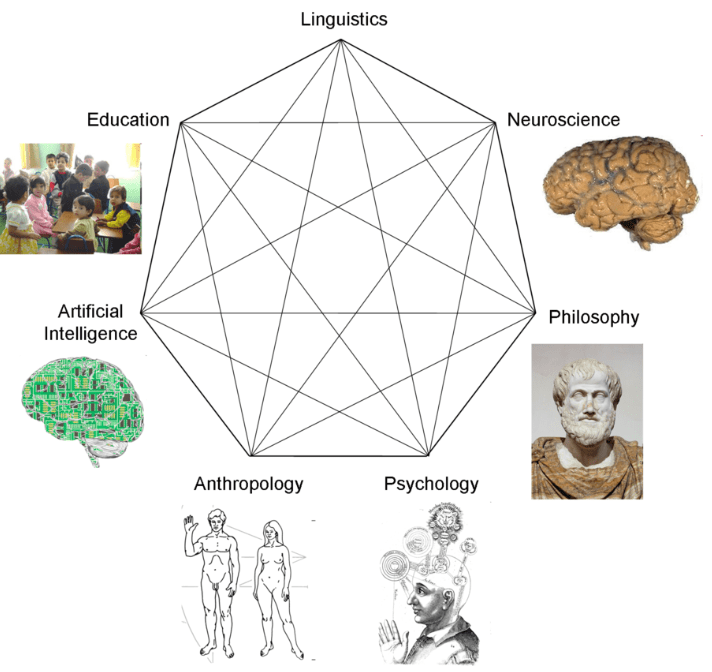

Picture Credit: Wikipedia

Introduction

Continuous progress in artificial intelligence (AI) is raising expectations of building systems that learn and think like people. Many advances have come from deep neural networks trained end-to-end on tasks such as object recognition, language translation or board games, achieving performance equal or even superior to that of humans in some respects.

The success of neural networks has captured attention beyond academia. In industry, companies such as Google and Facebook have active research divisions exploring these technologies, and object and speech recognition systems based on deep learning have been deployed in core products on smartphones and the web. The media has also covered many of the recent achievements of neural networks, often expressing the view that neural networks owe this success to their brain-like computation and, therefore, to their ability to emulate human learning and human cognition.

Despite their biological inspiration and performance achievements, these systems differ from human intelligence in crucial ways. One source of inspiration for advancing AI to a level closer to human thinking comes from Geoffrey Hinton, Professor at the University of Toronto and a Google researcher. After years of research, Hinton has finally released details of his new “capsule” theory, which could have a significant impact on current machine learning.

Another source of inspiration comes from Joshua Tenenbaum, Professor in MIT’s Department of Brain and Cognitive Sciences, who is engaged in reverse engineering the human mind. Progress in cognitive science suggests that truly human-like learning and thinking machines will have to go beyond current engineering trends in both what they learn and how they learn it.

Hinton’s new theory: Replace Backpropagation with Capsule Networks

Geoffrey Hinton is also known as the father of “deep learning.” The idea of deep neural networks began to surface back in the 1950s, and in theory such networks could solve a vast number of problems. However, nobody was able to figure out how to train them, and development slowed. Hinton didn’t give up, and in 1986, together with David Rumelhart and Ronald Williams, he showed that a method called backpropagation could train these multilayer networks. Because computational power was still limited, however, it wasn’t until 2012 that Hinton and his students were able to demonstrate the breakthrough that set the stage for this decade’s progress in AI.

In backpropagation, a network’s connection strengths, or “weights,” determine how a photo or a voice recording is represented within its brain-like neural layers. The network’s output is compared with labeled examples, and the weights are then adjusted and readjusted, layer by layer, until the network can perform an intelligent function with the fewest possible errors. But Hinton suggests that for neural networks to become intelligent on their own, something also known as “unsupervised learning,” one has to get rid of backpropagation. “I don’t think it is how the brain works,” he said. “We clearly do not need all the labeled data.”
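To make the weight-adjustment loop concrete, here is a minimal sketch of backpropagation for a tiny network with one hidden layer of sigmoid units, trained on the XOR problem with plain gradient descent. It is an illustration only, not Hinton’s code: the XOR data, the three hidden units, the squared-error loss, the learning rate and all function names are assumptions chosen for brevity.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_in, n_hidden, seed=0):
    # small random weights; the seed makes the run repeatable
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }

def forward(p, x):
    # hidden-layer activations, then a single sigmoid output
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"])
    return h, y

def loss(p, data):
    # halved mean squared error between outputs and labels
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)

def backprop(p, data):
    # gradient of the loss for every weight, computed from the output layer backwards
    n = len(data)
    g = {"W1": [[0.0] * len(row) for row in p["W1"]], "b1": [0.0] * len(p["b1"]),
         "w2": [0.0] * len(p["w2"]), "b2": 0.0}
    for x, t in data:
        h, y = forward(p, x)
        d_out = (y - t) * y * (1 - y) / n  # error signal at the output unit
        g["b2"] += d_out
        for j, hj in enumerate(h):
            g["w2"][j] += d_out * hj
            d_hid = d_out * p["w2"][j] * hj * (1 - hj)  # error passed back to hidden unit j
            g["b1"][j] += d_hid
            for i, xi in enumerate(x):
                g["W1"][j][i] += d_hid * xi
    return g

def step(p, g, lr):
    # move every weight a small step against its gradient
    p["W1"] = [[w - lr * gw for w, gw in zip(row, grow)] for row, grow in zip(p["W1"], g["W1"])]
    p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]
    p["w2"] = [w - lr * gw for w, gw in zip(p["w2"], g["w2"])]
    p["b2"] -= lr * g["b2"]

XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
params = init_params(2, 3)
start = loss(params, XOR)
for _ in range(2000):
    step(params, backprop(params, XOR), lr=0.5)
end = loss(params, XOR)
print(f"loss before training: {start:.4f}, after: {end:.4f}")
```

Each iteration compares the outputs with the labels, pushes the error back through the layers and nudges every weight downhill. The labeled targets in `XOR` are exactly the kind of supervision Hinton argues the brain does not seem to need.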

On October 26, 2017, he released a paper on a new, groundbreaking concept called capsule networks. Speaking on the sidelines of an AI conference, Hinton said he was “deeply suspicious” of backpropagation, the workhorse method that underlies most of the advances we are seeing in AI today, including the capacity to sort through photos and talk to Siri. “My view is to throw it all away and start again,” he said. Hinton argues that to classify and recognize objects correctly, it is important to preserve the hierarchical pose relationships (position, size and orientation) between object parts. This is the key intuition behind why capsule theory is so important.
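The paper Hinton released on October 26, 2017, “Dynamic Routing Between Capsules” by Sara Sabour, Nicholas Frosst and Geoffrey Hinton, represents each part or object by a vector whose length encodes the probability that the entity is present and whose orientation encodes its pose. Lower-level capsules send predictions to higher-level capsules, and a “routing-by-agreement” loop strengthens the connections whose predictions agree. Below is a minimal pure-Python sketch of the squash nonlinearity and that routing loop, under stated assumptions: the prediction vectors are hand-picked rather than produced by learned transformation matrices (which, in the paper, are still trained with backpropagation), and the function names are illustrative.

```python
import math

def squash(s):
    # shrink short vectors toward zero and long vectors to just under length 1, keeping direction
    sq = sum(x * x for x in s)
    if sq == 0.0:
        return [0.0 for _ in s]
    scale = sq / (1.0 + sq) / math.sqrt(sq)
    return [scale * x for x in s]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def route(u_hat, iterations=3):
    """u_hat[i][j] is the prediction vector from lower capsule i for higher capsule j."""
    n_lower, n_higher, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_higher for _ in range(n_lower)]  # routing logits start out uniform
    for _ in range(iterations):
        # each lower capsule splits its output among the higher capsules
        c = [softmax(row) for row in b]
        s = [[sum(c[i][j] * u_hat[i][j][d] for i in range(n_lower)) for d in range(dim)]
             for j in range(n_higher)]
        v = [squash(sj) for sj in s]
        # raise the logit wherever a prediction agrees with the higher capsule's output
        for i in range(n_lower):
            for j in range(n_higher):
                b[i][j] += dot(u_hat[i][j], v[j])
    return c, v

# Hand-picked predictions: all three lower capsules agree about higher capsule 0 only.
u_hat = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, -1.0]],
    [[1.0, 0.0], [1.0, 0.0]],
]
c, v = route(u_hat)
for j, vj in enumerate(v):
    print(f"higher capsule {j}: length {math.hypot(*vj):.3f}")
```

Because the lower capsules agree on higher capsule 0, their coupling coefficients shift toward it and its output vector grows longer; the conflicting predictions for capsule 1 partly cancel, leaving a short vector, that is, a low presence probability.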

When these relationships are built into a model’s internal representation of data, it becomes much easier for the model to understand that what it sees is just another view of something it has seen before. Consider the image below. You can easily recognize that this is the Statue of Liberty, even though the images show it from different angles. This is because the internal representation of the Statue of Liberty in your brain does not depend on the viewing angle. You have probably never seen these exact pictures of it, but you still recognize it immediately.
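The viewpoint argument can be checked with a small geometric sketch. Assume, hypothetically, that an object is made of three parts (labelled "head", "torch_arm" and "base" purely for illustration) whose poses relative to the whole are fixed. Each observed part can then “vote” for the pose of the whole object. If the parts are arranged consistently, the votes agree no matter how the whole object is rotated or moved, while a scrambled arrangement produces conflicting votes. Poses here are 2-D rigid transforms in homogeneous coordinates; this is a simplification of the learned part-whole matrices in capsule networks, not the paper’s method.

```python
import math

def pose(theta, tx, ty):
    # 2-D rigid transform (rotation theta, translation tx, ty) as a 3x3 homogeneous matrix
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def rigid_inverse(m):
    # inverse of [R t; 0 1] is [R^T  -R^T t; 0 1]
    (r00, r01, tx), (r10, r11, ty) = m[0], m[1]
    return [[r00, r10, -(r00 * tx + r10 * ty)],
            [r01, r11, -(r01 * tx + r11 * ty)],
            [0.0, 0.0, 1.0]]

# Hypothetical part-whole relationships: the pose of each part in the object's own frame.
PART_IN_WHOLE = {
    "head": pose(0.0, 0.0, 2.0),
    "torch_arm": pose(0.3, 0.8, 1.5),
    "base": pose(0.0, 0.0, -2.0),
}

def observe(whole_pose):
    # where each part appears when the whole object has the given pose
    return {name: matmul(whole_pose, rel) for name, rel in PART_IN_WHOLE.items()}

def predict_whole(part_poses):
    # each part votes for the whole: T_whole = T_part @ inverse(T_part_in_whole)
    return {name: matmul(p, rigid_inverse(PART_IN_WHOLE[name])) for name, p in part_poses.items()}

def max_disagreement(votes):
    # largest entry-wise difference between any two votes (0 means perfect agreement)
    mats = list(votes.values())
    return max(abs(a[i][j] - b[i][j]) for a in mats for b in mats for i in range(3) for j in range(3))

front = observe(pose(0.0, 0.0, 0.0))
rotated = observe(pose(math.radians(30), 5.0, 1.0))
scrambled = dict(front, head=front["base"], base=front["head"])  # parts in the wrong places

for label, parts in [("front", front), ("rotated", rotated), ("scrambled", scrambled)]:
    print(label, round(max_disagreement(predict_whole(parts)), 6))
```

Agreement among the votes depends on how the parts relate to each other, not on the viewing angle, which is the property the capsule approach tries to exploit.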

In this sense, capsule theory is much closer to what the human brain does in practice. The human brain needs to see only a couple of dozen examples, hundreds at most. Existing neural networks, on the other hand, need tens of thousands of examples to achieve very good performance, which seems like a brute-force approach that is clearly inferior to what we do with our brains.

Tenenbaum’s Theory: Human thought is fundamentally a model-building activity

Joshua Tenenbaum and his colleagues at MIT distinguish between two computational approaches to intelligence: a) the pattern recognition approach, which treats prediction as a problem of classifying or regressing data, as is typically done with deep learning algorithms; and b) the alternative approach pursued by Tenenbaum, in which learning is the process of model-building. Cognition is about using these models to understand the world, to explain what we see, to imagine what could have happened that didn’t, or what could be true that isn’t, and then planning actions to make it so. The difference between statistical pattern recognition and model-building can be viewed as the distinction between prediction and explanation. According to Tenenbaum, humans have, early in development, a foundational, intuitive understanding of several core domains, which he describes as cognitive ‘start-up’ software. These domains include number (numerical and set operations), space (geometry and navigation), physics (inanimate objects and mechanics) and psychology (individuals and groups). Based on observations of children’s behavior, his research shows the following:
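A toy contrast between prediction and explanation, offered as an illustration rather than as Tenenbaum’s method: a ball’s height is observed only during the first two seconds of a fall. A pattern-recognition stand-in (nearest-neighbour lookup, far simpler than a deep network) can only echo the closest stored example, while a learner that assumes the causal model height = h0 - 0.5 * g * t**2 can infer the hidden quantity g and extrapolate. The drop height, the sample times and the value of g used to generate the data are assumptions.

```python
G_TRUE, H0 = 9.81, 100.0  # assumed values, used only to generate the toy observations

def height(t, g=G_TRUE, h0=H0):
    # causal model of free fall (air resistance ignored)
    return h0 - 0.5 * g * t * t

observed = [(t, height(t)) for t in (0.0, 0.5, 1.0, 1.5, 2.0)]

def nearest_neighbor_predict(train, t):
    # pattern recognition: return the output of the most similar stored example
    return min(train, key=lambda ex: abs(ex[0] - t))[1]

def infer_gravity(train, h0=H0):
    # model building: least-squares estimate of g under y = h0 - 0.5 * g * t**2.
    # Setting d/dg of sum((h0 - a*g - y)**2) to zero, with a = 0.5 * t**2,
    # gives g = sum(a * (h0 - y)) / sum(a * a).
    num = sum(0.5 * t * t * (h0 - y) for t, y in train)
    den = sum((0.5 * t * t) ** 2 for t, _ in train)
    return num / den

g_hat = infer_gravity(observed)
t_query = 4.0  # outside the observed range
print("true height:      ", height(t_query))
print("nearest neighbour:", nearest_neighbor_predict(observed, t_query))
print("causal model:     ", height(t_query, g=g_hat))
```

Both learners reproduce the observed range, but only the one that explains the data through an underlying cause generalizes beyond it.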

Intuitive physics

Young children have rich knowledge of intuitive physics. Whether learned or innate, important physical concepts are in place long before a child learns to play an interactive game, for example. Infants have primitive object concepts that allow them to track objects over time and to discount physically implausible trajectories. For example, infants know that objects persist over time and that they are solid and coherent. Equipped with these general principles, children can learn more quickly and make more accurate predictions: while a task may be new, physics still works the same way. At around 6 months, infants have already developed different expectations for rigid bodies, soft bodies and liquids. Liquids, for example, are expected to go through barriers, while solid objects are not. By their first birthday, infants have gone through several transitions in their grasp of basic physical concepts such as inertia, support, containment and collisions.
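The object principles described above can be written as simple checks. The sketch below is a hypothetical, rule-based detector of implausible events, not a model from Tenenbaum’s research. It tracks an object along one dimension, treats frames where the object is hidden as occlusion rather than disappearance (persistence), rejects jumps that are too large for the elapsed time (continuity), and rejects a solid object passing through a wall while letting a liquid through. The 1-D track, the speed limit and the wall position are all assumptions.

```python
from typing import Optional, Sequence

def is_plausible_trajectory(track: Sequence[Optional[float]], max_speed: float,
                            wall: Optional[float] = None, solid: bool = True) -> bool:
    """track holds one position per frame; None means the object is hidden behind an occluder."""
    last_x: Optional[float] = None
    last_t = 0
    for t, x in enumerate(track):
        if x is None:
            continue  # persistence: a hidden object still exists, so a gap is not a violation
        if last_x is not None:
            # continuity: the object cannot cover more distance than the elapsed frames allow
            if abs(x - last_x) > max_speed * (t - last_t):
                return False
            # solidity: a solid object cannot end up on the other side of a wall
            if solid and wall is not None and (last_x - wall) * (x - wall) < 0:
                return False
        last_x, last_t = x, t
    return True

print(is_plausible_trajectory([0, 1, None, None, 4], max_speed=1.5))                # hidden, then reappears nearby
print(is_plausible_trajectory([0, 1, 9], max_speed=1.5))                           # jumps too far
print(is_plausible_trajectory([0, 1, 2, 3], max_speed=1.5, wall=2.5))               # solid passes through a wall
print(is_plausible_trajectory([0, 1, 2, 3], max_speed=1.5, wall=2.5, solid=False))  # liquid passes through
```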

Intuitive psychology

Intuitive psychology manifests itself at an early stage and has an important impact on human learning and thought. Infants understand that other people have mental states like goals and beliefs, and this understanding strongly constrains their learning and predictions. A child watching an expert play a new video game can infer that the game’s avatar has agency and is trying to seek reward while avoiding punishment. This inference in turn lets the child work out which objects are good and which are bad. Such inferences further accelerate the learning of new tasks. It is generally agreed that infants expect people to act in a goal-directed, efficient and socially sensitive fashion. There is less agreement on the computational architecture that supports this reasoning and whether it includes any reference to mental states and explicit goals.

Learning as rapid model building

While there are many perspectives on learning, Tenenbaum and his colleagues see model building as the hallmark of human-level learning: explaining observed data by constructing causal models of the world. Compared with state-of-the-art machine learning algorithms, human learning is distinguished by its richness and its efficiency. Children come with the ability and the desire to uncover the underlying causes of sparsely observed events and to use that knowledge to learn and to build new models of their own. Humans have a much richer starting point than neural networks when learning most new tasks, including learning a new concept or learning to play a new video game.
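One common way to formalize learning a cause from sparse data is Bayesian inference over candidate causal hypotheses, the style of model used in much of this line of research. The sketch below is a toy example of that idea, not one of Tenenbaum’s actual models: a learner asks whether a new object causes some effect (say, a light switching on), and the prior and the two likelihoods (0.9 if the object is the cause, 0.05 if it is not) are assumed numbers.

```python
def posterior_is_cause(observations, prior=0.5, p_effect_if_cause=0.9, p_effect_if_not=0.05):
    """Probability that an object causes an effect, given a few trials with the object present.
    observations: list of booleans, True when the effect occurred."""
    like_cause = like_not = 1.0
    for effect in observations:
        # multiply in the likelihood of each trial under both hypotheses
        like_cause *= p_effect_if_cause if effect else 1.0 - p_effect_if_cause
        like_not *= p_effect_if_not if effect else 1.0 - p_effect_if_not
    # Bayes' rule: normalize over the two hypotheses
    joint_cause = prior * like_cause
    return joint_cause / (joint_cause + (1.0 - prior) * like_not)

for trials in ([], [True], [True, True], [True, False]):
    print(trials, round(posterior_is_cause(trials), 3))
```

Because the hypotheses carry strong expectations about what a cause looks like, two observations are already enough for a confident conclusion; the structure of the model, rather than the amount of data, does most of the work.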

Conclusion

While the pace of progress in AI has been impressive, natural intelligence is still by far the best example of intelligence. Machine performance may rival or exceed human performance on particular tasks, but it does not follow that the algorithm learns or thinks like a person. Compared with the current best algorithms in AI and machine learning, people learn from less data and generalize in richer and more explicable ways. Deep learning and other learning paradigms can move closer to human-like learning and thought if they incorporate psychological ingredients or new concepts of data representation, as illustrated by Hinton’s new capsule networks. Combining both approaches is likely to accelerate the quest to reach the singularity. Model building is a creative act that provides answers to questions raised by humans. Asking good questions will remain the key contribution humans make in the age of the singularity. To do so, however, we need a comprehensive understanding of our cultural heritage, an important message for our educational institutions. AI will augment human intelligence, but it cannot replace it.

Peter, thank you for this excellent and well-researched essay. I did not yet know about the recent momentum behind capsule networks (G. Hinton), and based on your writing I searched GitHub, which already has a TensorFlow implementation: https://github.com/naturomics/CapsNet-Tensorflow.

Then, regarding your reference to J. Tenenbaum on pattern recognition and model building, I can only confirm, as probably most people conscious of their learning journey can, that these are prime/key methods for mapping complexity, temporarily or permanently, into evolving patterns and models.

best regards Hannes