Nature rebounds Picture Credit: pxhere.com

Introduction

For many, the COVID-19 outbreak marks the first experience of fear and anxiety as a pervasive mood. As a society we are afraid of fear, and most of the time we can turn our backs on it. But this kind of denial does not work in a pandemic. As bad news mounts daily and society becomes ever more anxious, countless people become emotionally paralyzed by fear without knowing how to escape. Social forces can drive one towards fear, but society cannot get you out of it. Escape is something each person must handle on their own. AI can contribute in many ways to fighting the devastating impact of a pandemic. Motion and location sensor data, supported by AI analysis, can help bring an outbreak under control. Likewise, everyone is challenged to raise their conscious awareness to reduce fear, for example by interpreting their own body sensor data such as blood sugar, blood pressure, brain activity, body temperature or sleep. Advances in AI algorithms that interpret these datasets will become a valuable resource for supporting individual consciousness and the ability to adapt to rapidly changing, life-threatening situations. While some view the pandemic as an accelerator of the digital transformation of health research, AI-supported consciousness research is likely to gain the most in the future. To handle future pandemics effectively, societal and corporate institutions can draw on the experience and lessons learned from COVID-19, reducing the risk of damage to our physical, mental and economic health.

Lesson learned 1: High-performance teams need co-location, not isolation

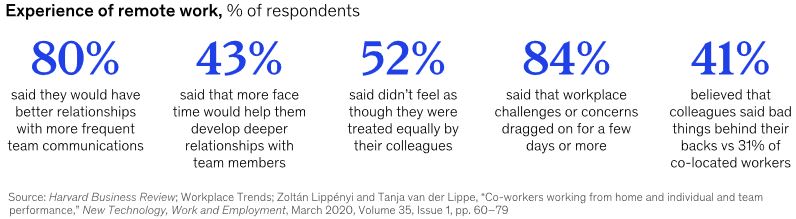

Until the outbreak of the pandemic, the business models of sustainably successful organizations focused heavily on high-performance teams, managed with a mindset of emotional intelligence and working collectively to avoid knowledge silos. Joint leadership teams defined the mission and the benchmarks to be met, including the long- and short-term strategies to follow. Corporate governance, adherence to ethics standards and performance reporting were the joint responsibility of the team leaders. While an integrated corporate AI system could recommend the best course of action for resolving unforeseen problems, human judgement had to stay in the loop to spot machine failures. To realize this advantage, leaders needed to balance the interplay of intelligent machines and human assets optimally for each specific task. With this structure in place, the corporation resembles a living organism that can master the ‘survival of the fittest’ in a highly competitive environment. Seen through this ‘living organism’ lens, it seems obvious that working remotely from home hinders teamwork. Home offices may work well for many administrative tasks, but progress in digital transformation and AI is likely to automate many of those tasks anyway, for example through automated decision making or automated report writing.

The abrupt shift to remote working in response to COVID-19 is challenging the typical approach to managing high-performance teams. Traditionally, such teams thrive when members are co-located, with close-knit groups working in the same place. Co-location allows frequent in-person contact, quickly builds trust, simplifies problem solving, encourages instant communication and enables fast-paced decision making. The sudden transition of co-located teams to a fully remote setup can reduce cohesion and increase inefficiency.
The following chart, provided by a McKinsey Report in April 2020, documents this experience:

Working from home blurs the lines between professional and personal life. Team members may feel added stress about the impression they make on video, whether because of the appearance of their home workspace, interruptions from young children, or family members sharing the same space.

Lesson learned 2: Video communication cannot replace the co-location experience

Video meetings seemed to provide an elegant solution for remote work, but they wear on the psyche in complicated ways. Until recently, Eichler-Levine, a professor at Lehigh University in Pennsylvania, was leading live classes full of people whose emotions she could easily gauge, even as they navigated difficult topics, such as slavery and the Holocaust, that demand a high level of conversational nuance and empathy. Now the COVID-19 pandemic has thrust her life into a virtual space, and the experience is taking its toll. “It is almost like you are emoting more because you are just a little box on a screen,” Eichler-Levine says, adding that she feels very tired after a video session. Others report similar experiences, often calling it ‘Zoom fatigue’, although the exhaustion applies to any video-calling platform. “There is a lot of research that shows why we actually really struggle with this,” says Andrew Franklin, an assistant professor of cyberpsychology at Virginia’s Norfolk State University. During a person-to-person conversation, the brain focuses partly on the words being spoken, but it also derives additional meaning from dozens of non-verbal cues, such as whether someone is facing you, turns slightly away, fidgets while you talk or inhales quickly in preparation to interrupt. Since humans evolved as social animals, perceiving these cues comes naturally to most of us. On a video call, however, these cues are harder to read, and attention is split across many small faces at once. “We are engaged in numerous activities, but never fully devoting ourselves to focus on anything in particular,” says Franklin. As a result, video group meetings become less collaborative and more like siloed panels, in which only two people talk at a time while the rest listen. If you view only one speaker at a time, you cannot see how the other participants are behaving, something you would normally pick up with peripheral vision.
This point was underscored last week in an opinion article for the Wall Street Journal, in which Stanford professor Jeremy Bailenson outlined why video conferencing on Zoom can feel so exhausting. In his view, Zoom forces people to display behaviors usually reserved for close, intimate relationships, producing what he calls “nonverbal overload”: making direct eye contact for long periods of time or focusing closely on someone’s face. In the real world, Bailenson points out, individuals can control their own personal space, manage their distance from coworkers and choose where to sit during a meeting. On Zoom, however, there is no concept of spatial distance, since the experience exists in a flat 2D window.

New 3D and virtual reality concepts, such as holography, might improve remote communication and foster some sense of togetherness during a pandemic. However, they cannot replace the emotional energy and creativity generated by individuals working in co-located environments.

Towards AI that supports human consciousness

During the virtual 2020 ‘International Conference on Learning Representations (ICLR)’ last week, Yoshua Bengio, Turing Award winner and director of the Montreal Institute for Learning Algorithms, offered a glimpse into the future of AI and highlighted several machine learning techniques. One of them was ‘attention’, the mechanism by which a person (or algorithm) focuses on a single element or a few elements at a time. Attention is central both to machine learning model architectures such as Google’s Transformer and to the bottleneck theory of consciousness in neuroscience, which suggests that people have limited attention resources, so information is distilled down in the brain to only its salient parts. Models with attention have already achieved state-of-the-art results in domains such as natural language processing, and they could become the foundation of enterprise AI that assists employees in a range of cognitively demanding tasks. During the conference, Bengio also referred to the theory proposed by psychologist and economist Daniel Kahneman in his seminal book ‘Thinking, Fast and Slow’, which defines two systems of thinking. The first is unconscious: it is intuitive and fast, non-linguistic and habitual, and it deals only with implicit knowledge. The second is conscious: it is linguistic and algorithmic, and it incorporates reasoning and planning as well as explicit knowledge. An interesting property of the conscious system is that it allows semantic concepts to be manipulated and recombined in novel situations, which Bengio noted is a desirable property for AI and machine learning algorithms. Current machine learning approaches have yet to move beyond the unconscious to the fully conscious, but Bengio believes this transition is well within the realm of possibility. He pointed out that neuroscience research has revealed that the semantic variables involved in conscious thought are often causal.
It is also now understood that a mapping between semantic variables and thoughts exists, much like the relationship between words and sentences. Attention is one of the core ingredients in this process, Bengio explained. Building on this idea, he and colleagues have proposed ‘Recurrent Independent Mechanisms (RIMs)’, a model architecture in which multiple groups of artificial neurons operate independently and communicate only sparingly through attention. “Consciousness has been studied in neuroscience with a lot of progress in the last couple of decades. I think it is time for machine learning to consider these advances and incorporate them into machine learning models,” Bengio said.
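To make the idea of attention more concrete, here is a minimal, pure-Python sketch of single-query scaled dot-product attention, the basic operation used in Transformer-style models: each stored item is weighted by how well its key matches a query, and the weights sum to 1. The second function, `top_k_modules`, is a hypothetical and heavily simplified illustration of RIMs-style sparse activation, in which only the k modules that score highest against the input are switched on; it is an assumption for illustration, not Bengio and colleagues’ implementation. All function names and the toy vectors are invented for this example.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: turns raw scores into weights summing to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    query: Vector, keys: List[Vector], values: List[Vector]
) -> Tuple[Vector, Vector]:
    """Single-query attention: weight each value by how well its key matches the query."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    # The output is the attention-weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


def top_k_modules(query: Vector, module_keys: List[Vector], k: int) -> List[int]:
    """Hypothetical, simplified RIMs-style gating: only the k modules whose keys
    score highest against the current input are activated; the rest stay idle."""
    scores = [dot(query, key) for key in module_keys]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


if __name__ == "__main__":
    query = [1.0, 0.0]
    keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
    output, weights = scaled_dot_product_attention(query, keys, values)
    print("attention weights:", [round(w, 3) for w in weights])
    print("output:", [round(x, 3) for x in output])
    module_keys = [[0.9, 0.1], [0.1, 0.9], [0.5, 0.5], [0.8, 0.0]]
    print("active modules:", top_k_modules(query, module_keys, k=2))
```

In the toy run, most of the attention weight goes to the first key, which points in the same direction as the query, so the output is pulled towards that key’s value. The top-k gating shows, in the simplest possible form, how attention can limit which independent parts of a model take part in a given step.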

The limits of current AI in simulating emotions and feelings

As scientific discovery further reveals the computational and emotional complementarities that constitute the marvel of the biologically grounded human mind, it also reveals the limits of artificial intelligence. Technologists across the world have embarked on a quest to create a new species in our own image: artificial general intelligence with superior computational brain power. But we are only now beginning to understand the foundations of human intelligence and consciousness. As Antonio Damasio, neuroscientist and professor at the University of Southern California, argues in a recent interview about his book ‘The Strange Order of Things’, these foundations cannot be captured in an algorithmic formula divorced from the functions of the body and the long evolution of our species and its microbiome. Feelings and emotions are constitutive of human intelligence, consciousness and the capacity for creativity. In short, a map of the computational mind is not the territory of what it means to be human. “Our minds operate in two registers,” Damasio explains. “In one register, we deal with perception, movement, memories, reasoning, verbal languages and mathematical languages. This register needs to be precise and can be easily described in computational terms. This is the world of synaptic signals that is well captured by AI and robotics. But there is a second register that pertains to emotions and feelings that describes the state of life in our living body and that does not lend itself easily to a computational account. Current AI and robotics do not address this second register.”

Conclusion

The COVID-19 pandemic has caught humanity largely unprepared. While its devastating effects will be felt for a long time, the potential of AI to help handle the current outbreak and control future pandemics is already apparent. Economic lockdown, isolation and social distancing might be the only way to get back to ‘normal’ today. In the future, we will need far more refined and informed ways of reacting to a pandemic. While our technical means will improve over time, raising consciousness to battle fear is a must.