“The intuitive mind is a sacred gift and the rational mind is a faithful servant. We have created a society that honours the servant and has forgotten the gift.” Albert Einstein

Introduction

It is impossible not to associate the success of Apple and its products with its co-founder, Steve Jobs. His ability to create hugely popular products while cementing Apple as a premier brand is widely recognized. He became fascinated with the power of intuition when he visited India as a young man, long before Apple was founded, and, as recounted in his authorized biography by Walter Isaacson, he credited intuition with having a major impact on his work. In his famous 2005 commencement address at Stanford University, he urged the graduates to trust their intuition as they moved forward in life.

Many people consider analytic and intuitive thinking to be opposites. However, a 2015 meta-analysis (a statistical analysis that pools the results of a group of studies) has shown that analytic and intuitive thinking are typically not correlated and can occur at the same time. So, while one style of thinking, usually the analytic one, often feels dominant over the other, the subconscious nature of intuitive thinking makes it hard to determine exactly when it occurs.

Research suggests that the brain is a vast biological pattern-recognition system, constantly comparing incoming sensory information and current experiences against stored knowledge and memories of previous experiences. Our intuition develops and expands throughout our lifetime: every interaction, happy or sad, is catalogued in our memory. When we subconsciously spot patterns, the body starts releasing neurochemicals in both the brain and the gut. These “somatic markers” give us that instant feeling that something is right or wrong. Not only are these automatic processes faster than rational thought, but our intuition also draws on decades of diverse qualitative experience (sights, sounds, interactions, etc.), a human capability that AI and deep learning have so far been unable to match.

What is Intuition?

According to Wikipedia, intuition is the ability to acquire knowledge without proof, evidence or conscious reasoning. Different writers give the word ‘intuition’ a wide variety of meanings, ranging from direct access to unconscious knowledge to the ability to understand something instinctively, without the need for conscious reasoning.

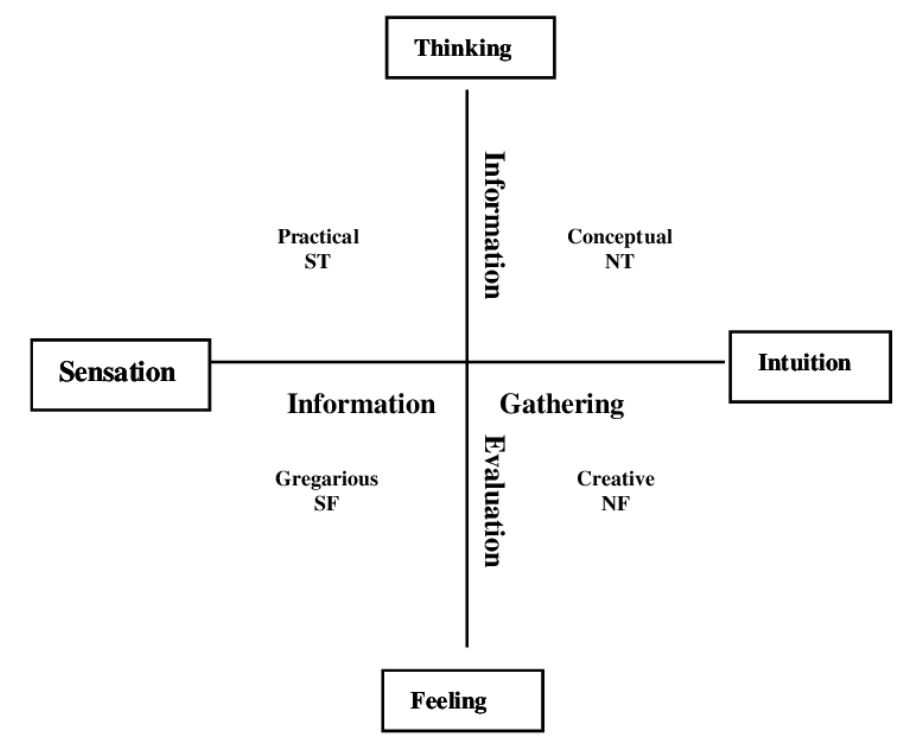

In Carl Jung’s theory of the ego, intuition is an ‘irrational function’, opposed most directly by sensation, and opposed less strongly by the ‘rational functions’ of thinking and feeling, as depicted in the graph above.

In Jung’s view, intuition is ‘perception via the unconscious’. Using sensation as a starting point, intuition brings forth ideas or images by a process that is mostly unconscious.

According to Gerd Gigerenzer, director at the Max Planck Institute for Human Development, intuition is less about suddenly ‘knowing’ the right answer than about instinctively understanding what information is unimportant and can thus be discarded. This inward focus requires an ‘integrative awareness’ that seeks to tune into unseen signals and messages. In his view, intuition could be defined as the intelligence of the unconscious. In some respects, intuition could also be thought of as a form of collective intelligence. For example, most websites are organized in an intuitive way, which means they are easy for most people to understand and navigate. This approach evolved over many years of experimenting with different concepts, as a common wisdom emerged about what information was superfluous and what was essential.

Intuition and Decision-Making

Most of us are used to making intuitive decisions in our daily lives. As soon as subjective judgement is involved, rational reasoning is very difficult to apply. Typical examples where intuition can play an important role in decision-making are choosing a life partner, selecting the right car to buy, evaluating a job, deciding on an education, choosing a meal when eating out, picking the next book to read, deciding what to wear today, and so on.

Intuitive decision-making goes beyond applying common sense because it involves additional sensors that perceive information coming from within ourselves as well as from the outside world. It is sometimes referred to as gut feeling, sixth sense, inner sense, instinct, inner voice, spiritual guide, etc. It is related to developing a higher consciousness in order to train these sensors and to make the process of receiving information intuitively a more conscious one. Intuition is also faster than rational thought, which makes it a necessary skill for decision-making when time is short and traditional analytics may not be available. When we step back from conscious thought and turn a problem over to the unconscious mind, it scans a broader array of patterns and can find new close fits among other information stored in the brain. Intuition facilitates a kind of mental cross-training that deep learning and neural networks have so far been unable to replicate. However, one must keep in mind that both deep-learning algorithms and intuition can lead to wrong conclusions.

In his book ‘Thinking, Fast and Slow’, Nobel laureate Daniel Kahneman presents the following problem: A baseball bat and a ball together cost $1.10. The bat costs $1 more than the ball. How much does the ball cost? Our intuition wants to say 10 cents, but that is wrong. If the ball cost 10 cents and the bat $1 more, the bat would cost $1.10, making the total $1.20. The correct answer is that the ball costs 5 cents and the bat $1.05, bringing the total to $1.10.
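The slow, analytic route to the correct answer of the bat-and-ball problem can be made explicit. If the ball costs x cents, the bat costs x + 100 cents, so x + (x + 100) = 110, which gives 2x = 10 and x = 5. The short Python sketch below is only an illustration (the function names are our own, not from Kahneman's book): it solves the two equations in whole cents, which avoids floating-point rounding, and checks both the intuitive and the correct answer against the stated total.

```python
def solve_bat_and_ball(total_cents: int, difference_cents: int) -> tuple[int, int]:
    """Solve ball + bat = total and bat - ball = difference, in whole cents.

    Substituting bat = ball + difference into the first equation gives
    2 * ball + difference = total, so ball = (total - difference) / 2.
    """
    if (total_cents - difference_cents) % 2 != 0:
        raise ValueError("no whole-cent solution")
    ball = (total_cents - difference_cents) // 2
    bat = ball + difference_cents
    return ball, bat


def total_for_ball_price(ball_cents: int, difference_cents: int) -> int:
    """Total cost implied by a guessed ball price (bat = ball + difference)."""
    return ball_cents + (ball_cents + difference_cents)


ball, bat = solve_bat_and_ball(110, 100)
print(f"ball = {ball} cents, bat = {bat} cents")  # ball = 5 cents, bat = 105 cents
print(total_for_ball_price(10, 100))  # 120: the intuitive 10-cent guess overshoots
print(total_for_ball_price(ball, 100))  # 110: the correct answer checks out
```

Checking a quick, intuitive guess against the constraints it must satisfy is exactly the kind of deliberate step that catches the 10-cent error.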

How to Balance AI and Intuition in a Corporate Setting

The question should not be whether rational reasoning and AI are better than intuitive decision-making, but rather how the two approaches can best be combined for optimal results. We tend to undervalue the role intuition plays in decision-making, especially at high levels of leadership. Rather than valuing one over the other, one can combine insights from both AI and intuition to make decisions. Here are some suggestions on how to do it:

- Intuition and AI can exist in harmony, especially if companies actively create teams that combine people from diverse schools of thought and where team members and managers respect each other’s insights. Teams that are echo chambers for one way of thinking fall into restrictive groupthink, which hinders creativity.

- Intuition can be used to develop a theory, which can then be tested as a hypothesis with AI. An educated guess fuelled by intuition can point in the direction of a potentially remarkable discovery. Once you have a theory, you can put it to the test with various data sets and deep-learning algorithms to arrive at a well-founded decision.

- Cultivating intuition does not just rely on your brain’s ability to detect patterns. It also requires empathy, a skill that can be a big competitive advantage. Empathy allows you to observe a problem, see how it affects others, and determine how you can fix it from a deeper understanding of what drives human needs and desires.

- As digital transformation makes corporate knowledge available to everyone who contributes to the corporation’s success, collective intelligence becomes one of its key assets. Managing this resource successfully requires a high degree of intuition: sensing the ‘mental state’ of the corporation in order to respond to potential risks and rewards.

In practice, for every situation that involves a decision, consider whether your intuition has correctly assessed it. Is it an evolutionarily old situation or a new one? Do you have experience or expertise in this type of situation? If not, rely on analytic thinking; otherwise, feel free to trust your intuition.

Implementing Human-like Intuition in AI

For decades, AI capable of applying common sense has been one of the most difficult research challenges in the field: artificial intelligence that ‘understands’ the function of things in the real world and the relationships between them, and that is able to infer intent, causality, and meaning. AI has made astonishing advances over the years, but the bulk of AI currently deployed is based on statistical machine learning, which requires vast amounts of training data to build a statistical model, for example one that distinguishes images of cats from images of dogs. One of the things such statistical models lack is any understanding of how objects behave, for example that dogs are animals that sometimes chase cats. Current algorithms and neural models cannot match the results of human intuition. Most of these models are logic-driven and time-dependent, and they lack the ability to give consistently accurate results. When the available information is insufficient to draw a conclusion, the logical process simply gets stuck.

Infants learn by interacting with the real world, which appears to train various intuitive engines or simulators inside the brain without the need for massive amounts of training data. Researchers at MIT led by Josh Tenenbaum hypothesize that our brains have what you might call intuitive physics and intuitive social engines. The information we gather through our senses is imprecise, but we nonetheless infer what will probably happen, which lets us, for example, intuitively stop a cup of coffee from falling over the edge of a table. In addition, infants from birth exhibit a social engine that recognizes faces, tracks gazes, and tries to understand how other social agents in the world think, behave, and interact with them and with each other. In Tenenbaum’s view, the brain is set up to develop certain concepts, and to appreciate human intelligence fully, it is necessary to model the core knowledge of very young children. This includes intuition about both physics and sociology, in particular our ability to interpret other people’s actions in terms of their mental states, beliefs, and objectives.

Conclusion

To advance AI, we need a much better understanding of humans, with respect to both intelligence and intuition. This implies that neuroscience and behavioural science are essential to advancing AI. The MIT Quest for Intelligence, launched in March 2018, aims to complement AI with true intelligence and intuition. In MIT’s view, the Institute’s longstanding culture of collaboration will encourage life scientists, computer scientists, social scientists, and engineers to join forces to investigate the societal implications of their work as they pursue hard problems beyond the current horizon of intelligence research, contemplating ideas such as:

- Imagine if the next breakthrough in artificial intelligence came from the root of intelligence itself: the human brain.

- Imagine if artificial intelligence was socially and emotionally intelligent enough to empower everyone to flourish—from individuals to societies.

- Imagine if we could build a machine that grows into intelligence the way a person does—that starts like a baby and learns like a child.

Time will tell whether this optimism will prevail and counterbalance the potential for AI-inflicted human degradation, with its unforeseen consequences and social unrest.

These fantasies scare me. I do not want to interact with a machine that is socially and emotionally intelligent to “empower everyone to flourish”. The promises are always great, but what actually happens is not so great... If I imagine that we could build a machine that grows into intelligence the way a person does, who does the programming, and with what bias? Are the programmers and developers fully aware of all possible implications, or do they just fantasize about all the wonderful new possibilities, “the brave new world”? Does MIT also engage specialists in ethics when developing these new AI systems?

Hi Katherine. I read the article and then read your post, and you do bring up valid concerns. When you state, “I do not want to interact with a machine that is socially and emotionally intelligent to ‘empower everyone to flourish’ – the promises are always great, what actually happens is not so great…”, I am sure you can cross-reference the massive failures across all of humanity regarding the exact same thing, without using machines. Or think of an unscrupulous business person in ancient China using an abacus in a manner that deceives their customer. Is it the machine that has failed, or is it a failure of morals and ethics on the part of the business person?

I realize that is a far cry from an advanced AI system, but you are correct that baked-in bias will have to be overcome. I believe the fear of AI being constantly programmed by dark overlords, mean white people, Latin gangsters, or whoever is your targeted “feared” audience is in itself a form of bias. Why do we automatically presume that y happens just because x happened in the past?

There are plenty of legacy networks and systems that are indeed hard-coded with a lineage of bias, and I believe the author implies from the get-go that this type of thing cannot be allowed. I would envision a system that gives equal categorical weight to all forms, colors and identities of humanity, giving no preference to one or the other, but cross-references at incredible speed how any given event might happen and what its ramifications are, based on historical information mixed with not only math and science but also philosophy, the arts and the humanities. One of the most important necessities is some form of globally recognized “oracles”, widely held and agreed upon, that ML, AI and things of this nature can “dip” into. Imagine a database that has been agreed on not only by men and women, but by trans, hetero and gay people, and that represents all. Then it could be said that the training information was completely developed and had everyone’s consensus.

The training phase of an AI system is where you might be thinking of it having baked-in bias, but I think it is fair to assume that you already have bias baked into your humanity, and you are not AI.

So then the question becomes how do we take bias out of humanity?