Budgeting 2021 Picture Credit: Consilium.europa.eu

Introduction: What is Common-Sense?

It was at the beginning of the 18th century that this old philosophical term first acquired its modern meaning. Common-sense became the basis for modern thinking, in contrast to the metaphysics practised during the Middle Ages. Today, common-sense, and how it should best be applied, remains linked to many topics in ethics and in the philosophy of the modern social sciences. Common-sense represents all the background knowledge about the physical and social world that we absorb during our lives. It includes our understanding of basic physics (causality, gravity, temperature) as well as our expectations about how humans behave.

Common-sense reasoning is a branch of artificial intelligence (AI) concerned with simulating the human capacity to make assumptions about the purpose, intentions and behaviour of people and objects, as well as about the possible outcomes of their actions and interactions in decision-making.

Common-sense is indeed very common. However, each of us has a different idea of what it is. In many ways it is more related to judging than to thinking. As the psychologist and Nobel Laureate Daniel Kahneman notes in his book ‘Thinking, Fast and Slow’, when we arrive at conclusions based on common-sense, the conclusions feel true, regardless of whether they are correct. We are psychologically ill-equipped to judge our own thinking.

Problems Integrating Common-Sense Reasoning in AI

AI researchers have so far not succeeded in giving intelligent machines the common-sense knowledge they need to reason about the world. Without this knowledge, machines cannot truly interact with the world. Traditionally, there have been two approaches to getting computers to reason with common sense, neither of which has fully succeeded: Symbolic Reasoning and Deep Learning.

Symbolic Reasoning

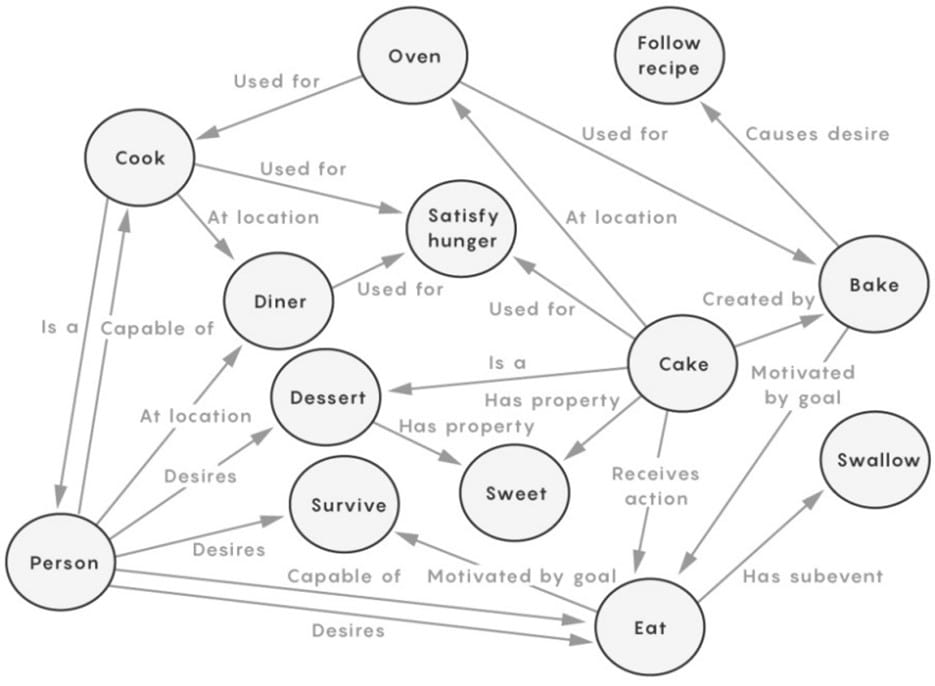

The first attempt in AI was to program rules for common sense. Although this led to some success with rule-based expert systems, the approach has not succeeded in capturing common sense. Starting in 1984, a project called ‘Cyc’ was designed to capture common-sense knowledge in a knowledge base and to map the relationships within that knowledge. Rodney Brooks, MIT professor and AI entrepreneur, made the following comment about Cyc: “While it has been a heroic effort, it has not led to an AI-system being able to master even a simple understanding of the world.” The basic problem is that language is fuzzy. For example, the word ‘bass’ can mean a type of fish, a low-frequency tone, a type of instrument, or the name of a person or place. Semantic networks apply symbolic reasoning to tackle this fuzziness problem. One example of such a network is ConceptNet, which is built from crowd-sourced knowledge: people enter what they consider to be common-sense knowledge. The following diagram provides an example of how the ConceptNet network defines the word “cake”:

The problem with this approach is that the information needed to draw conclusions is not represented in the network. A cake can be a snack as well as a dessert, and it may or may not satisfy hunger. You might eat cake simply because you want something sweet. It is unlikely, although theoretically possible, that a person would eat a cake at the location of an oven, especially if the oven is hot.
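The gap described above can be seen in a minimal Python sketch of a semantic network stored as (head, relation, tail) triples. The relation names IsA, UsedFor, AtLocation and HasProperty are borrowed from ConceptNet's vocabulary, but the edges themselves are illustrative assumptions rather than actual ConceptNet data, and `build_index` and `query` are hypothetical helpers, not ConceptNet's API. The graph answers direct lookups well, but nothing in it connects "cake at the oven" with "would someone eat it there".

```python
from collections import defaultdict

# A tiny hand-made semantic network as (head, relation, tail) triples.
# Relation names follow ConceptNet's style; the edges are illustrative only.
TRIPLES = [
    ("cake", "IsA", "dessert"),
    ("cake", "IsA", "snack"),
    ("cake", "UsedFor", "satisfy hunger"),
    ("cake", "AtLocation", "oven"),
    ("oven", "HasProperty", "hot"),
]


def build_index(triples):
    """Group tails by (head, relation) so lookups are a single dict access."""
    index = defaultdict(list)
    for head, relation, tail in triples:
        index[(head, relation)].append(tail)
    return index


def query(index, head, relation):
    """Return every tail linked to `head` by `relation` (empty list if none)."""
    return index.get((head, relation), [])


index = build_index(TRIPLES)
print(query(index, "cake", "IsA"))         # ['dessert', 'snack']
print(query(index, "cake", "AtLocation"))  # ['oven']
# "EatenAt" is a hypothetical relation: the graph stores no edge saying
# where cake is eaten, so the common-sense inference cannot be drawn.
print(query(index, "cake", "EatenAt"))     # []
```

Each fact is retrievable on its own, but combining "cake is at the oven" with "the oven is hot" into a judgement about eating requires reasoning the triples do not encode.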

Deep Learning

Deep Learning has achieved more success than methods based on symbolic reasoning, as the following examples show:

- a) AlphaGo, built to play the ancient board game Go, combines two deep neural networks. The policy network predicts the next move and is used to narrow the search so that only the moves most likely to lead to a win are considered. The value network reduces the depth of the search tree by estimating the winner in each position instead of searching to the end of the game. Because the policy network suggests promising moves and the value network evaluates positions, AlphaGo can choose the move that performed best in its simulations. However, AlphaGo and similar approaches cannot deal with unforeseen events, which limits their potential for other problem-solving tasks.

- b) Generative Pre-trained Transformer (GPT) models use deep learning to deal with the ‘fuzziness’ of language. GPT models are pre-trained and use a statistical model of language expressed in millions or billions of parameters in a neural network. When they are fine-tuned for a specific task, such as answering questions or paraphrasing text, they can give the impression that they understand what they are reading.

- c) Bidirectional Encoder Representations from Transformers (BERT) is a neural network that tries to understand written language. It is a natural language processing (NLP) algorithm that uses a neural network to create pre-trained models. Unlike earlier left-to-right language models, BERT is bidirectional: it interprets each word using the context on both its left and its right. Google, which developed BERT, claims that it achieves above human-level accuracy on tests such as the Stanford Question Answering Dataset (SQuAD).
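To make the division of labour in item a) concrete, here is a toy sketch in Python. It is not AlphaGo's method: AlphaGo combines its networks with Monte Carlo tree search, whereas this sketch swaps in a plain depth-limited negamax search on a simple take-away game (players remove 1, 2 or 3 stones; whoever takes the last stone wins). The functions `policy` and `value` are hypothetical hand-written stand-ins for the two neural networks: `policy` narrows the breadth of the search to the `top_k` most promising moves, and `value` replaces playing to the end of the game with an estimate once the depth budget runs out.

```python
# Toy take-away game: players alternately remove 1, 2 or 3 stones;
# whoever takes the last stone wins.


def policy(stones):
    """Hypothetical stand-in for a policy network: a prior score for each
    legal move (higher means more promising)."""
    moves = [m for m in (1, 2, 3) if m <= stones]
    # Favour moves that leave the opponent a multiple of four stones.
    return {m: (1.0 if (stones - m) % 4 == 0 else 0.1) for m in moves}


def value(stones):
    """Hypothetical stand-in for a value network: estimated outcome for the
    player to move (+1 win, -1 loss) without playing to the end."""
    return -1.0 if stones % 4 == 0 else 1.0


def search(stones, depth, top_k=2):
    """Depth-limited negamax. Returns (score for the player to move, best move)."""
    if stones == 0:
        return -1.0, None  # the opponent took the last stone, so we have lost
    if depth == 0:
        return value(stones), None  # depth cut: trust the value estimate
    priors = policy(stones)
    # Breadth cut: only expand the top_k moves ranked by the policy.
    candidates = sorted(priors, key=priors.get, reverse=True)[:top_k]
    best_score, best_move = float("-inf"), None
    for move in candidates:
        opponent_score, _ = search(stones - move, depth - 1, top_k)
        score = -opponent_score  # what is good for the opponent is bad for us
        if score > best_score:
            best_score, best_move = score, move
    return best_score, best_move


print(search(10, depth=3))  # (1.0, 2): take two stones, leaving eight
```

Both stand-ins happen to encode the known winning rule for this game (leave your opponent a multiple of four), which a real network would have to learn from data. The point of the sketch is the structure: the policy keeps the tree narrow and the value keeps it shallow.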

New attempts to model Common-Sense Reasoning

Modelling common-sense with AI is the goal of COMET (Commonsense Transformers). The project attempts to combine symbolic reasoning with neural network language models. Introduced in 2019 by Yejin Choi of the Allen Institute for AI and her colleagues, the idea is to give a language model additional training on a common-sense knowledge base. The language model can then generate common-sense inferences, much as a generative network learns to generate text. Choi and her colleagues fine-tuned a neural language model on common-sense knowledge from a knowledge base called ATOMIC. A further key idea is to enhance language with visual perception or embodied sensation. Presumably, humans used common-sense to understand the world before they could communicate, hence the idea of teaching intelligent machines to interact with the world the way a child does. Accordingly, Choi and her colleagues are augmenting COMET with labelled pictures, hoping to obtain a neural network that can learn from knowledge bases without human supervision.
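The fine-tuning step described above rests on turning knowledge-base entries into text a language model can learn from. As a minimal sketch, assuming tuples of the form (head event, relation, tail inference) as used by ATOMIC, each tuple can be rewritten as a prompt (head plus relation) and a target (the tail). The relation names xIntent, xNeed and oReact follow ATOMIC's naming; the example tuples, the `[GEN]` marker and the helper `to_training_pair` are hypothetical, and the actual model training is omitted.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class KnowledgeTuple:
    head: str      # an everyday event, e.g. "PersonX buys a cake"
    relation: str  # an ATOMIC-style relation such as "xIntent"
    tail: str      # the common-sense inference the model should generate


# Hypothetical marker telling the model where its generated answer starts.
GEN_TOKEN = "[GEN]"


def to_training_pair(t: KnowledgeTuple) -> Tuple[str, str]:
    """Rewrite one tuple as (prompt, target) text for fine-tuning.

    The prompt holds the head event and the relation; the target is the tail.
    The hope is that a model trained on many such pairs can produce a
    plausible tail for head/relation combinations it has never seen.
    """
    prompt = f"{t.head} {t.relation} {GEN_TOKEN}"
    return prompt, t.tail


# Illustrative tuples written for this sketch (not copied from ATOMIC).
EXAMPLES = [
    KnowledgeTuple("PersonX buys a cake", "xIntent", "to celebrate a birthday"),
    KnowledgeTuple("PersonX buys a cake", "xNeed", "to go to a bakery"),
    KnowledgeTuple("PersonX bakes a cake", "oReact", "grateful"),
]

pairs = [to_training_pair(t) for t in EXAMPLES]
for prompt, target in pairs:
    print(f"{prompt!r} -> {target!r}")
```

Framing knowledge as text in this way is what lets a generative language model, rather than a hand-built rule engine, be the component that produces new inferences.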

Common-sense is considered the holy grail on the road to human-level AI. Although AI has not yet overcome this barrier, there are indications that it will eventually support decision-making in a variety of situations, matching different models to the different problems to be solved.

Common-Sense vs. Human Intelligence

In his book ‘What Intelligence Tests Miss’, published in 2009, Keith Stanovich, Professor Emeritus of Applied Psychology and Human Development at the University of Toronto, offers a way to understand the difference between intelligence and common-sense. Stanovich argues that there are really three components of our cognitive ability that distinguish common-sense from intelligence:

First, there is what Stanovich calls the autonomous mind. It activates your thinking based on simple associations; it allows you to do what you have always done in the past, and in fact to feel as though you are on autopilot, because the autonomous mind operates very rapidly and effortlessly. For example, when you face 60 types of bread at the supermarket and simply buy the bread that you usually buy, you are using the autonomous mind.

Second, there is the algorithmic mind. If the bread you usually buy is out of stock, you will be forced to use it. The algorithmic mind processes information, juggling concepts in the brain’s working memory, making comparisons among them, combining them in different ways, and so forth. Thus, you might examine different brands of bread to determine which is most like the one you usually buy in terms of cost and nutritional content. Intelligence is an indicator of the algorithmic mind, which, however, lacks reflection.

Third, the reflective mind is concerned with the goals of the problem to be solved, the beliefs relevant to this problem, and the selection of actions to reach these goals. Stanovich stresses that this tendency to think a problem through, rather than accept the answer provided by the autonomous mind, is only weakly related to intelligence. Even though both the algorithmic and the reflective mind are important in tasks we associate with intelligence, we consider only the functions of the algorithmic mind ‘intelligent’, and these are all that IQ tests measure.

Heuristics: From Common-Sense to Decision-Making

Related to common-sense, a heuristic is a mental shortcut that allows people to solve problems and make judgments quickly and efficiently. These rule-of-thumb strategies shorten decision-making time and allow people to function without constantly thinking about their next course of action. Heuristics are helpful in many situations, but they can also lead to cognitive biases. In the 1950s, the Nobel Prize-winning economist and psychologist Herbert Simon suggested that while people strive to make rational choices, human judgment is subject to cognitive limitations. To cope with the tremendous amount of information we encounter and to speed up decision-making, the brain relies on mental strategies that simplify things, so we don’t have to spend endless amounts of time analysing every detail. Three of the most common strategies are the following:

The availability heuristic involves making decisions based upon how easy it is to bring something to mind. When you are trying to make a decision, you might quickly remember a number of relevant examples. Since these are more readily available in your memory, you will likely judge these outcomes as being more common.

The representativeness heuristic involves making a decision by comparing the present situation to the most representative mental prototype. When you are trying to decide if someone is trustworthy, you might compare aspects of the individual to other mental examples you hold.

The affect heuristic involves making choices that are influenced by the emotions an individual is experiencing at that moment. For example, research has shown that people are more likely to see decisions as having higher benefits and lower risks when they are in a positive mood. Negative emotions, on the other hand, lead people to focus on the potential downsides of a decision.
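One way the representativeness heuristic introduces errors is by ignoring base rates, that is, how common each category actually is. The sketch below follows the spirit of Kahneman's well-known question of whether a shy, tidy man is more likely to be a librarian or a farmer; all numbers are illustrative assumptions, not data. Judging by fit alone picks the group whose prototype matches best, while Bayes' rule also weighs that fit by the size of each group.

```python
# Illustrative assumptions, not measured data:
# how well a "shy and tidy" description fits each group, P(description | group)
FIT = {"librarian": 0.4, "farmer": 0.1}
# relative group sizes (assumed: 20 farmers for every librarian)
BASE_RATE = {"librarian": 1, "farmer": 20}


def representativeness_guess(fit):
    """Pick the group whose prototype the description resembles most."""
    return max(fit, key=fit.get)


def bayesian_guess(fit, base_rate):
    """Weigh fit by group size (Bayes' rule); return best group and posteriors."""
    joint = {group: fit[group] * base_rate[group] for group in fit}
    total = sum(joint.values())
    posterior = {group: p / total for group, p in joint.items()}
    return max(posterior, key=posterior.get), posterior


print(representativeness_guess(FIT))  # librarian
best, posterior = bayesian_guess(FIT, BASE_RATE)
print(best, round(posterior["librarian"], 3))  # farmer 0.167
```

With these assumed numbers the description fits a librarian four times better, but because farmers are twenty times more common, the probability that the man is a librarian is only 0.4 / (0.4 + 2.0), or about 0.17.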

While heuristics can speed up our decision-making process, they can also introduce errors. Just because something has worked in the past does not mean that it will work again, and relying on an existing heuristic can make it difficult to see alternative solutions or to come up with new ideas.

AI can overcome the weaknesses of Heuristics in Decision-Making

Common-sense is not a science. Nowhere is this more evident than in the worlds of quantum mechanics and relativity, where our common-sense intuitions are hopelessly inadequate to deal with quantum unpredictability and space-time distortions. In science, the highest unit of cognition is not the individual but the community of scientific enquiry. Following this path, research into the biological functioning of the human brain is increasingly taking the lead in AI development. This, combined with the ongoing exponential growth in the computational capacity of intelligent machines and their ability to process huge volumes of data, will lead to powerful tools that support better decision-making. While Artificial General Intelligence (AGI), which would provide general problem-solving capability, still has a long way to go, app-like AI tools will augment and improve heuristic decision-making in the years to come.

As you mentioned in your introduction, and as I said before, AI can be very useful to SUPPORT our decision-making, not to do it for us…. Every animal has common sense because it learns it through the experience of the physical body; dogs, which we can observe very well, even have a very good grasp of physical laws…….

Wishing you and all of us that AI will be an ever-improving servant, and not become the master, in the coming new year and in our future… With best wishes, Katharina